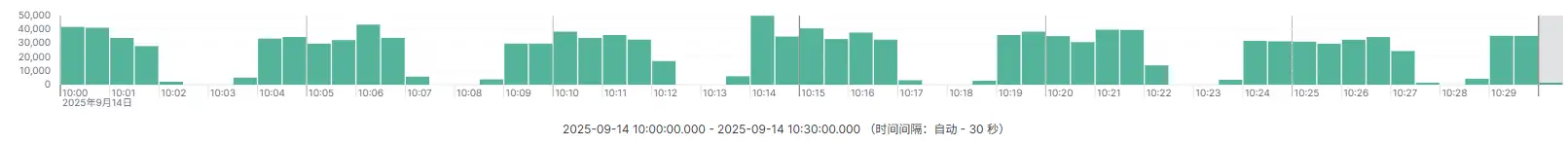

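Our production web access logs flow through a Kafka message queue. Log volume recently spiked, and the log stream in Kibana Discover became intermittent, with visible gaps, as the screenshot shows.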

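With the log stream clearly abnormal, I started troubleshooting at the log collection source. After a long look there were no error logs on that side, so I moved on to the message queue layer, the Kafka cluster. Checking the offsets of the topic's consumer group showed that the LAG values were very high.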

    /usr/local/kafka/bin/kafka-consumer-groups.sh \
      --bootstrap-server kafka01.koevn.com:29092,kafka02.koevn.com:29092,kafka03.koevn.com:29092 \
      --describe \
      --group nginx_logs \
      --command-config /usr/local/kafka/config/kraft/client.properties

    GROUP       TOPIC       PARTITION  CURRENT-OFFSET  LOG-END-OFFSET  LAG    CONSUMER-ID                                        HOST         CLIENT-ID
    nginx_logs  nginx_logs  4          86620695        86629105        8410   logstash01-4-031428c4-9904-4ee4-ba59-94a9bd0069da  /10.18.6.11  logstash01-4
    nginx_logs  nginx_logs  0          135465030       135496007       30977  logstash01-0-386b12ac-4bfa-46ed-903d-c2e2b02f4ef0  /10.18.6.11  logstash01-0
    nginx_logs  nginx_logs  5          86623919        86632562        8643   logstash01-5-fe357272-3e80-4cd7-961b-6988ecf8a448  /10.18.6.11  logstash01-5
    nginx_logs  nginx_logs  7          86635578        86643137        7559   logstash01-7-49358d2f-5727-4b6d-9332-369a8c2615bf  /10.18.6.11  logstash01-7
    nginx_logs  nginx_logs  3          86615556        86627926        12370  logstash01-3-b15d1017-c25c-4d9a-bfb0-ebb99d30cf63  /10.18.6.11  logstash01-3
    nginx_logs  nginx_logs  2          86619855        86629393        9538   logstash01-2-73528037-939d-4458-9bed-6ab1d548ddda  /10.18.6.11  logstash01-2
    nginx_logs  nginx_logs  1          86608256        86618297        10041  logstash01-1-af6ecee3-b5bf-448c-b3ba-435b559ea59a  /10.18.6.11  logstash01-1
    nginx_logs  nginx_logs  6          86616432        86624058        7626   logstash01-6-26f87af8-6664-4102-8f7e-21d95ec02669  /10.18.6.11  logstash01-6

The `--command-config` parameter is needed because the Kafka cluster is configured with SSL, so every Kafka operation has to point at a client properties file that specifies the certificate paths.

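The gap between the produced and consumed offsets was in the thousands to tens of thousands per partition. In other words, messages were being produced faster than they were consumed: the consumer side could not keep up, the queue backed up, and that is why the log stream in Kibana Discover looked intermittent. The fix was to add another Logstash instance on the consumer side, configured as follows.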
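The LAG column is just LOG-END-OFFSET minus CURRENT-OFFSET for each partition. As a minimal sketch (the helper name `parse_lag` and the two-row sample are illustrative, not part of the original setup), the `--describe` output can be parsed and the lag recomputed like this:

```python
def parse_lag(describe_output):
    """Return {partition: lag} from `kafka-consumer-groups.sh --describe` text."""
    lags = {}
    for line in describe_output.strip().splitlines():
        fields = line.split()
        # Skip the header row and rows without committed numeric offsets.
        if len(fields) < 6 or not all(f.isdigit() for f in fields[2:5]):
            continue
        partition = int(fields[2])
        current_offset, log_end_offset = int(fields[3]), int(fields[4])
        lags[partition] = log_end_offset - current_offset
    return lags


# Two rows copied from the output above; a real run would pass the full text.
SAMPLE = """
GROUP TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-ID
nginx_logs nginx_logs 4 86620695 86629105 8410 logstash01-4-031428c4-9904-4ee4-ba59-94a9bd0069da /10.18.6.11 logstash01-4
nginx_logs nginx_logs 0 135465030 135496007 30977 logstash01-0-386b12ac-4bfa-46ed-903d-c2e2b02f4ef0 /10.18.6.11 logstash01-0
"""

lags = parse_lag(SAMPLE)
print(lags)                 # per-partition lag
print(sum(lags.values()))   # total lag across the sampled partitions
```

A lag that stays flat or shrinks between runs means the consumers are keeping up; a lag that keeps growing means they are not.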

    #----------- logstash01 config -----------#
    input {
      kafka {
        bootstrap_servers => "kafka01.koevn.com:29092,kafka02.logs.koevn.com:29092,kafka03.logs.koevn.com:29092"
        client_id => "logstash01"   # client_id must be unique per instance
        topics => ["nginx_logs"]
        group_id => "nginx_logs"
        auto_offset_reset => "latest"
        partition_assignment_strategy => "org.apache.kafka.clients.consumer.RoundRobinAssignor"

        # ----- omitted -----

        consumer_threads => 4       # 4 consumer threads
        decorate_events => true
        codec => json
      }
    }

    #----------- logstash02 config -----------#
    input {
      kafka {
        bootstrap_servers => "kafka01.koevn.com:29092,kafka02.logs.koevn.com:29092,kafka03.logs.koevn.com:29092"
        client_id => "logstash02"   # client_id must be unique per instance
        topics => ["nginx_logs"]
        group_id => "nginx_logs"
        auto_offset_reset => "latest"
        partition_assignment_strategy => "org.apache.kafka.clients.consumer.RoundRobinAssignor"

        # ----- omitted -----

        consumer_threads => 4       # 4 consumer threads
        decorate_events => true
        codec => json
      }
    }

⚠️ Note: both Logstash instances use 4 consumer threads because the `nginx_logs` topic was created with 8 partitions, so 2 × 4 threads gives exactly one consumer thread per partition. If one Logstash instance were set to 8 threads, the group would have more threads than partitions, consumption would be unevenly distributed, and the queue would back up again.
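To see why the thread count should match the partition count, here is a simplified model of round-robin assignment. It is a sketch of the idea only, not the Kafka client's `RoundRobinAssignor` code: it assumes a single topic, sorts the consumers by name, and deals the partitions out in circular order. The consumer names mimic the `client_id-thread` pattern seen in the CLI output.

```python
from itertools import cycle


def round_robin(partitions, consumers):
    """Deal sorted partitions out to sorted consumers in circular order."""
    assignment = {c: [] for c in sorted(consumers)}
    members = cycle(sorted(consumers))
    for p in sorted(partitions):
        assignment[next(members)].append(p)
    return assignment


partitions = range(8)  # the topic has 8 partitions

# Two Logstash instances with 4 threads each: 8 consumers for 8 partitions.
two_by_four = [f"logstash0{i}-{t}" for i in (1, 2) for t in range(4)]
balanced = round_robin(partitions, two_by_four)

# Two instances with 8 threads each: 16 consumers for 8 partitions.
two_by_eight = [f"logstash0{i}-{t}" for i in (1, 2) for t in range(8)]
oversized = round_robin(partitions, two_by_eight)
idle = [c for c, ps in oversized.items() if not ps]

print(balanced)   # every consumer thread owns exactly one partition
print(idle)       # threads that received no partition at all
```

In this model the 2 × 4 layout gives every thread one partition, while the 2 × 8 layout leaves eight threads with nothing to read, and all the work lands on whichever instance sorts first.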

After the change, Logstash reloaded its configuration automatically. Checking the topic's offsets again:

    /usr/local/kafka/bin/kafka-consumer-groups.sh \
      --bootstrap-server kafka01.koevn.com:29092,kafka02.koevn.com:29092,kafka03.koevn.com:29092 \
      --describe \
      --group nginx_logs \
      --command-config /usr/local/kafka/config/kraft/client.properties

    GROUP       TOPIC       PARTITION  CURRENT-OFFSET  LOG-END-OFFSET  LAG    CONSUMER-ID                                        HOST         CLIENT-ID
    nginx_logs  nginx_logs  0          135931043       135939089       8046   logstash01-0-031428c4-9904-4ee4-ba59-94a9bd0069da  /10.18.6.11  logstash01-0
    nginx_logs  nginx_logs  5          86839518        86840775        1257   logstash02-1-386b12ac-4bfa-46ed-903d-c2e2b02f4ef0  /10.18.6.12  logstash02-1
    nginx_logs  nginx_logs  6          86830494        86832283        1789   logstash02-2-fe357272-3e80-4cd7-961b-6988ecf8a448  /10.18.6.12  logstash02-2
    nginx_logs  nginx_logs  7          86850187        86851623        1436   logstash02-3-49358d2f-5727-4b6d-9332-369a8c2615bf  /10.18.6.12  logstash02-3
    nginx_logs  nginx_logs  2          86836227        86837963        1736   logstash01-2-b15d1017-c25c-4d9a-bfb0-ebb99d30cf63  /10.18.6.11  logstash01-2
    nginx_logs  nginx_logs  1          86825020        86826211        1191   logstash01-1-73528037-939d-4458-9bed-6ab1d548ddda  /10.18.6.11  logstash01-1
    nginx_logs  nginx_logs  3          86836039        86836724        685    logstash01-3-af6ecee3-b5bf-448c-b3ba-435b559ea59a  /10.18.6.11  logstash01-3
    nginx_logs  nginx_logs  4          86837450        86838537        1087   logstash02-0-26f87af8-6664-4102-8f7e-21d95ec02669  /10.18.6.12  logstash02-0

    /usr/local/kafka/bin/kafka-consumer-groups.sh \
      --bootstrap-server kafka01.koevn.com:29092,kafka02.koevn.com:29092,kafka03.koevn.com:29092 \
      --describe \
      --group nginx_logs \
      --command-config /usr/local/kafka/config/kraft/client.properties

    GROUP       TOPIC       PARTITION  CURRENT-OFFSET  LOG-END-OFFSET  LAG    CONSUMER-ID                                        HOST         CLIENT-ID
    nginx_logs  nginx_logs  0          135965644       135971223       5579   logstash01-0-031428c4-9904-4ee4-ba59-94a9bd0069da  /10.18.6.11  logstash01-0
    nginx_logs  nginx_logs  5          86854489        86856613        2124   logstash02-1-386b12ac-4bfa-46ed-903d-c2e2b02f4ef0  /10.18.6.12  logstash02-1
    nginx_logs  nginx_logs  6          86846223        86847715        1492   logstash02-2-fe357272-3e80-4cd7-961b-6988ecf8a448  /10.18.6.12  logstash02-2
    nginx_logs  nginx_logs  7          86865167        86866847        1680   logstash02-3-49358d2f-5727-4b6d-9332-369a8c2615bf  /10.18.6.12  logstash02-3
    nginx_logs  nginx_logs  2          86850292        86853336        3044   logstash01-2-b15d1017-c25c-4d9a-bfb0-ebb99d30cf63  /10.18.6.11  logstash01-2
    nginx_logs  nginx_logs  1          86838836        86841486        2650   logstash01-1-73528037-939d-4458-9bed-6ab1d548ddda  /10.18.6.11  logstash01-1
    nginx_logs  nginx_logs  3          86849522        86852188        2666   logstash01-3-af6ecee3-b5bf-448c-b3ba-435b559ea59a  /10.18.6.11  logstash01-3
    nginx_logs  nginx_logs  4          86852016        86853950        1934   logstash02-0-26f87af8-6664-4102-8f7e-21d95ec02669  /10.18.6.12  logstash02-0

    /usr/local/kafka/bin/kafka-consumer-groups.sh \
      --bootstrap-server kafka01.koevn.com:29092,kafka02.koevn.com:29092,kafka03.koevn.com:29092 \
      --describe \
      --group nginx_logs \
      --command-config /usr/local/kafka/config/kraft/client.properties

    GROUP       TOPIC       PARTITION  CURRENT-OFFSET  LOG-END-OFFSET  LAG    CONSUMER-ID                                        HOST         CLIENT-ID
    nginx_logs  nginx_logs  0          136003780       136004100       320    logstash01-0-031428c4-9904-4ee4-ba59-94a9bd0069da  /10.18.6.11  logstash01-0
    nginx_logs  nginx_logs  5          86875334        86875709        375    logstash02-1-386b12ac-4bfa-46ed-903d-c2e2b02f4ef0  /10.18.6.12  logstash02-1
    nginx_logs  nginx_logs  6          86866641        86866852        211    logstash02-2-fe357272-3e80-4cd7-961b-6988ecf8a448  /10.18.6.12  logstash02-2
    nginx_logs  nginx_logs  7          86885714        86886109        395    logstash02-3-49358d2f-5727-4b6d-9332-369a8c2615bf  /10.18.6.12  logstash02-3
    nginx_logs  nginx_logs  2          86872456        86872674        218    logstash01-2-b15d1017-c25c-4d9a-bfb0-ebb99d30cf63  /10.18.6.11  logstash01-2
    nginx_logs  nginx_logs  1          86860351        86860559        208    logstash01-1-73528037-939d-4458-9bed-6ab1d548ddda  /10.18.6.11  logstash01-1
    nginx_logs  nginx_logs  3          86871263        86871474        211    logstash01-3-af6ecee3-b5bf-448c-b3ba-435b559ea59a  /10.18.6.11  logstash01-3
    nginx_logs  nginx_logs  4          86873024        86873206        182    logstash02-0-26f87af8-6664-4102-8f7e-21d95ec02669  /10.18.6.12  logstash02-0

/usr/local/kafka/bin/kafka-consumer-groups.sh \--bootstrap-server kafka01.koevn.com:29092,kafka02.koevn.com:29092,kafka03.koevn.com:29092 \--describe \--group nginx_logs \--command-config /usr/local/kafka/config/kraft/client.properties

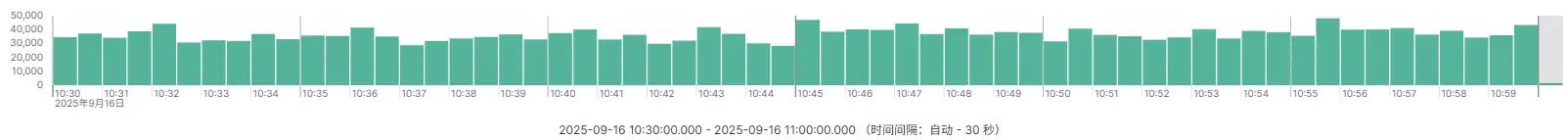

GROUP TOPIC PARTITION CURRENT-OFFSET LOG-END-OFFSET LAG CONSUMER-ID HOST CLIENT-IDnginx_logs nginx_logs 0 158980077 158980532 455 logstash01-0-031428c4-9904-4ee4-ba59-94a9bd0069da /10.18.6.11 logstash01-0nginx_logs nginx_logs 5 102157170 102157170 0 logstash02-1-386b12ac-4bfa-46ed-903d-c2e2b02f4ef0 /10.18.6.12 logstash02-1nginx_logs nginx_logs 6 102155301 102155301 0 logstash02-2-fe357272-3e80-4cd7-961b-6988ecf8a448 /10.18.6.12 logstash02-2nginx_logs nginx_logs 7 102176169 102176169 0 logstash02-3-49358d2f-5727-4b6d-9332-369a8c2615bf /10.18.6.12 logstash02-3nginx_logs nginx_logs 2 102157617 102157617 0 logstash01-2-b15d1017-c25c-4d9a-bfb0-ebb99d30cf63 /10.18.6.11 logstash01-2nginx_logs nginx_logs 1 102146858 102146858 0 logstash01-1-73528037-939d-4458-9bed-6ab1d548ddda /10.18.6.11 logstash01-1nginx_logs nginx_logs 3 102161234 102161234 0 logstash01-3-af6ecee3-b5bf-448c-b3ba-435b559ea59a /10.18.6.11 logstash01-3nginx_logs nginx_logs 4 102165309 102165507 198 logstash02-0-26f87af8-6664-4102-8f7e-21d95ec02669 /10.18.6.12 logstash02-0增加logstash节点后,kafka消息队列积压问题慢慢解决了,最后查看日志流状态
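Summing the LAG column of each snapshot makes the trend easier to read than eight separate numbers. The sketch below hard-codes the LAG values from the five outputs in this post, in the order they were captured; the variable names are illustrative.

```python
# LAG column of each `--describe` run, in capture order.
snapshots = [
    [8410, 30977, 8643, 7559, 12370, 9538, 10041, 7626],  # one Logstash, 8 threads
    [8046, 1257, 1789, 1436, 1736, 1191, 685, 1087],      # two Logstash, 4 threads each
    [5579, 2124, 1492, 1680, 3044, 2650, 2666, 1934],
    [320, 375, 211, 395, 218, 208, 211, 182],
    [455, 0, 0, 0, 0, 0, 0, 198],
]

totals = [sum(lags) for lags in snapshots]
worst = [max(lags) for lags in snapshots]

for run, (total, peak) in enumerate(zip(totals, worst), start=1):
    print(f"run {run}: total lag {total}, worst partition {peak}")
```

The total falls from 95164 before the change to 653 in the last run. It is not strictly monotonic (the third run is slightly higher than the second), which is normal while producers keep writing, so the overall direction matters more than any single sample.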
