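A Kubernetes cluster I deployed a few years ago now needs to be upgraded step by step. Because I stopped keeping notes a long time ago, the deployment procedure has become unfamiliar, so here I work through the deployment again for a current release and write it down.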

I. Environment

(1) Cluster environment

| Hostname | IP | Role | Installed software |
|---|---|---|---|
| k8s-master-01 | 10.88.12.60 | Master | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, haproxy, containerd |
| k8s-master-02 | 10.88.12.61 | Master | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, containerd |
| k8s-master-03 | 10.88.12.62 | Master | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, containerd |
| k8s-worker-01 | 10.88.12.63 | Worker | kubelet, containerd |
| k8s-worker-02 | 10.88.12.64 | Worker | kubelet, containerd |
| k8s-worker-03 | 10.88.12.65 | Worker | kubelet, containerd |
| (no host) | 10.88.12.100 | VIP | Normally a floating address bound by keepalived; to keep things simple here, it is configured directly on an extra network interface |

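You may have noticed that kube-proxy is missing from the component list above. That is because this cluster uses Cilium in place of kube-proxy, although the deployment steps still cover kube-proxy as an optional extra. Also make sure the runc binary is installed on every node.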
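Since every node is expected to carry runc next to containerd, a quick presence check before continuing may save a debugging round later. The following is only a minimal sketch: the helper name `missing_binaries` is hypothetical, and the list of binaries passed to it is an assumption to adapt to your own layout.

```bash
#!/bin/sh
# Print every name from the argument list that is not found on PATH.
# Returns 0 when nothing is missing, 1 otherwise.
missing_binaries() {
    rc=0
    for bin in "$@"; do
        if ! command -v "$bin" >/dev/null 2>&1; then
            echo "$bin"
            rc=1
        fi
    done
    return $rc
}

# Example: run on each node; prints nothing when everything is installed.
missing_binaries containerd runc || echo "install the binaries listed above first" >&2
```

The check only looks at PATH, so binaries placed elsewhere (for example under /opt/kubernetes/bin) would need that directory added to PATH first.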

(2) Network

| Type | CIDR | Description |
|---|---|---|
| Host | 10.88.12.0/24 | Physical or virtual machine network |
| Cluster | 10.90.0.0/16 | Cluster Service network |
| Pod | 10.100.0.0/16 | Cluster Pod network |
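The three networks must not overlap, otherwise Service or Pod addresses can shadow real hosts. As a rough sketch (the helper names `ip_to_int`, `cidr_range` and `cidr_overlap` are made up for this example, and only IPv4 is handled), the ranges from the table can be compared with plain shell arithmetic:

```bash
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "first last" (as integers) for a CIDR such as 10.90.0.0/16.
cidr_range() {
    base=$(ip_to_int "${1%/*}")
    bits=${1#*/}
    size=$(( 1 << (32 - $bits) ))
    first=$(( $base / $size * $size ))
    echo "$first $(( $first + $size - 1 ))"
}

# Return 0 (true) when the two CIDRs share at least one address.
cidr_overlap() {
    set -- $(cidr_range "$1") $(cidr_range "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Check the three networks from the table pairwise.
for pair in "10.88.12.0/24 10.90.0.0/16" "10.88.12.0/24 10.100.0.0/16" "10.90.0.0/16 10.100.0.0/16"; do
    set -- $pair
    if cidr_overlap "$1" "$2"; then echo "overlap: $1 $2"; else echo "ok: $1 $2"; fi
done
```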

(3) Software versions

| Software | Version |
|---|---|
| cfssl | 1.6.5 |
| containerd | v2.1.0 |
| crictl | 0.1.0 |
| runc | 1.3.0 |
| helm | v3.18.0 |
| os_kernel | 6.1.0-29 |

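Other baseline system configuration, such as installing dependencies, tuning kernel parameters, raising file descriptor limits, loading kernel modules (for example ipvs), synchronizing time across the hosts, disabling SELinux and disabling swap, can be set up to suit your own needs and is not covered here.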

II. Install the basic Kubernetes master components

2.1.0 Deploy containerd

Run these steps on every node in the cluster.

    # Extract into /usr/local/ (the archive's bin/ directory lands in /usr/local/bin/)
    koevn@k8s-master-01:~$ sudo tar -xvf containerd-2.1.0-linux-amd64.tar.gz -C /usr/local/

    # Create the systemd unit file
    koevn@k8s-master-01:~$ sudo tee /etc/systemd/system/containerd.service > /dev/null <<EOF
    [Unit]
    Description=containerd container runtime
    Documentation=https://containerd.io
    After=network.target

    [Service]
    ExecStart=/usr/local/bin/containerd --config /etc/containerd/config.toml
    Delegate=yes
    KillMode=process
    Restart=always
    LimitNOFILE=1048576
    LimitNPROC=infinity
    LimitCORE=infinity

    [Install]
    WantedBy=multi-user.target
    EOF

    # Create the containerd configuration files
    koevn@k8s-master-01:~$ sudo mkdir -pv /etc/containerd/certs.d/harbor.koevn.com
    koevn@k8s-master-01:~$ sudo containerd config default | sudo tee /etc/containerd/config.toml
    koevn@k8s-master-01:~$ sudo touch /etc/containerd/certs.d/harbor.koevn.com/hosts.toml

    # Edit /etc/containerd/config.toml
    [plugins]
      [plugins.'io.containerd.cri.v1.images']
        snapshotter = 'overlayfs'
        disable_snapshot_annotations = true
        discard_unpacked_layers = false
        max_concurrent_downloads = 3
        image_pull_progress_timeout = '5m0s'
        image_pull_with_sync_fs = false
        stats_collect_period = 10

        [plugins.'io.containerd.cri.v1.images'.pinned_images]
          sandbox = 'harbor.koevn.com/k8s/pause:3.10'  # Point this at the registry you pull from; here it is a private registry

        [plugins.'io.containerd.cri.v1.images'.registry]
          config_path = "/etc/containerd/certs.d"  # Registry settings are read from this directory

        [plugins.'io.containerd.cri.v1.images'.image_decryption]
          key_model = 'node'

    # Contents of /etc/containerd/certs.d/harbor.koevn.com/hosts.toml
    server = "https://harbor.koevn.com"

    [host."https://harbor.koevn.com"]
      capabilities = ["pull", "resolve", "push"]
      skip_verify = true
      username = "admin"
      password = "K123456"

    # Reload the systemd configuration
    koevn@k8s-master-01:~$ sudo systemctl daemon-reload

    # Start containerd
    koevn@k8s-master-01:~$ sudo systemctl start containerd.service

    # Check the containerd service status
    koevn@k8s-master-01:~$ sudo systemctl status containerd.service

    # Enable containerd at boot
    koevn@k8s-master-01:~$ sudo systemctl enable --now containerd.service

2.1.1 Configure crictl

    # Extract
    koevn@k8s-master-01:~$ sudo tar xf crictl-v*-linux-amd64.tar.gz -C /usr/sbin/

    # Generate the configuration file
    koevn@k8s-master-01:~$ sudo tee /etc/crictl.yaml > /dev/null <<EOF
    runtime-endpoint: unix:///run/containerd/containerd.sock
    image-endpoint: unix:///run/containerd/containerd.sock
    EOF

    # Verify
    koevn@k8s-master-01:~$ sudo crictl info

2.2.0 Deploy etcd

This service is deployed on the three master nodes.
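etcd needs a majority of its members to be available before it accepts writes, which is why an odd member count such as three is the usual choice. The arithmetic is sketched below; the function names are invented for this illustration.

```bash
#!/bin/sh
# Majority needed for an n-member etcd cluster to accept writes.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }

# Number of members that can fail while the cluster still has quorum.
etcd_fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 3 5; do
    echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

With the three masters used here the quorum is two, so the cluster keeps working while one master is down.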

2.2.1 Prepare the etcd environment

    # Move the downloaded cfssl tools into /usr/local/bin
    koevn@k8s-master-01:/tmp$ sudo mv cfssl_1.6.5_linux_amd64 /usr/local/bin/cfssl
    koevn@k8s-master-01:/tmp$ sudo mv cfssljson_1.6.5_linux_amd64 /usr/local/bin/cfssljson
    koevn@k8s-master-01:/tmp$ sudo mv cfssl-certinfo_1.6.5_linux_amd64 /usr/local/bin/cfssl-certinfo
    koevn@k8s-master-01:/tmp$ sudo chmod +x /usr/local/bin/cfss*

    # Create the etcd service directories
    koevn@k8s-master-01:~$ sudo mkdir -pv /opt/etcd/{bin,cert,config}

    # Unpack etcd and install the three binaries
    koevn@k8s-master-01:/tmp$ sudo tar -xvf etcd*.tar.gz && sudo mv etcd-*/etcd{,ctl,utl} /opt/etcd/bin/

    # Add etcd to the system PATH (single quotes keep $PATH unexpanded in the file)
    koevn@k8s-master-01:/tmp$ echo 'export PATH=/opt/etcd/bin:$PATH' | sudo tee /etc/profile.d/etcd.sh > /dev/null && source /etc/profile

    # Create the certificate working directory
    koevn@k8s-master-01:/tmp$ sudo mkdir -pv /opt/cert/etcd

2.2.2 Generate the etcd certificates

Create the CA configuration file

    koevn@k8s-master-01:/tmp$ cd /opt/cert
    koevn@k8s-master-01:/opt/cert$ sudo tee ca-config.json > /dev/null << EOF
    {
      "signing": {
        "default": {
          "expiry": "876000h"
        },
        "profiles": {
          "kubernetes": {
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ],
            "expiry": "876000h"
          }
        }
      }
    }
    EOF

Create the CA certificate signing request

    koevn@k8s-master-01:/opt/cert$ sudo tee etcd-ca-csr.json > /dev/null << EOF
    {
      "CN": "etcd",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        {
          "C": "CN",
          "ST": "Beijing",
          "L": "Beijing",
          "O": "etcd",
          "OU": "Etcd Security"
        }
      ],
      "ca": { "expiry": "876000h" }
    }
    EOF

Generate the etcd CA certificate. The output prefix is /opt/cert/etcd/etcd-ca so that the files match the etcd-ca.pem and etcd-ca-key.pem paths used in the next command.

    koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert -initca etcd-ca-csr.json | sudo cfssljson -bare /opt/cert/etcd/etcd-ca

Create the etcd certificate request

    koevn@k8s-master-01:/opt/cert$ sudo tee etcd-csr.json > /dev/null << EOF
    {
      "CN": "etcd",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        {
          "C": "CN",
          "ST": "Beijing",
          "L": "Beijing",
          "O": "etcd",
          "OU": "Etcd Security"
        }
      ]
    }
    EOF

Generate the etcd certificate

    koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
      -ca=/opt/cert/etcd/etcd-ca.pem \
      -ca-key=/opt/cert/etcd/etcd-ca-key.pem \
      -config=ca-config.json \
      -hostname=127.0.0.1,k8s-master-01,k8s-master-02,k8s-master-03,10.88.12.60,10.88.12.61,10.88.12.62 \
      -profile=kubernetes \
      etcd-csr.json | sudo cfssljson -bare /opt/cert/etcd/etcd

Copy the etcd*.pem certificates generated under /opt/cert/etcd into /opt/etcd/cert/.
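A node name or IP that is missing from the `-hostname` list typically only shows up later as a TLS verification error between etcd members or from the apiserver. A small sketch for checking the list before generating the certificate follows; `missing_sans` is a hypothetical helper, not part of cfssl.

```bash
#!/bin/sh
# Print each required name/IP that is absent from a comma-separated SAN list.
# Entries are compared whole, so 10.88.12.6 does not match 10.88.12.60.
missing_sans() {
    san_list=",$1,"
    shift
    for want in "$@"; do
        case "$san_list" in
            *",$want,"*) ;;
            *) echo "$want" ;;
        esac
    done
}

# The -hostname value used for the etcd certificate in this section.
ETCD_SANS="127.0.0.1,k8s-master-01,k8s-master-02,k8s-master-03,10.88.12.60,10.88.12.61,10.88.12.62"

# Prints nothing when all three master IPs are covered.
missing_sans "$ETCD_SANS" 10.88.12.60 10.88.12.61 10.88.12.62
```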

2.2.3 Create the etcd configuration file

    koevn@k8s-master-01:~$ sudo tee /opt/etcd/config/etcd.config.yml > /dev/null << EOF
    name: 'k8s-master-01'  # Change to each node's own name
    data-dir: /data/etcd/data
    wal-dir: /data/etcd/wal
    snapshot-count: 5000
    heartbeat-interval: 100
    election-timeout: 1000
    quota-backend-bytes: 0
    listen-peer-urls: 'https://10.88.12.60:2380'  # Change to the IP each node listens on
    listen-client-urls: 'https://10.88.12.60:2379,http://127.0.0.1:2379'
    max-snapshots: 3
    max-wals: 5
    cors:
    initial-advertise-peer-urls: 'https://10.88.12.60:2380'
    advertise-client-urls: 'https://10.88.12.60:2379'
    discovery:
    discovery-fallback: 'proxy'
    discovery-proxy:
    discovery-srv:
    initial-cluster: 'k8s-master-01=https://10.88.12.60:2380,k8s-master-02=https://10.88.12.61:2380,k8s-master-03=https://10.88.12.62:2380'
    initial-cluster-token: 'etcd-k8s-cluster'
    initial-cluster-state: 'new'
    strict-reconfig-check: false
    enable-v2: true
    enable-pprof: true
    proxy: 'off'
    proxy-failure-wait: 5000
    proxy-refresh-interval: 30000
    proxy-dial-timeout: 1000
    proxy-write-timeout: 5000
    proxy-read-timeout: 0
    client-transport-security:
      cert-file: '/opt/etcd/cert/etcd.pem'
      key-file: '/opt/etcd/cert/etcd-key.pem'
      client-cert-auth: true
      trusted-ca-file: '/opt/etcd/cert/etcd-ca.pem'
      auto-tls: true
    peer-transport-security:
      cert-file: '/opt/etcd/cert/etcd.pem'
      key-file: '/opt/etcd/cert/etcd-key.pem'
      peer-client-cert-auth: true
      trusted-ca-file: '/opt/etcd/cert/etcd-ca.pem'
      auto-tls: true
    debug: false
    log-package-levels:
    log-outputs: [default]
    force-new-cluster: false
    EOF

Check the etcd directory structure

    koevn@k8s-master-01:~$ sudo tree /opt/etcd/
    /opt/etcd/
    ├── bin
    │   ├── etcd
    │   ├── etcdctl
    │   └── etcdutl
    ├── cert
    │   ├── etcd-ca.pem
    │   ├── etcd-key.pem
    │   └── etcd.pem
    └── config
        └── etcd.config.yml

    4 directories, 7 files

Then package etcd and distribute it to the other master nodes

    koevn@k8s-master-01:~$ sudo tar -czvf /tmp/etcd.tar.gz /opt/etcd

2.2.4 Configure and start the service on each etcd node

    koevn@k8s-master-01:~$ sudo tee /etc/systemd/system/etcd.service > /dev/null << EOF
    [Unit]
    Description=Etcd Service
    Documentation=https://coreos.com/etcd/docs/latest/
    After=network.target

    [Service]
    Type=notify
    ExecStart=/opt/etcd/bin/etcd --config-file=/opt/etcd/config/etcd.config.yml
    Restart=on-failure
    RestartSec=10
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    Alias=etcd3.service
    EOF

Reload the systemd configuration

    sudo systemctl daemon-reload

Start the etcd service

    sudo systemctl start etcd.service

Check the etcd service status

    sudo systemctl status etcd.service

Enable etcd at boot

    sudo systemctl enable --now etcd.service
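The service has to be started on all three masters, and before that each copy of etcd.config.yml needs its own node name and IP, while `initial-cluster` stays identical everywhere. As a sketch of which lines differ per node (the helper `etcd_node_settings` is hypothetical and only prints the values, it does not edit any file):

```bash
#!/bin/sh
# Print the fields of etcd.config.yml that differ from node to node.
# Arguments: node name, node IP.
etcd_node_settings() {
    name=$1
    ip=$2
    cat <<EOF
name: '$name'
listen-peer-urls: 'https://$ip:2380'
listen-client-urls: 'https://$ip:2379,http://127.0.0.1:2379'
initial-advertise-peer-urls: 'https://$ip:2380'
advertise-client-urls: 'https://$ip:2379'
EOF
}

# Values to set on the second master.
etcd_node_settings k8s-master-02 10.88.12.61
```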

2.2.5 Check the etcd status

Run as root; `export` is a shell builtin and cannot be invoked through sudo.

    root@k8s-master-01:~# export ETCDCTL_API=3
    root@k8s-master-01:~# etcdctl --endpoints="10.88.12.60:2379,10.88.12.61:2379,10.88.12.62:2379" \
      --cacert=/opt/etcd/cert/etcd-ca.pem \
      --cert=/opt/etcd/cert/etcd.pem \
      --key=/opt/etcd/cert/etcd-key.pem \
      endpoint status \
      --write-out=table
    +------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    |     ENDPOINT     |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
    +------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | 10.88.12.60:2379 | c1f013862d84cb12 |  3.5.21 |   35 MB |     false |      false |       158 |    8434769 |            8434769 |        |
    | 10.88.12.61:2379 | cea2c8779e4914d0 |  3.5.21 |   36 MB |     false |      false |       158 |    8434769 |            8434769 |        |
    | 10.88.12.62:2379 | df3b2276b87896db |  3.5.21 |   33 MB |      true |      false |       158 |    8434769 |            8434769 |        |
    +------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

2.3.0 Generate the Kubernetes certificates

Create the directories that hold the Kubernetes certificates

    koevn@k8s-master-01:~$ sudo mkdir -pv /opt/cert/kubernetes/pki
    koevn@k8s-master-01:~$ sudo mkdir -pv /opt/kubernetes/cfg   # Directory for the cluster kubeconfig files
    koevn@k8s-master-01:~$ cd /opt/cert

2.3.1 Generate the Kubernetes CA certificate

    koevn@k8s-master-01:/opt/cert$ sudo tee ca-csr.json > /dev/null << EOF
    {
      "CN": "kubernetes",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        {
          "C": "CN",
          "ST": "Beijing",
          "L": "Beijing",
          "O": "Kubernetes",
          "OU": "Kubernetes-manual"
        }
      ],
      "ca": { "expiry": "876000h" }
    }
    EOF

Generate the CA certificate

    koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert -initca ca-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/ca

2.3.2 Generate the apiserver certificate

    koevn@k8s-master-01:/opt/cert$ sudo tee apiserver-csr.json > /dev/null << EOF
    {
      "CN": "kube-apiserver",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        {
          "C": "CN",
          "ST": "Beijing",
          "L": "Beijing",
          "O": "Kubernetes",
          "OU": "Kubernetes-manual"
        }
      ]
    }
    EOF

    koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
      -ca=/opt/cert/kubernetes/pki/ca.pem \
      -ca-key=/opt/cert/kubernetes/pki/ca-key.pem \
      -config=ca-config.json \
      -hostname=10.90.0.1,10.88.12.100,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,k8s.koevn.com,10.88.12.60,10.88.12.61,10.88.12.62,10.88.12.63,10.88.12.64,10.88.12.65,10.88.12.66,10.88.12.67,10.88.12.68 \
      -profile=kubernetes apiserver-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/apiserver

2.3.3 Generate the apiserver aggregation (front-proxy) certificates

    koevn@k8s-master-01:/opt/cert$ sudo tee front-proxy-ca-csr.json > /dev/null << EOF
    {
      "CN": "kubernetes",
      "key": { "algo": "rsa", "size": 2048 },
      "ca": { "expiry": "876000h" }
    }
    EOF

    koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
      -initca front-proxy-ca-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/front-proxy-ca

    koevn@k8s-master-01:/opt/cert$ sudo tee front-proxy-client-csr.json > /dev/null << EOF
    {
      "CN": "front-proxy-client",
      "key": { "algo": "rsa", "size": 2048 }
    }
    EOF

    koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
      -ca=/opt/cert/kubernetes/pki/front-proxy-ca.pem \
      -ca-key=/opt/cert/kubernetes/pki/front-proxy-ca-key.pem \
      -config=ca-config.json \
      -profile=kubernetes \
      front-proxy-client-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/front-proxy-client

2.3.4 Generate the controller-manager certificate

    koevn@k8s-master-01:/opt/cert$ sudo tee manager-csr.json > /dev/null << EOF
    {
      "CN": "system:kube-controller-manager",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        {
          "C": "CN",
          "ST": "Beijing",
          "L": "Beijing",
          "O": "system:kube-controller-manager",
          "OU": "Kubernetes-manual"
        }
      ]
    }
    EOF

The certificate is signed with the cluster CA (ca.pem), the same CA the apiserver trusts for client certificates and the one embedded in the kubeconfig below, not with the front-proxy CA.

    koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
      -ca=/opt/cert/kubernetes/pki/ca.pem \
      -ca-key=/opt/cert/kubernetes/pki/ca-key.pem \
      -config=ca-config.json \
      -profile=kubernetes \
      manager-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/controller-manager

Set the cluster entry

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/cert/kubernetes/pki/ca.pem \
      --embed-certs=true \
      --server=https://10.88.12.100:9443 \
      --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig

⚠️ Note on the cluster entry: --server must point to the highly available VIP (normally held by keepalived), and 9443 is the port HAProxy listens on.
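Each component kubeconfig in this part is built from the same four `kubectl config` steps (set-cluster, set-credentials, set-context, use-context), with only the names changing. The sketch below prints those commands for one component instead of running them, so the output can be reviewed first; the helper name `print_kubeconfig_cmds` and its argument order are assumptions made for this example.

```bash
#!/bin/sh
# Print (not run) the four kubectl config commands for one component.
# Arguments: kubeconfig base name, user name, certificate base name.
print_kubeconfig_cmds() {
    cfg=/opt/kubernetes/cfg/$1.kubeconfig
    pki=/opt/cert/kubernetes/pki
    echo "kubectl config set-cluster kubernetes --certificate-authority=$pki/ca.pem --embed-certs=true --server=https://10.88.12.100:9443 --kubeconfig=$cfg"
    echo "kubectl config set-credentials $2 --client-certificate=$pki/$3.pem --client-key=$pki/$3-key.pem --embed-certs=true --kubeconfig=$cfg"
    echo "kubectl config set-context $2@kubernetes --cluster=kubernetes --user=$2 --kubeconfig=$cfg"
    echo "kubectl config use-context $2@kubernetes --kubeconfig=$cfg"
}

# Example: the commands for the scheduler kubeconfig.
print_kubeconfig_cmds scheduler system:kube-scheduler scheduler
```

Piping the output to `sudo sh` would execute the commands, but reading them once before doing so is the point of printing them.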

Set the context entry

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-context system:kube-controller-manager@kubernetes \
      --cluster=kubernetes \
      --user=system:kube-controller-manager \
      --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig

Set the user entry

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-credentials system:kube-controller-manager \
      --client-certificate=/opt/cert/kubernetes/pki/controller-manager.pem \
      --client-key=/opt/cert/kubernetes/pki/controller-manager-key.pem \
      --embed-certs=true \
      --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig

Set the default context

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config use-context system:kube-controller-manager@kubernetes \
      --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig

2.3.5 Generate the kube-scheduler certificate

    koevn@k8s-master-01:/opt/cert$ sudo tee scheduler-csr.json > /dev/null << EOF
    {
      "CN": "system:kube-scheduler",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        {
          "C": "CN",
          "ST": "Beijing",
          "L": "Beijing",
          "O": "system:kube-scheduler",
          "OU": "Kubernetes-manual"
        }
      ]
    }
    EOF

    koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
      -ca=/opt/cert/kubernetes/pki/ca.pem \
      -ca-key=/opt/cert/kubernetes/pki/ca-key.pem \
      -config=ca-config.json \
      -profile=kubernetes \
      scheduler-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/scheduler

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/cert/kubernetes/pki/ca.pem \
      --embed-certs=true \
      --server=https://10.88.12.100:9443 \
      --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-credentials system:kube-scheduler \
      --client-certificate=/opt/cert/kubernetes/pki/scheduler.pem \
      --client-key=/opt/cert/kubernetes/pki/scheduler-key.pem \
      --embed-certs=true \
      --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-context system:kube-scheduler@kubernetes \
      --cluster=kubernetes \
      --user=system:kube-scheduler \
      --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config use-context system:kube-scheduler@kubernetes \
      --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

2.3.6 Generate the admin certificate

    koevn@k8s-master-01:/opt/cert$ sudo tee admin-csr.json > /dev/null << EOF
    {
      "CN": "admin",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        {
          "C": "CN",
          "ST": "Beijing",
          "L": "Beijing",
          "O": "system:masters",
          "OU": "Kubernetes-manual"
        }
      ]
    }
    EOF

    koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
      -ca=/opt/cert/kubernetes/pki/ca.pem \
      -ca-key=/opt/cert/kubernetes/pki/ca-key.pem \
      -config=ca-config.json \
      -profile=kubernetes \
      admin-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/admin

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/cert/kubernetes/pki/ca.pem \
      --embed-certs=true \
      --server=https://10.88.12.100:9443 \
      --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-credentials kubernetes-admin \
      --client-certificate=/opt/cert/kubernetes/pki/admin.pem \
      --client-key=/opt/cert/kubernetes/pki/admin-key.pem \
      --embed-certs=true \
      --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-context kubernetes-admin@kubernetes \
      --cluster=kubernetes \
      --user=kubernetes-admin \
      --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config use-context kubernetes-admin@kubernetes \
      --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

2.3.7 Generate the kube-proxy certificate (optional)

    koevn@k8s-master-01:/opt/cert$ sudo tee kube-proxy-csr.json > /dev/null << EOF
    {
      "CN": "system:kube-proxy",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        {
          "C": "CN",
          "ST": "Beijing",
          "L": "Beijing",
          "O": "system:kube-proxy",
          "OU": "Kubernetes-manual"
        }
      ]
    }
    EOF

The certificate is written to /opt/cert/kubernetes/pki/, which is where the set-credentials command below reads kube-proxy.pem and kube-proxy-key.pem from.

    koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
      -ca=/opt/cert/kubernetes/pki/ca.pem \
      -ca-key=/opt/cert/kubernetes/pki/ca-key.pem \
      -config=ca-config.json \
      -profile=kubernetes \
      kube-proxy-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/kube-proxy

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/cert/kubernetes/pki/ca.pem \
      --embed-certs=true \
      --server=https://10.88.12.100:9443 \
      --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-credentials kube-proxy \
      --client-certificate=/opt/cert/kubernetes/pki/kube-proxy.pem \
      --client-key=/opt/cert/kubernetes/pki/kube-proxy-key.pem \
      --embed-certs=true \
      --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-context kube-proxy@kubernetes \
      --cluster=kubernetes \
      --user=kube-proxy \
      --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig

    koevn@k8s-master-01:/opt/cert$ sudo kubectl config use-context kube-proxy@kubernetes \
      --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig

2.3.8 Create the ServiceAccount key

    koevn@k8s-master-01:/opt/cert$ sudo openssl genrsa -out /opt/cert/kubernetes/pki/sa.key 2048
    koevn@k8s-master-01:/opt/cert$ sudo openssl rsa -in /opt/cert/kubernetes/pki/sa.key \
      -pubout -out /opt/cert/kubernetes/pki/sa.pub

2.3.9 Check the number of Kubernetes component certificates

    koevn@k8s-master-01:~$ ls -l /opt/cert/kubernetes/pki
    -rw-r--r-- 1 root root 1025 May 20 17:11 admin.csr
    -rw------- 1 root root 1679 May 20 17:11 admin-key.pem
    -rw-r--r-- 1 root root 1444 May 20 17:11 admin.pem
    -rw-r--r-- 1 root root 1383 May 20 16:25 apiserver.csr
    -rw------- 1 root root 1675 May 20 16:25 apiserver-key.pem
    -rw-r--r-- 1 root root 1777 May 20 16:25 apiserver.pem
    -rw-r--r-- 1 root root 1070 May 20 11:58 ca.csr
    -rw------- 1 root root 1679 May 20 11:58 ca-key.pem
    -rw-r--r-- 1 root root 1363 May 20 11:58 ca.pem
    -rw-r--r-- 1 root root 1082 May 20 16:38 controller-manager.csr
    -rw------- 1 root root 1679 May 20 16:38 controller-manager-key.pem
    -rw-r--r-- 1 root root 1501 May 20 16:38 controller-manager.pem
    -rw-r--r-- 1 root root  940 May 20 16:26 front-proxy-ca.csr
    -rw------- 1 root root 1675 May 20 16:26 front-proxy-ca-key.pem
    -rw-r--r-- 1 root root 1094 May 20 16:26 front-proxy-ca.pem
    -rw-r--r-- 1 root root  903 May 20 16:30 front-proxy-client.csr
    -rw------- 1 root root 1679 May 20 16:30 front-proxy-client-key.pem
    -rw-r--r-- 1 root root 1188 May 20 16:30 front-proxy-client.pem
    -rw-r--r-- 1 root root 1045 May 20 18:30 kube-proxy.csr
    -rw------- 1 root root 1679 May 20 18:30 kube-proxy-key.pem
    -rw-r--r-- 1 root root 1464 May 20 18:30 kube-proxy.pem
    -rw------- 1 root root 1704 May 21 09:21 sa.key
    -rw-r--r-- 1 root root  451 May 21 09:21 sa.pub
    -rw-r--r-- 1 root root 1058 May 20 17:00 scheduler.csr
    -rw------- 1 root root 1679 May 20 17:00 scheduler-key.pem
    -rw-r--r-- 1 root root 1476 May 20 17:00 scheduler.pem

    koevn@k8s-master-01:~$ ls /opt/cert/kubernetes/pki/ | wc -l   # Total count
    26

2.3.10 Install the Kubernetes components and distribute the certificates (all master nodes)

koevn@k8s-master-01:~$ sudo mkdir -pv /opt/kubernetes/{bin,etc,cert,cfg}koevn@k8s-master-01:~$ sudo tree /opt/kubernetes # 查看文件层级/opt/kubernetes├── bin│ ├── kube-apiserver│ ├── kube-controller-manager│ ├── kubectl│ ├── kubelet│ ├── kube-proxy│ └── kube-scheduler├── cert│ ├── admin-key.pem│ ├── admin.pem│ ├── apiserver-key.pem│ ├── apiserver.pem│ ├── ca-key.pem│ ├── ca.pem│ ├── controller-manager-key.pem│ ├── controller-manager.pem│ ├── front-proxy-ca.pem│ ├── front-proxy-client-key.pem│ ├── front-proxy-client.pem│ ├── kube-proxy-key.pem│ ├── kube-proxy.pem│ ├── sa.key│ ├── sa.pub│ ├── scheduler-key.pem│ └── scheduler.pem├── cfg│ ├── admin.kubeconfig│ ├── bootstrap-kubelet.kubeconfig│ ├── bootstrap.secret.yaml│ ├── controller-manager.kubeconfig│ ├── kubelet.kubeconfig│ ├── kube-proxy.kubeconfig│ └── scheduler.kubeconfig├── etc│ ├── kubelet-conf.yml│ └── kube-proxy.yaml└── manifests

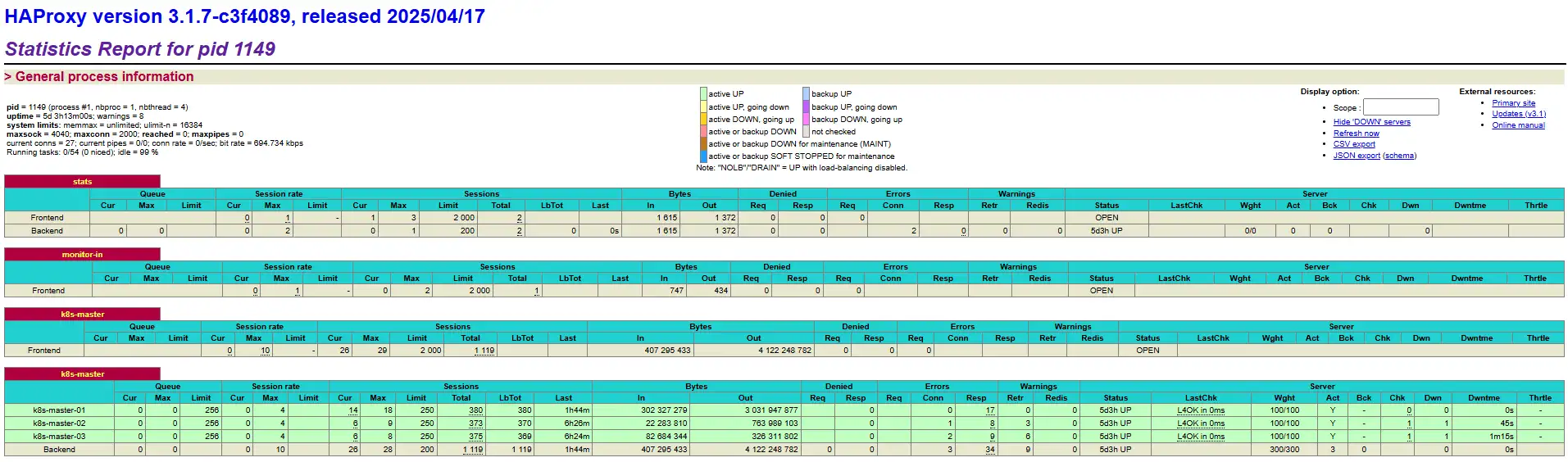

6 directories, 32 files2.4.0 部署Haproxy负载均衡

⚠️ 注意 这一步配置apiserver高可用本是Haproxy加Keepalived组合,这步为了省略,我直接在Master-01节点加一张网卡配个假VIP地址,生产环境则最好用Haproxy加Keepalived组合,提高冗余。

2.4.1 Install HAProxy

    koevn@k8s-master-01:~$ sudo apt install haproxy

2.4.2 Edit the HAProxy configuration file

    koevn@k8s-master-01:~$ sudo tee /etc/haproxy/haproxy.cfg > /dev/null << EOF
    global
      maxconn 2000
      ulimit-n 16384
      log 127.0.0.1 local0 err
      stats timeout 30s

    defaults
      log global
      mode http
      option httplog
      timeout connect 5000
      timeout client 50000
      timeout server 50000
      timeout http-request 15s
      timeout http-keep-alive 15s

    listen stats
      mode http
      bind 0.0.0.0:9999
      stats enable
      log global
      stats uri /haproxy-status
      stats auth admin:K123456

    frontend monitor-in
      bind *:33305
      mode http
      option httplog
      monitor-uri /monitor

    frontend k8s-master
      bind 0.0.0.0:9443
      bind 127.0.0.1:9443
      mode tcp
      option tcplog
      tcp-request inspect-delay 5s
      default_backend k8s-master

    backend k8s-master
      mode tcp
      option tcplog
      option tcp-check
      balance roundrobin
      default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
      server k8s-master-01 10.88.12.60:6443 check
      server k8s-master-02 10.88.12.61:6443 check
      server k8s-master-03 10.88.12.62:6443 check
    EOF

Start the service

    koevn@k8s-master-01:~$ sudo systemctl daemon-reload
    koevn@k8s-master-01:~$ sudo systemctl enable --now haproxy.service

Open http://10.88.12.100:9999/haproxy-status in a browser to check the HAProxy status.
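When masters are added or replaced, the `server` lines in the `backend k8s-master` section are the only part of haproxy.cfg that changes. A minimal sketch for generating them from a list of `name=ip` pairs is shown below; the helper `haproxy_backend_servers` is hypothetical, and port 6443 is taken from the apiserver setup in this post.

```bash
#!/bin/sh
# Print one haproxy "server" line per "name=ip" argument,
# pointing at the apiserver secure port 6443.
haproxy_backend_servers() {
    for entry in "$@"; do
        echo "  server ${entry%%=*} ${entry#*=}:6443 check"
    done
}

haproxy_backend_servers \
    k8s-master-01=10.88.12.60 \
    k8s-master-02=10.88.12.61 \
    k8s-master-03=10.88.12.62
```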

2.5.0 Configure the apiserver service (all master nodes)

Create the apiserver systemd unit. Comments are kept on their own lines because text after a trailing backslash would break the line continuation in ExecStart.

    koevn@k8s-master-01:~$ sudo tee /etc/systemd/system/kube-apiserver.service > /dev/null << EOF
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    # --advertise-address: change to each master node's own IP
    # --service-cluster-ip-range: the cluster Service CIDR, adjust to your own plan
    ExecStart=/opt/kubernetes/bin/kube-apiserver \
      --v=2 \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=6443 \
      --advertise-address=10.88.12.60 \
      --service-cluster-ip-range=10.90.0.0/16 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://10.88.12.60:2379,https://10.88.12.61:2379,https://10.88.12.62:2379 \
      --etcd-cafile=/opt/etcd/cert/etcd-ca.pem \
      --etcd-certfile=/opt/etcd/cert/etcd.pem \
      --etcd-keyfile=/opt/etcd/cert/etcd-key.pem \
      --client-ca-file=/opt/kubernetes/cert/ca.pem \
      --tls-cert-file=/opt/kubernetes/cert/apiserver.pem \
      --tls-private-key-file=/opt/kubernetes/cert/apiserver-key.pem \
      --kubelet-client-certificate=/opt/kubernetes/cert/apiserver.pem \
      --kubelet-client-key=/opt/kubernetes/cert/apiserver-key.pem \
      --service-account-key-file=/opt/kubernetes/cert/sa.pub \
      --service-account-signing-key-file=/opt/kubernetes/cert/sa.key \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --requestheader-client-ca-file=/opt/kubernetes/cert/front-proxy-ca.pem \
      --proxy-client-cert-file=/opt/kubernetes/cert/front-proxy-client.pem \
      --proxy-client-key-file=/opt/kubernetes/cert/front-proxy-client-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User \
      --enable-aggregator-routing=true
    Restart=on-failure
    RestartSec=10s
    LimitNOFILE=65535

    [Install]
    WantedBy=multi-user.target
    EOF

Reload the systemd configuration

    koevn@k8s-master-01:~$ sudo systemctl daemon-reload

Start kube-apiserver

    koevn@k8s-master-01:~$ sudo systemctl enable --now kube-apiserver.service

Check the kube-apiserver service status

    koevn@k8s-master-01:~$ sudo systemctl status kube-apiserver.service

2.6.0 Configure the kube-controller-manager service (all master nodes)

Create the kube-controller-manager systemd unit

    koevn@k8s-master-01:~$ sudo tee /etc/systemd/system/kube-controller-manager.service > /dev/null << EOF
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    # --service-cluster-ip-range: the cluster Service CIDR, adjust to your own plan
    # --cluster-cidr: the cluster Pod CIDR, adjust to your own plan
    # --node-cidr-mask-size-ipv4: each node is allocated a /24 out of the Pod CIDR
    ExecStart=/opt/kubernetes/bin/kube-controller-manager \
      --v=2 \
      --root-ca-file=/opt/kubernetes/cert/ca.pem \
      --cluster-signing-cert-file=/opt/kubernetes/cert/ca.pem \
      --cluster-signing-key-file=/opt/kubernetes/cert/ca-key.pem \
      --service-account-private-key-file=/opt/kubernetes/cert/sa.key \
      --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig \
      --leader-elect=true \
      --use-service-account-credentials=true \
      --node-monitor-grace-period=40s \
      --node-monitor-period=5s \
      --controllers=*,bootstrapsigner,tokencleaner \
      --allocate-node-cidrs=true \
      --service-cluster-ip-range=10.90.0.0/16 \
      --cluster-cidr=10.100.0.0/16 \
      --node-cidr-mask-size-ipv4=24 \
      --requestheader-client-ca-file=/opt/kubernetes/cert/front-proxy-ca.pem

    Restart=always
    RestartSec=10s

    [Install]
    WantedBy=multi-user.target
    EOF

Reload the systemd configuration

    koevn@k8s-master-01:~$ sudo systemctl daemon-reload

Start kube-controller-manager

    koevn@k8s-master-01:~$ sudo systemctl enable --now kube-controller-manager.service

Check the kube-controller-manager service status

    koevn@k8s-master-01:~$ sudo systemctl status kube-controller-manager.service

2.7.0 Configure the kube-scheduler service (all master nodes)

Create the kube-scheduler systemd unit

    koevn@k8s-master-01:~$ sudo tee /usr/lib/systemd/system/kube-scheduler.service > /dev/null << EOF
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    ExecStart=/opt/kubernetes/bin/kube-scheduler \
      --v=2 \
      --bind-address=0.0.0.0 \
      --leader-elect=true \
      --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

    Restart=always
    RestartSec=10s

    [Install]
    WantedBy=multi-user.target
    EOF

Reload the systemd configuration

    koevn@k8s-master-01:~$ sudo systemctl daemon-reload

Start kube-scheduler

    koevn@k8s-master-01:~$ sudo systemctl enable --now kube-scheduler.service

Check the kube-scheduler service status

    koevn@k8s-master-01:~$ sudo systemctl status kube-scheduler.service

2.8.0 TLS Bootstrapping configuration

Create the cluster entry

    koevn@k8s-master-01:~$ sudo kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/cert/kubernetes/pki/ca.pem \
      --embed-certs=true \
      --server=https://10.88.12.100:9443 \
      --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig

Create the token

    koevn@k8s-master-01:~$ echo "$(head -c 6 /dev/urandom | md5sum | head -c 6)"."$(head -c 16 /dev/urandom | md5sum | head -c 16)"
    79b841.0677456fb3b47289

Set the credentials

    koevn@k8s-master-01:~$ sudo kubectl config set-credentials tls-bootstrap-token-user \
      --token=79b841.0677456fb3b47289 \
      --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig

Set the context

    koevn@k8s-master-01:~$ sudo kubectl config set-context tls-bootstrap-token-user@kubernetes \
      --cluster=kubernetes \
      --user=tls-bootstrap-token-user \
      --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig

    koevn@k8s-master-01:~$ sudo kubectl config use-context tls-bootstrap-token-user@kubernetes \
      --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig

Let the current user manage the cluster. The kubeconfig was created as root, so it is copied with sudo and then handed over to the user.

    koevn@k8s-master-01:~$ mkdir -pv /home/koevn/.kube
    koevn@k8s-master-01:~$ sudo cp /opt/kubernetes/cfg/admin.kubeconfig /home/koevn/.kube/config
    koevn@k8s-master-01:~$ sudo chown koevn: /home/koevn/.kube/config

Check the cluster status

    koevn@k8s-master-01:~$ sudo kubectl get cs   # Health of the core control plane components
    Warning: v1 ComponentStatus is deprecated in v1.19+
    NAME                 STATUS    MESSAGE   ERROR
    etcd-0               Healthy   ok
    controller-manager   Healthy   ok
    scheduler            Healthy   ok

Apply the bootstrap token

    koevn@k8s-master-01:~$ cat > bootstrap.secret.yaml << EOF
    apiVersion: v1
    kind: Secret
    metadata:
      name: bootstrap-token-79b841
      namespace: kube-system
    type: bootstrap.kubernetes.io/token
    stringData:
      description: "The default bootstrap token generated by 'kubelet '."
      token-id: 79b841
      token-secret: 0677456fb3b47289
      usage-bootstrap-authentication: "true"
      usage-bootstrap-signing: "true"
      auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kubelet-bootstrap
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:node-bootstrapper
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: Group
      name: system:bootstrappers:default-node-token
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: node-autoapprove-bootstrap
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: Group
      name: system:bootstrappers:default-node-token
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: node-autoapprove-certificate-rotation
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: Group
      name: system:nodes
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:kube-apiserver-to-kubelet
    rules:
      - apiGroups:
          - ""
        resources:
          - nodes/proxy
          - nodes/stats
          - nodes/log
          - nodes/spec
          - nodes/metrics
        verbs:
          - "*"
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system:kube-apiserver
      namespace: ""
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:kube-apiserver-to-kubelet
    subjects:
      - apiGroup: rbac.authorization.k8s.io
        kind: User
        name: kube-apiserver
    EOF

Create the bootstrap.secret resources in the cluster

    koevn@k8s-master-01:~$ kubectl create -f bootstrap.secret.yaml

III. Install the basic Kubernetes worker components

3.1.0 Required directory layout

    koevn@k8s-worker-01:~$ sudo mkdir -pv /opt/kubernetes/{bin,cert,cfg,etc,manifests}
    koevn@k8s-worker-01:~$ sudo tree /opt/kubernetes/   # Show the directory layout
    /opt/kubernetes/
    ├── bin
    │   ├── kubectl
    │   ├── kubelet
    │   └── kube-proxy
    ├── cert   # Certificate files copied from a master node
    │   ├── ca.pem
    │   ├── front-proxy-ca.pem
    │   ├── kube-proxy-key.pem
    │   └── kube-proxy.pem
    ├── cfg    # kubeconfig files copied from a master node
    │   ├── bootstrap-kubelet.kubeconfig
    │   ├── kubelet.kubeconfig
    │   └── kube-proxy.kubeconfig
    ├── etc
    │   ├── kubelet-conf.yml
    │   └── kube-proxy.yaml
    └── manifests

    6 directories, 12 files

Kubernetes worker nodes only need containerd and kubelet. kube-proxy is not required in this setup, but it is installed along the way; deploy it or leave it out according to your own needs.
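Before starting kubelet on a worker, it can help to confirm that the files copied from the master actually arrived. The sketch below lists whatever is missing; `missing_files` is a hypothetical helper and the file list is an assumption based on the tree above. kubelet.kubeconfig is left out on purpose because the kubelet writes it itself once TLS bootstrapping succeeds.

```bash
#!/bin/sh
# Print each expected file (path relative to the base directory) that does not exist.
# Arguments: base directory, then one or more relative paths.
missing_files() {
    base=$1
    shift
    for rel in "$@"; do
        [ -f "$base/$rel" ] || echo "$rel"
    done
}

# Prints nothing when the worker layout is complete.
missing_files /opt/kubernetes \
    bin/kubelet \
    cert/ca.pem cert/front-proxy-ca.pem \
    cfg/bootstrap-kubelet.kubeconfig \
    etc/kubelet-conf.yml
```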

3.2.0 部署kubelet服务

这个组件是需要部署到集群所有节点,按照这个部署到其他节点。 添加kubelet的service文件

koevn@k8s-worker-01:~$ sudo cat > /usr/lib/systemd/system/kubelet.service << EOF[Unit]Description=Kubernetes KubeletDocumentation=https://github.com/kubernetes/kubernetesAfter=network-online.target firewalld.service containerd.serviceWants=network-online.targetRequires=containerd.service

[Service]ExecStart=/opt/kubernetes/bin/kubelet \ --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig \ --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \ --config=/opt/kubernetes/etc/kubelet-conf.yml \ --container-runtime-endpoint=unix:///run/containerd/containerd.sock \ --node-labels=node.kubernetes.io/node=

[Install]WantedBy=multi-user.targetEOF创建并编辑kubelet配置文件

koevn@k8s-worker-01:~$ sudo cat > /etc/kubernetes/kubelet-conf.yml <<EOFapiVersion: kubelet.config.k8s.io/v1beta1kind: KubeletConfigurationaddress: 0.0.0.0port: 10250readOnlyPort: 10255authentication: anonymous: enabled: false webhook: cacheTTL: 2m0s enabled: true x509: clientCAFile: /opt/kubernetes/cert/ca.pemauthorization: mode: Webhook webhook: cacheAuthorizedTTL: 5m0s cacheUnauthorizedTTL: 30scgroupDriver: systemdcgroupsPerQOS: trueclusterDNS:- 10.90.0.10clusterDomain: cluster.localcontainerLogMaxFiles: 5containerLogMaxSize: 10MicontentType: application/vnd.kubernetes.protobufcpuCFSQuota: truecpuManagerPolicy: nonecpuManagerReconcilePeriod: 10senableControllerAttachDetach: trueenableDebuggingHandlers: trueenforceNodeAllocatable:- podseventBurst: 10eventRecordQPS: 5evictionHard: imagefs.available: 15% memory.available: 100Mi nodefs.available: 10% nodefs.inodesFree: 5%evictionPressureTransitionPeriod: 5m0sfailSwapOn: truefileCheckFrequency: 20shairpinMode: promiscuous-bridgehealthzBindAddress: 127.0.0.1healthzPort: 10248httpCheckFrequency: 20simageGCHighThresholdPercent: 85imageGCLowThresholdPercent: 80imageMinimumGCAge: 2m0siptablesDropBit: 15iptablesMasqueradeBit: 14kubeAPIBurst: 10kubeAPIQPS: 5makeIPTablesUtilChains: truemaxOpenFiles: 1000000maxPods: 110nodeStatusUpdateFrequency: 10soomScoreAdj: -999podPidsLimit: -1registryBurst: 10registryPullQPS: 5resolvConf: /etc/resolv.confrotateCertificates: trueruntimeRequestTimeout: 2m0sserializeImagePulls: truestaticPodPath: /opt/kubernetes/manifestsstreamingConnectionIdleTimeout: 4h0m0ssyncFrequency: 1m0svolumeStatsAggPeriod: 1m0s启动kubelet

    koevn@k8s-worker-01:~$ sudo systemctl daemon-reload                  # reload the systemd unit files
    koevn@k8s-worker-01:~$ sudo systemctl enable --now kubelet.service   # enable kubelet.service and start it immediately
    koevn@k8s-worker-01:~$ sudo systemctl status kubelet.service         # show the current status of kubelet.service

3.3.0 Check the cluster node status

    koevn@k8s-master-01:~$ sudo kubectl get node
    NAME            STATUS     ROLES    AGE   VERSION
    k8s-master-01   NotReady   <none>   50s   v1.33.0
    k8s-master-02   NotReady   <none>   47s   v1.33.0
    k8s-master-03   NotReady   <none>   40s   v1.33.0
    k8s-worker-01   NotReady   <none>   35s   v1.33.0
    k8s-worker-02   NotReady   <none>   28s   v1.33.0
    k8s-worker-03   NotReady   <none>   11s   v1.33.0

Because no CNI network plugin has been installed in the cluster yet, it is normal for every node to show a STATUS of NotReady. The status only changes to Ready once a CNI plugin is running without errors.
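Instead of re-reading the table by eye after the CNI plugin is installed, the STATUS column can be checked from a script. The following is only a minimal sketch and not part of the original procedure: `not_ready_nodes` and `wait_for_nodes` are hypothetical helper names, and the 5-second polling interval and 300-second default timeout are arbitrary assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (names are illustrative, not from the original guide).

# Reads `kubectl get node --no-headers` output on stdin and prints the name
# of every node whose STATUS column is neither "Ready" nor "Ready,<something>".
# A cordoned node ("Ready,SchedulingDisabled") therefore still counts as Ready.
not_ready_nodes() {
  awk '$2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}

# Polls the cluster until no node is reported as not ready, or until the
# timeout (in seconds, default 300) expires.
# Assumes kubectl can reach the cluster for the calling user.
wait_for_nodes() {
  local timeout="${1:-300}" waited=0 nodes pending
  while :; do
    # Abort instead of reporting success when the API server is unreachable.
    nodes="$(kubectl get node --no-headers)" || return 1
    pending="$(printf '%s\n' "$nodes" | not_ready_nodes)"
    if [ -z "$pending" ]; then
      echo "all nodes are Ready"
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      # $pending is left unquoted on purpose so the names print on one line.
      echo "still not Ready after ${timeout}s:" $pending
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}
```

After sourcing the file, `wait_for_nodes 600` would block for up to ten minutes. kubectl's built-in `kubectl wait --for=condition=Ready node --all --timeout=300s` is an alternative when no custom output is needed.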

3.4.0 Check the container runtime version on each node

    koevn@k8s-master-01:~$ sudo kubectl describe node | grep Runtime
      Container Runtime Version:  containerd://2.1.0
      Container Runtime Version:  containerd://2.1.0
      Container Runtime Version:  containerd://2.1.0
      Container Runtime Version:  containerd://2.1.0
      Container Runtime Version:  containerd://2.1.0
      Container Runtime Version:  containerd://2.1.0

3.5.0 Deploy the kube-proxy service (optional)

This component has to be deployed on every node in the cluster; repeat the steps below on each of the other nodes. First, add the systemd service file for kube-proxy.

    koevn@k8s-worker-01:~$ sudo tee /usr/lib/systemd/system/kube-proxy.service > /dev/null << EOF
    [Unit]
    Description=Kubernetes Kube Proxy
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    ExecStart=/opt/kubernetes/bin/kube-proxy \
        --config=/opt/kubernetes/etc/kube-proxy.yaml \
        --cluster-cidr=10.100.0.0/16 \
        --v=2
    Restart=always
    RestartSec=10s

    [Install]
    WantedBy=multi-user.target
    EOF

Create and edit the kube-proxy configuration file

    koevn@k8s-worker-01:~$ sudo tee /opt/kubernetes/etc/kube-proxy.yaml > /dev/null << EOF
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    bindAddress: 0.0.0.0
    clientConnection:
      acceptContentTypes: ""
      burst: 10
      contentType: application/vnd.kubernetes.protobuf
      kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
      qps: 5
    clusterCIDR: 10.100.0.0/16
    configSyncPeriod: 15m0s
    conntrack:
      max: null
      maxPerCore: 32768
      min: 131072
      tcpCloseWaitTimeout: 1h0m0s
      tcpEstablishedTimeout: 24h0m0s
    enableProfiling: false
    healthzBindAddress: 0.0.0.0:10256
    hostnameOverride: ""
    iptables:
      masqueradeAll: false
      masqueradeBit: 14
      minSyncPeriod: 0s
      syncPeriod: 30s
    ipvs:
      masqueradeAll: true
      minSyncPeriod: 5s
      scheduler: "rr"
      syncPeriod: 30s
    kind: KubeProxyConfiguration
    metricsBindAddress: 127.0.0.1:10249
    mode: "ipvs"
    nodePortAddresses: null
    oomScoreAdj: -999
    portRange: ""
    udpIdleTimeout: 250ms
    EOF

Start kube-proxy

    koevn@k8s-worker-01:~$ sudo systemctl daemon-reload                     # reload the systemd unit files
    koevn@k8s-worker-01:~$ sudo systemctl enable --now kube-proxy.service   # enable kube-proxy.service and start it immediately
    koevn@k8s-worker-01:~$ sudo systemctl status kube-proxy.service         # show the current status of kube-proxy.service

4. Install the cluster network plugin

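⚠️ Note: The network plugin installed here is cilium. Because cilium takes over the networking of the Kubernetes cluster, the kube-proxy service must be stopped with `systemctl stop kube-proxy.service` so that it does not interfere with cilium. Cilium can be deployed from any one of the Master nodes.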
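`systemctl stop` only ends the running process. Since the unit was enabled with `enable --now` earlier, it would come back after a reboot. The sketch below shows one way to shut kube-proxy down more durably on a node. It is an illustration under assumptions, not the author's procedure: the `run` wrapper and the `DRY_RUN` variable are hypothetical conveniences for previewing the commands, and the binary path is the one used in the service file above. `--cleanup` is the kube-proxy flag that removes the iptables and ipvs rules it created and then exits.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: with DRY_RUN=1 the command is printed instead of run.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Stops kube-proxy on the local node and keeps it from starting at boot,
# then asks kube-proxy to remove the rules it left behind.
disable_kube_proxy() {
  # Stop the service now and disable it for future boots.
  run sudo systemctl disable --now kube-proxy.service
  # Remove leftover iptables/ipvs rules (path taken from the unit file).
  run sudo /opt/kubernetes/bin/kube-proxy --cleanup
}
```

Calling `DRY_RUN=1 disable_kube_proxy` prints the two commands without touching the node; calling `disable_kube_proxy` alone executes them. It has to be repeated on every node where kube-proxy was deployed.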

4.1.0 Install helm

    # Install with the official script
    koevn@k8s-master-01:~$ sudo curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
    koevn@k8s-master-01:~$ sudo chmod 700 get_helm.sh
    koevn@k8s-master-01:~$ sudo ./get_helm.sh

    # Or, with a release tarball already downloaded, install the binary manually
    koevn@k8s-master-01:~$ sudo tar xvf helm-*-linux-amd64.tar.gz
    koevn@k8s-master-01:~$ sudo cp linux-amd64/helm /usr/local/bin/

4.2.0 Install cilium

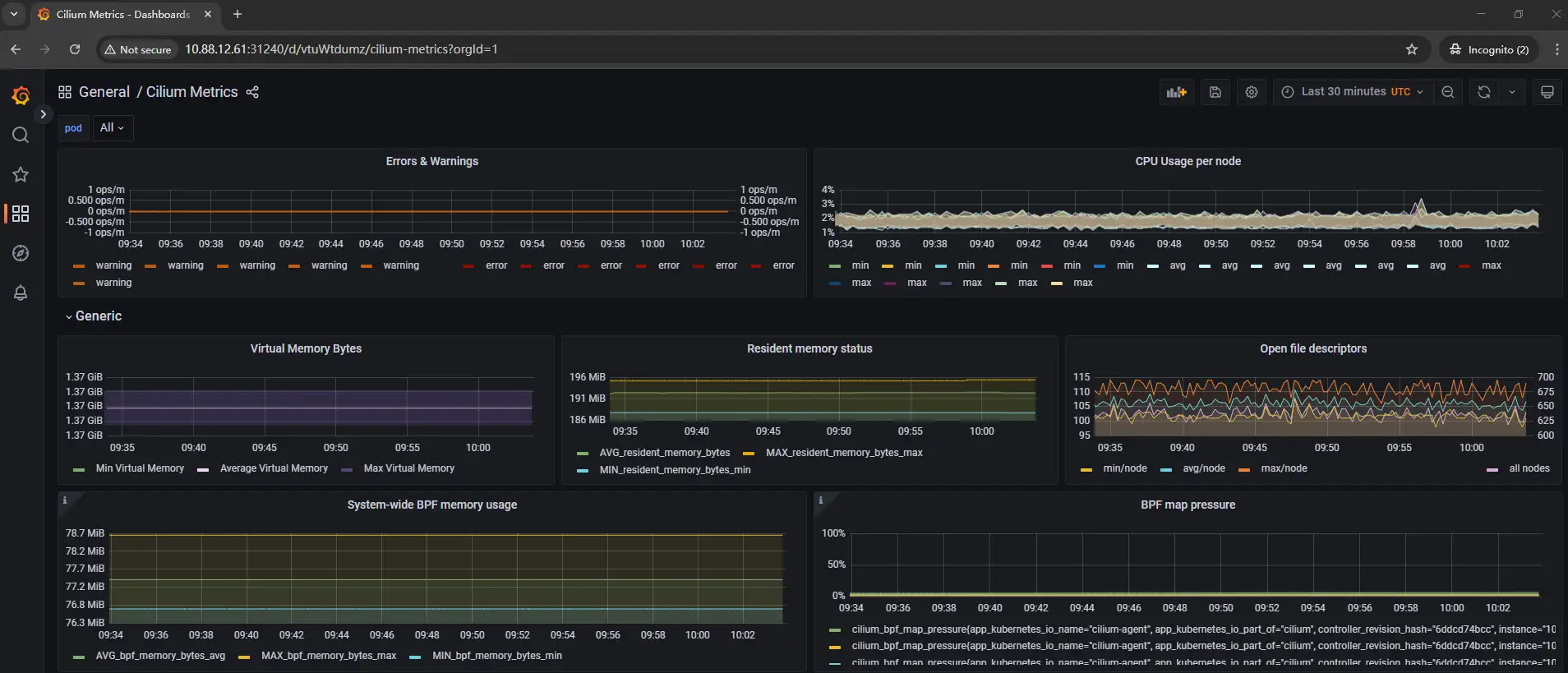

    koevn@k8s-master-01:~$ sudo helm repo add cilium https://helm.cilium.io      # add the chart repository
    koevn@k8s-master-01:~$ sudo helm search repo cilium/cilium --versions        # list the available versions
    koevn@k8s-master-01:~$ sudo helm pull cilium/cilium                          # download the cilium chart package
    koevn@k8s-master-01:~$ sudo tar xvf cilium-*.tgz

Edit the following settings in the values.yaml file inside the cilium directory

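    # ------ other settings omitted ------ #

    kubeProxyReplacement: "true"                             # uncomment and set to true
    k8sServiceHost: "kubernetes.default.svc.cluster.local"   # must match a domain bound into the apiserver certificate when it was generated
    k8sServicePort: "9443"                                   # apiserver port
    ipv4NativeRoutingCIDR: "10.100.0.0/16"                   # Pod IPv4 network
    hubble:
      # enable specific Hubble metrics
      metrics:
        enabled:
        - dns
        - drop
        - tcp
        - flow
        - icmp
        - httpV2

    # ------ other settings omitted ------ #

⚠️ Note: This applies to every `repository` parameter elsewhere in the configuration file. If access to the public registries is unreliable, deploy a private Harbor registry and point these parameters at it, so that containerd does not fail to pull images and leave Pods unable to run.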

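Other features are enabled by following the hierarchy of the configuration parameters:

- Enable the Hubble Relay aggregation service: `hubble.relay.enabled=true`
- Enable the Hubble UI front end (web visualization): `hubble.ui.enabled=true`
- Enable Prometheus metrics output for Cilium: `prometheus.enabled=true`
- Enable Prometheus metrics output for the Operator: `operator.prometheus.enabled=true`
- Enable Hubble: `hubble.enabled=true`

The list above is a flattened way of writing the `values.yaml` hierarchy. The same features can also be enabled by passing, for example, `--set hubble.relay.enabled=true` to the cilium-cli command.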
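Dotted keys like these map directly onto `--set` arguments, so the list can be generated rather than typed out. The sketch below is a minimal illustration: `set_flags` is a hypothetical helper name, and it assumes every listed feature is switched on with the value `true`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turns each dotted values.yaml key given as an argument
# into the two words "--set" and "<key>=true", printed one word per line so
# the result can be read back into an array without word-splitting surprises.
set_flags() {
  local key
  for key in "$@"; do
    printf '%s\n' "--set" "${key}=true"
  done
}
```

In bash 4 or later the output can be collected with `mapfile -t flags < <(set_flags hubble.enabled hubble.relay.enabled hubble.ui.enabled)` and then appended to the install command as `"${flags[@]}"`. This sketch assumes helm is the tool receiving the flags. Values given with `--set` take precedence over the same keys in a file passed with `-f`.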

Install cilium

    koevn@k8s-master-01:~$ sudo helm upgrade --install cilium ./cilium \
        --namespace kube-system \
        --create-namespace \
        -f cilium/values.yaml

Check the status

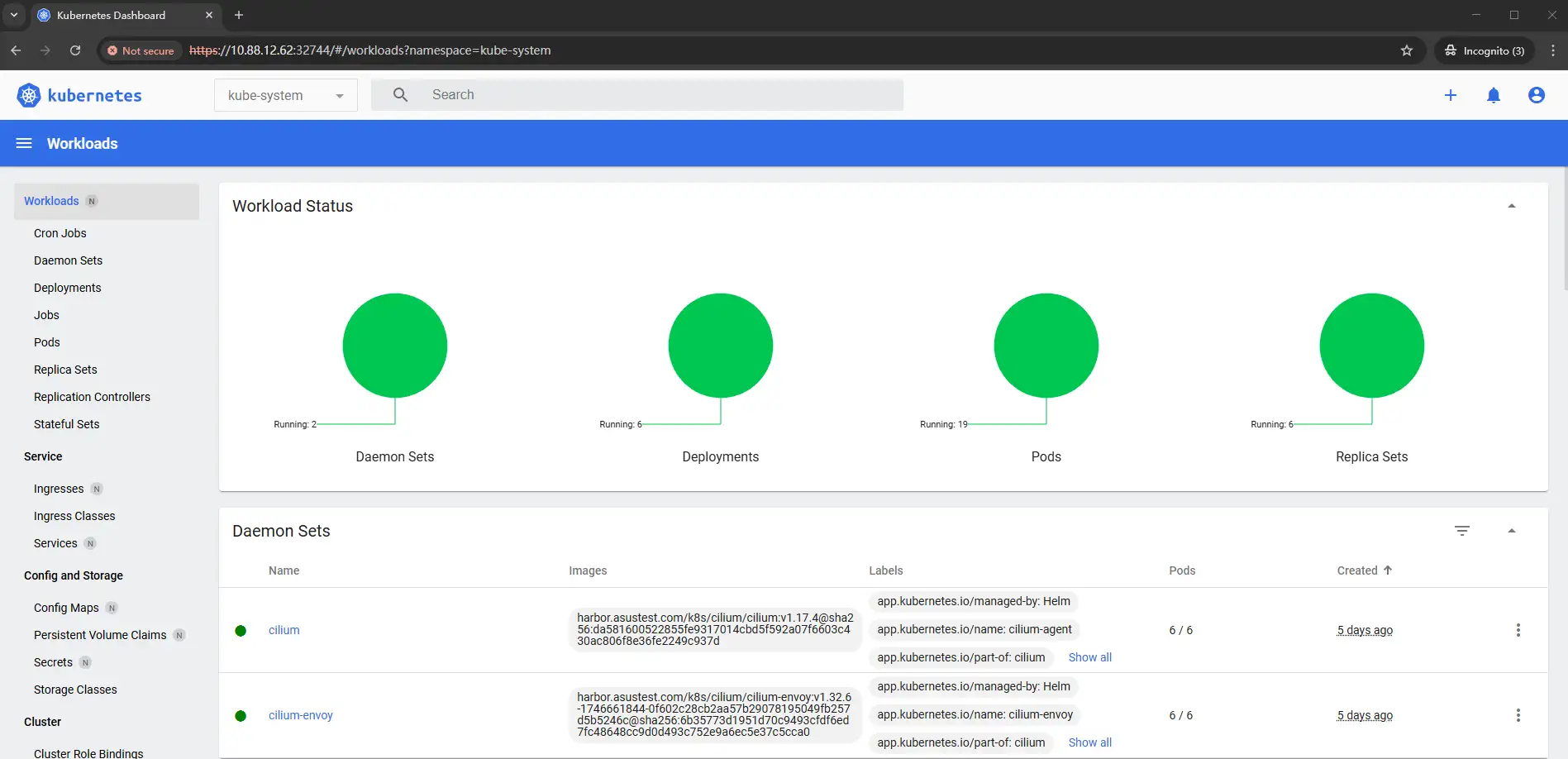

    koevn@k8s-master-01:~$ sudo kubectl get pod -A | grep cil
    cilium-monitoring   grafana-679cd8bff-w9xm9            1/1   Running   0              5d
    cilium-monitoring   prometheus-87d4f66f7-6lmrz         1/1   Running   0              5d
    kube-system         cilium-envoy-4sjq5                 1/1   Running   0              5d
    kube-system         cilium-envoy-64694                 1/1   Running   0              5d
    kube-system         cilium-envoy-fzjjw                 1/1   Running   0              5d
    kube-system         cilium-envoy-twtw6                 1/1   Running   0              5d
    kube-system         cilium-envoy-vwstr                 1/1   Running   0              5d
    kube-system         cilium-envoy-whck6                 1/1   Running   0              5d
    kube-system         cilium-fkjcm                       1/1   Running   0              5d
    kube-system         cilium-h75vq                       1/1   Running   0              5d
    kube-system         cilium-hcx4q                       1/1   Running   0              5d
    kube-system         cilium-jz44w                       1/1   Running   0              5d
    kube-system         cilium-operator-58d8755c44-hnwmd   1/1   Running   57 (84m ago)   5d
    kube-system         cilium-operator-58d8755c44-xmg9f   1/1   Running   54 (82m ago)   5d
    kube-system         cilium-qx5mn                       1/1   Running   0              5d
    kube-system         cilium-wqmzc                       1/1   Running   0              5d

5. Deploy the monitoring dashboards

5.1.0 Create the monitoring services

    koevn@k8s-master-01:~$ sudo wget https://raw.githubusercontent.com/cilium/cilium/1.12.1/examples/kubernetes/addons/prometheus/monitoring-example.yaml
    koevn@k8s-master-01:~$ sudo kubectl apply -f monitoring-example.yaml

5.2.0 Change the Service type to NodePort

View the current Services

    koevn@k8s-master-01:~$ sudo kubectl get svc -A
    NAMESPACE           NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    cilium-monitoring   grafana          ClusterIP   10.90.138.192   <none>        3000/TCP   1d
    cilium-monitoring   prometheus       ClusterIP   10.90.145.89    <none>        9090/TCP   1d
    default             kubernetes       ClusterIP   10.90.0.1       <none>        443/TCP    1d
    kube-system         cilium-envoy     ClusterIP   None            <none>        9964/TCP   1d
    kube-system         coredns          ClusterIP   10.90.0.10      <none>        53/UDP     1d
    kube-system         hubble-metrics   ClusterIP   None            <none>        9965/TCP   1d
    kube-system         hubble-peer      ClusterIP   10.90.63.177    <none>        443/TCP    1d
    kube-system         hubble-relay     ClusterIP   10.90.157.4     <none>        80/TCP     1d
    kube-system         hubble-ui        ClusterIP   10.90.13.110    <none>        80/TCP     1d
    kube-system         metrics-server   ClusterIP   10.90.61.226    <none>        443/TCP    1d

Change the Service type

    koevn@k8s-master-01:~$ sudo kubectl edit svc -n cilium-monitoring grafana
    koevn@k8s-master-01:~$ sudo kubectl edit svc -n cilium-monitoring prometheus
    koevn@k8s-master-01:~$ sudo kubectl edit svc -n kube-system hubble-ui

Each of the commands above opens the corresponding Service as YAML. Change `type: ClusterIP` near the bottom to `type: NodePort`. To undo the change, set it back to `type: ClusterIP`.

View the Services again

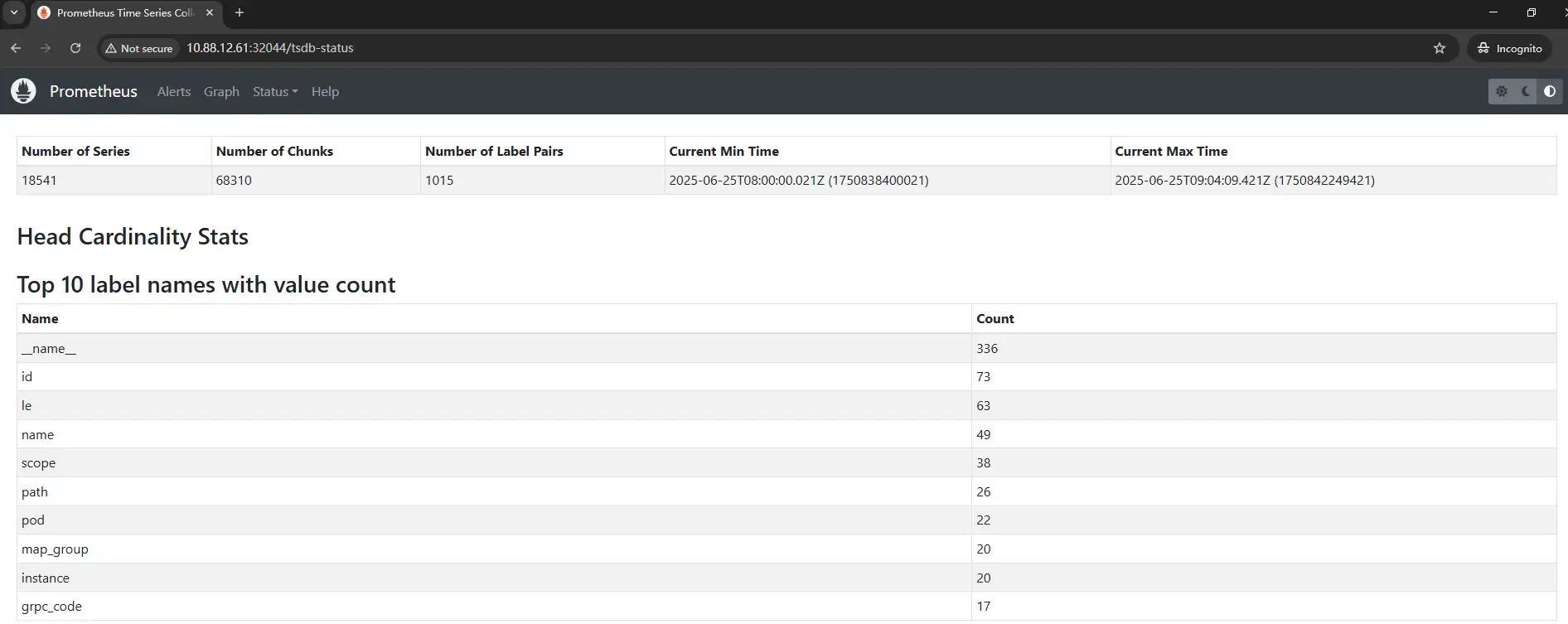

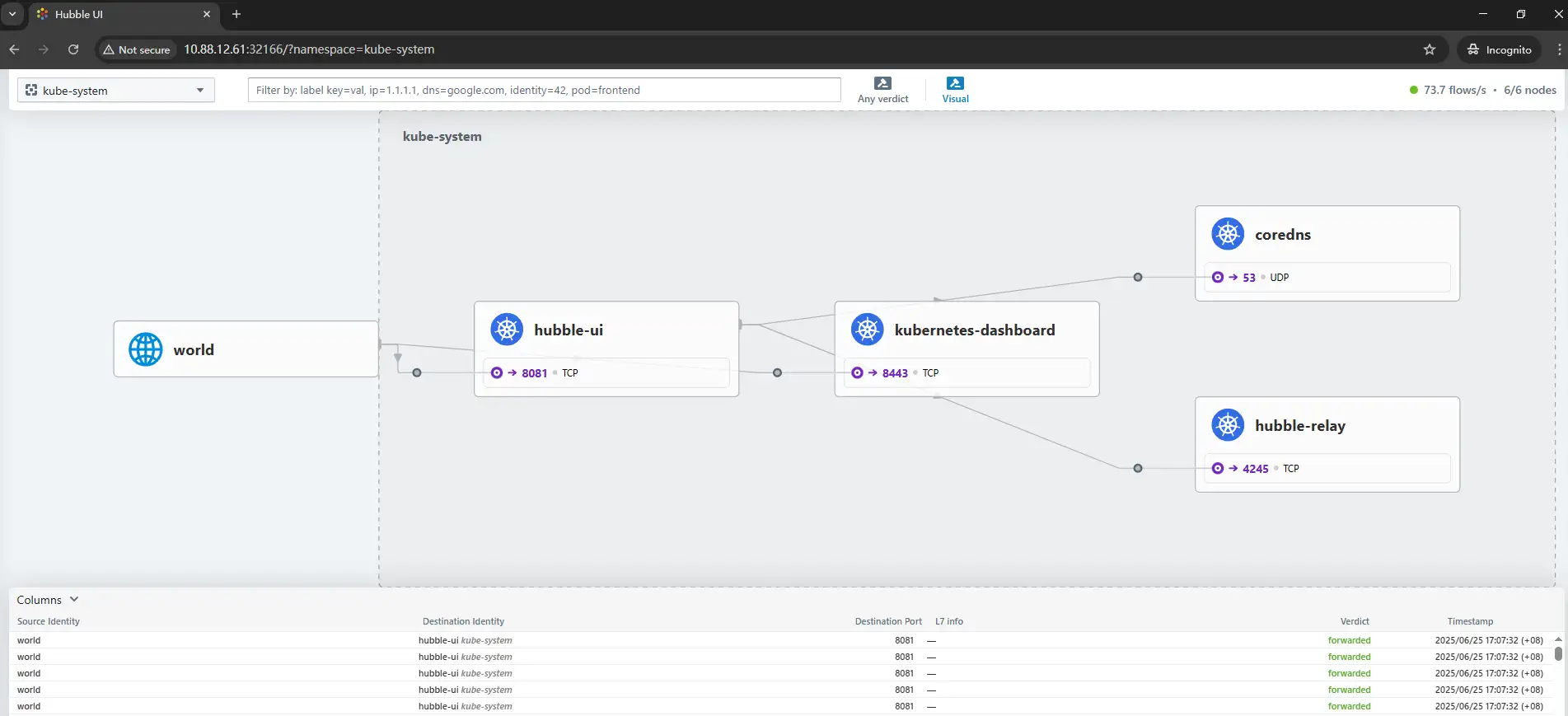

    NAMESPACE           NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    cilium-monitoring   grafana          NodePort    10.90.138.192   <none>        3000:31240/TCP   1d
    cilium-monitoring   prometheus       NodePort    10.90.145.89    <none>        9090:32044/TCP   1d
    default             kubernetes       ClusterIP   10.90.0.1       <none>        443/TCP          1d
    kube-system         cilium-envoy     ClusterIP   None            <none>        9964/TCP         1d
    kube-system         coredns          ClusterIP   10.90.0.10      <none>        53/UDP           1d
    kube-system         hubble-metrics   ClusterIP   None            <none>        9965/TCP         1d
    kube-system         hubble-peer      ClusterIP   10.90.63.177    <none>        443/TCP          1d
    kube-system         hubble-relay     ClusterIP   10.90.157.4     <none>        80/TCP           1d
    kube-system         hubble-ui        NodePort    10.90.13.110    <none>        80:32166/TCP     1d
    kube-system         metrics-server   ClusterIP   10.90.61.226    <none>        443/TCP          1d

Open http://10.88.12.61:31240 in a browser to reach the grafana service.

Open http://10.88.12.61:32044 in a browser to reach the prometheus service.

Open http://10.88.12.61:32166 in a browser to reach the hubble-ui service.
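The interactive `kubectl edit` step can also be done without an editor by using `kubectl patch`, and the browser URL can be derived from the PORT(S) column. The sketch below is an illustration under assumptions. The names `run`, `expose_nodeport`, `node_port_of` and `nodeport_url` are hypothetical, as is the `DRY_RUN` preview switch, and the URL helper assumes plain HTTP as in the three addresses above.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: with DRY_RUN=1 the command is printed instead of run.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Switches a Service to type NodePort. Arguments: namespace, service name.
expose_nodeport() {
  local ns="$1" svc="$2"
  run sudo kubectl patch svc "$svc" -n "$ns" -p '{"spec":{"type":"NodePort"}}'
}

# Extracts the node port from a PORT(S) cell such as "3000:31240/TCP".
# Fails for cells without a node port, for example "3000/TCP".
node_port_of() {
  local cell="$1"
  case "$cell" in
    *:*) ;;
    *) return 1 ;;
  esac
  cell="${cell#*:}"             # drop the service port and the colon
  printf '%s\n' "${cell%%/*}"   # drop the protocol suffix
}

# Builds the browser URL from a node IP and a PORT(S) cell.
nodeport_url() {
  local host="$1" port
  port="$(node_port_of "$2")" || return 1
  printf 'http://%s:%s\n' "$host" "$port"
}
```

For example, `expose_nodeport cilium-monitoring grafana` replaces the first `kubectl edit` command, and `nodeport_url 10.88.12.61 3000:31240/TCP` prints the grafana address listed above.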

6. Install CoreDNS

6.1.0 Download the CoreDNS chart package

    koevn@k8s-master-01:~$ sudo helm repo add coredns https://coredns.github.io/helm
    koevn@k8s-master-01:~$ sudo helm pull coredns/coredns
    koevn@k8s-master-01:~$ sudo tar xvf coredns-*.tgz
    koevn@k8s-master-01:~$ cd coredns/

Edit the values.yaml file

    koevn@k8s-master-01:~/coredns$ sudo vim values.yaml
    service:
      clusterIP: "10.90.0.10"   # uncomment and set the desired IP

Install CoreDNS

    koevn@k8s-master-01:~/coredns$ cd ..
    koevn@k8s-master-01:~$ sudo helm install coredns ./coredns/ -n kube-system \
        -f coredns/values.yaml

7. Install Metrics Server

Download the Metrics Server YAML file

    koevn@k8s-master-01:~$ sudo wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml \
        -O metrics-server.yaml

Edit the metrics-server.yaml file

    # Change around line 134 of the file
          - args:
            - --cert-dir=/tmp
            - --secure-port=10250
            - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
            - --kubelet-use-node-status-port
            - --metric-resolution=15s
            - --kubelet-insecure-tls
            - --requestheader-client-ca-file=/opt/kubernetes/cert/front-proxy-ca.pem
            - --requestheader-username-headers=X-Remote-User
            - --requestheader-group-headers=X-Remote-Group
            - --requestheader-extra-headers-prefix=X-Remote-Extra-

    # Change around line 182 of the file
            volumeMounts:
            - mountPath: /tmp
              name: tmp-dir
            - name: ca-ssl
              mountPath: /opt/kubernetes/cert
          volumes:
          - emptyDir: {}
            name: tmp-dir
          - name: ca-ssl
            hostPath:
              path: /opt/kubernetes/cert

Then apply the deployment

    koevn@k8s-master-01:~$ sudo kubectl apply -f metrics-server.yaml

Check resource usage

    koevn@k8s-master-01:~$ sudo kubectl top node
    NAME            CPU(cores)   CPU(%)   MEMORY(bytes)   MEMORY(%)
    k8s-master-01   212m         5%       2318Mi          61%
    k8s-master-02   148m         3%       2180Mi          57%
    k8s-master-03   178m         4%       2103Mi          55%
    k8s-worker-01   38m          1%       1377Mi          36%
    k8s-worker-02   40m          2%       1452Mi          38%
    k8s-worker-03   32m          1%       1475Mi          39%

8. Verify communication inside the cluster

8.1.0 Deploy a test pod

    koevn@k8s-master-01:~$ cat > busybox.yaml << EOF
    apiVersion: v1
    kind: Pod
    metadata:
      name: busybox
      namespace: default
    spec:
      containers:
      - name: busybox
        image: harbor.koevn.com/library/busybox:1.37.0   # a private registry is used here
        command:
        - sleep
        - "3600"
        imagePullPolicy: IfNotPresent
      restartPolicy: Always
    EOF

Then deploy the pod

    koevn@k8s-master-01:~$ sudo kubectl apply -f busybox.yaml

Check the pod

    koevn@k8s-master-01:~$ sudo kubectl get pod
    NAME      READY   STATUS    RESTARTS   AGE
    busybox   1/1     Running   0          36s

8.2.0 Resolve Services in other namespaces from the pod

View the Services

    koevn@k8s-master-01:~$ sudo kubectl get svc -A
    NAMESPACE           NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    cilium-monitoring   grafana          NodePort    10.90.138.192   <none>        3000:31240/TCP   1d
    cilium-monitoring   prometheus       NodePort    10.90.145.89    <none>        9090:32044/TCP   1d
    default             kubernetes       ClusterIP   10.90.0.1       <none>        443/TCP          1d
    kube-system         cilium-envoy     ClusterIP   None            <none>        9964/TCP         1d
    kube-system         coredns          ClusterIP   10.90.0.10      <none>        53/UDP           1d
    kube-system         hubble-metrics   ClusterIP   None            <none>        9965/TCP         1d
    kube-system         hubble-peer      ClusterIP   10.90.63.177    <none>        443/TCP          1d
    kube-system         hubble-relay     ClusterIP   10.90.157.4     <none>        80/TCP           1d
    kube-system         hubble-ui        NodePort    10.90.13.110    <none>        80:32166/TCP     1d
    kube-system         metrics-server   ClusterIP   10.90.61.226    <none>        443/TCP          1d

Test name resolution

    koevn@k8s-master-01:~$ sudo kubectl exec busybox -n default \
        -- nslookup hubble-ui.kube-system.svc.cluster.local
    Server:    10.90.0.10
    Address:   10.90.0.10:53

    Name:      hubble-ui.kube-system.svc.cluster.local
    Address:   10.90.13.110

    koevn@k8s-master-01:~$ sudo kubectl exec busybox -n default \
        -- nslookup grafana.cilium-monitoring.svc.cluster.local
    Server:    10.90.0.10
    Address:   10.90.0.10:53

    Name:      grafana.cilium-monitoring.svc.cluster.local
    Address:   10.90.138.192

    koevn@k8s-master-01:~$ sudo kubectl exec busybox -n default \
        -- nslookup kubernetes.default.svc.cluster.local
    Server:    10.90.0.10
    Address:   10.90.0.10:53

    Name:      kubernetes.default.svc.cluster.local
    Address:   10.90.0.1

8.3.0 Test the kubernetes port 443 and the coredns port 53 from every node

    koevn@k8s-master-01:~$ sudo telnet 10.90.0.1 443
    Trying 10.90.0.1...
    Connected to 10.90.0.1.
    Escape character is '^]'

    koevn@k8s-master-01:~$ sudo nc -zvu 10.90.0.10 53
    10.90.0.10: inverse host lookup failed: Unknown host
    (UNKNOWN) [10.90.0.10] 53 (domain) open

    koevn@k8s-worker-03:~$ sudo telnet 10.90.0.1 443
    Trying 10.90.0.1...
    Connected to 10.90.0.1.
    Escape character is '^]'

    koevn@k8s-worker-03:~$ sudo nc -zvu 10.90.0.10 53
    10.90.0.10: inverse host lookup failed: Unknown host
    (UNKNOWN) [10.90.0.10] 53 (domain) open

8.4.0 Test network connectivity between Pods

View the pod information

    koevn@k8s-master-01:~$ sudo kubectl get pods -A -o \
    custom-columns="NAMESPACE:.metadata.namespace,STATUS:.status.phase,NAME:.metadata.name,IP:.status.podIP"
    NAMESPACE           STATUS    NAME                               IP
    cilium-monitoring   Running   grafana-679cd8bff-w9xm9            10.100.4.249
    cilium-monitoring   Running   prometheus-87d4f66f7-6lmrz         10.100.3.238
    default             Running   busybox                            10.100.4.232
    kube-system         Running   cilium-envoy-4sjq5                 10.88.12.63
    kube-system         Running   cilium-envoy-64694                 10.88.12.60
    kube-system         Running   cilium-envoy-fzjjw                 10.88.12.62
    kube-system         Running   cilium-envoy-twtw6                 10.88.12.61
    kube-system         Running   cilium-envoy-vwstr                 10.88.12.65
    kube-system         Running   cilium-envoy-whck6                 10.88.12.64
    kube-system         Running   cilium-fkjcm                       10.88.12.63
    kube-system         Running   cilium-h75vq                       10.88.12.64
    kube-system         Running   cilium-hcx4q                       10.88.12.61
    kube-system         Running   cilium-jz44w                       10.88.12.65
    kube-system         Running   cilium-operator-58d8755c44-hnwmd   10.88.12.60
    kube-system         Running   cilium-operator-58d8755c44-xmg9f   10.88.12.65
    kube-system         Running   cilium-qx5mn                       10.88.12.62
    kube-system         Running   cilium-wqmzc                       10.88.12.60
    kube-system         Running   coredns-6f44546d75-qnl9d           10.100.0.187
    kube-system         Running   hubble-relay-7cd9d88674-2tdcc      10.100.3.64
    kube-system         Running   hubble-ui-9f5cdb9bd-8fwsx          10.100.2.214
    kube-system         Running   metrics-server-76cb66cbf9-xfbzq    10.100.5.187

Enter the busybox pod and test the network

    koevn@k8s-master-01:~$ sudo kubectl exec -ti busybox -- sh
    / # ping 10.100.4.249
    PING 10.100.4.249 (10.100.4.249): 56 data bytes
    64 bytes from 10.100.4.249: seq=0 ttl=63 time=0.472 ms
    64 bytes from 10.100.4.249: seq=1 ttl=63 time=0.063 ms
    64 bytes from 10.100.4.249: seq=2 ttl=63 time=0.064 ms
    64 bytes from 10.100.4.249: seq=3 ttl=63 time=0.062 ms

    / # ping 10.88.12.63
    PING 10.88.12.63 (10.88.12.63): 56 data bytes
    64 bytes from 10.88.12.63: seq=0 ttl=62 time=0.352 ms
    64 bytes from 10.88.12.63: seq=1 ttl=62 time=0.358 ms
    64 bytes from 10.88.12.63: seq=2 ttl=62 time=0.405 ms
    64 bytes from 10.88.12.63: seq=3 ttl=62 time=0.314 ms

9. Install the dashboard

9.1.0 Download the dashboard chart package

    koevn@k8s-master-01:~$ sudo helm repo add kubernetes-dashboard \
    https://kubernetes.github.io/dashboard/

    koevn@k8s-master-01:~$ sudo helm search repo \
    kubernetes-dashboard/kubernetes-dashboard --versions   # list the available versions
    NAME                                        CHART VERSION   APP VERSION   DESCRIPTION
    kubernetes-dashboard/kubernetes-dashboard   7.0.0                         General-purpose web UI for Kubernetes clusters
    kubernetes-dashboard/kubernetes-dashboard   6.0.8           v2.7.0        General-purpose web UI for Kubernetes clusters
    kubernetes-dashboard/kubernetes-dashboard   6.0.7           v2.7.0        General-purpose web UI for Kubernetes clusters

    koevn@k8s-master-01:~$ sudo helm pull kubernetes-dashboard/kubernetes-dashboard --version 6.0.8   # pin the version
    koevn@k8s-master-01:~$ sudo tar xvf kubernetes-dashboard-*.tgz
    koevn@k8s-master-01:~$ cd kubernetes-dashboard

Edit the values.yaml file

    image:
      repository: harbor.koevn.com/library/kubernetesui/dashboard   # a private registry is used here
      tag: "v2.7.0"   # empty by default; set the version yourself

9.2.0 Deploy the dashboard

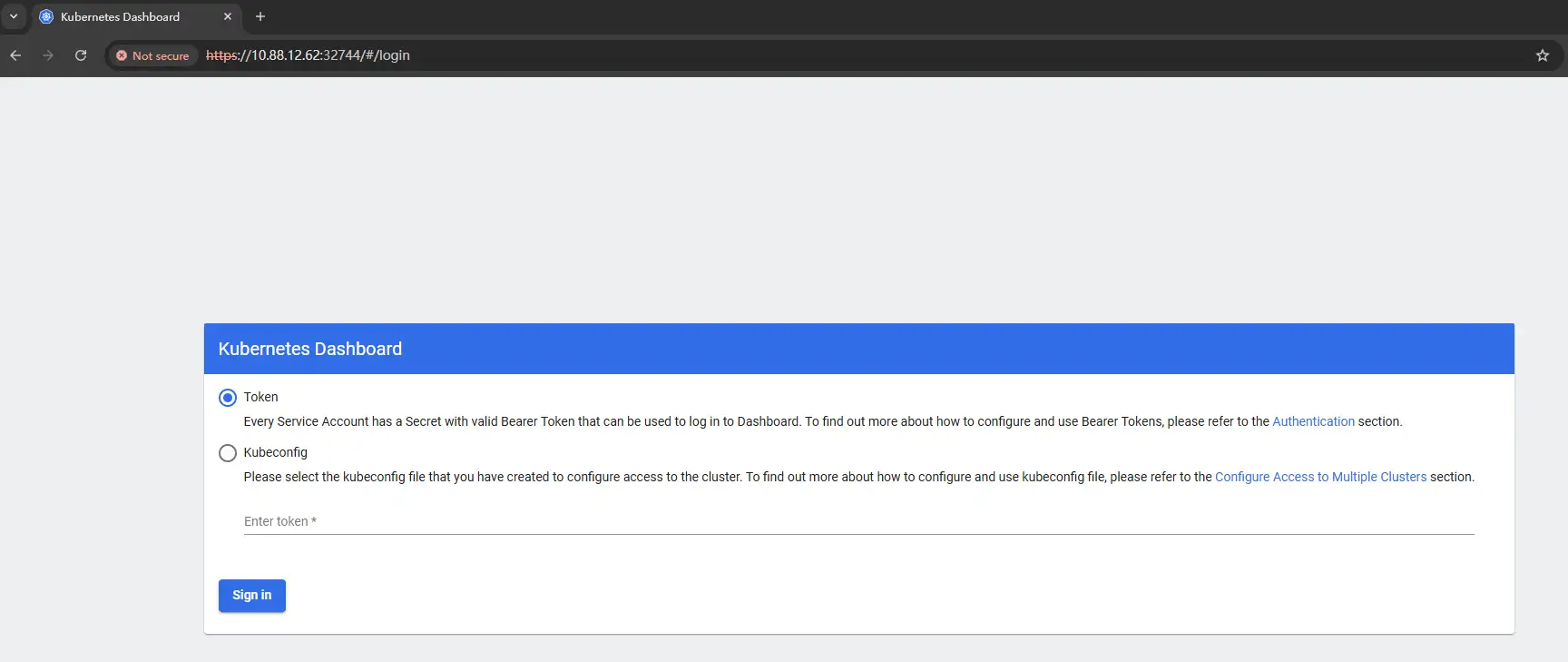

    koevn@k8s-master-01:~/kubernetes-dashboard$ cd ..
    koevn@k8s-master-01:~$ sudo helm install dashboard kubernetes-dashboard/kubernetes-dashboard \
        --version 6.0.8 \
        --namespace kubernetes-dashboard \
        --create-namespace

9.3.0 Temporary token

    koevn@k8s-master-01:~$ cat > dashboard-user.yaml << EOF
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kube-system
    EOF

Apply it

    koevn@k8s-master-01:~$ sudo kubectl apply -f dashboard-user.yaml

Create a temporary token

    koevn@k8s-master-01:~$ sudo kubectl -n kube-system create token admin-user
    eyJhbGciOiJSUzI1NiIsImtpZCI6IjRkV3lkZTU1dF9mazczemwwaUdxUElPWDZhejFyaDh2ZzRhZmN5RlN3T0EifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzUwOTExMzc1LCJpYXQiOjE3NTA5MDc3NzUsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwianRpIjoiMmQwZmViMzItNDJlNy00ODE0LWJmYjUtOGU5MTFhNWZhZDM2Iiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiZmEwZGFhNjAtNWEzNC00NTFjLWFiYmUtNTQ2Y2EwOWVkNWQyIn19LCJuYmYiOjE3NTA5MDc3NzUsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbi11c2VyIn0.XbF8s70UTzrOnf8TrkyC7-3vdxLKQU1aQiCRGgRWyzh4e-A0uFgjVfQ17IrFsZEOVpE8H9ydNc3dZbP81apnGegeFZ42J7KmUkSUJnh5UbiKjmfWwK9ysoP-bba5nnq1uWB_iFR6r4vr6Q_B4-YyAn1DVy70VNaHrfyakyvpJ69L-5eH2jHXn68uizXdi4brf2YEAwDlmBWufeQqtPx7pdnF5HNMyt56oxQb2S2gNrgwLvb8WV2cIKE3DvjQYfcQeaufWK3gn0y-2h5-3z3r4084vHrXAYJRkPmOKy7Fh-DZ8t1g7icNfDRg4geI48WrMH2vOk3E_cpjRxS7dC5P9A

9.4.0 Create a long-lived token

    koevn@k8s-master-01:~$ cat > dashboard-user-token.yaml << EOF
    apiVersion: v1
    kind: Secret
    metadata:
      name: admin-user
      namespace: kube-system
      annotations:
        kubernetes.io/service-account.name: "admin-user"
    type: kubernetes.io/service-account-token
    EOF

Apply it

    koevn@k8s-master-01:~$ sudo kubectl apply -f dashboard-user-token.yaml

View the token

    koevn@k8s-master-01:~$ sudo kubectl get secret admin-user -n kube-system -o jsonpath='{.data.token}' | base64 -d
    eyJhbGciOiJSUzI1NiIsImtpZCI6IjRkV3lkZTU1dF9mazczemwwaUdxUElPWDZhejFyaDh2ZzRhZmN5RlN3T0EifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzUwOTExNzM5LCJpYXQiOjE3NTA5MDgxMzksImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwianRpIjoiMmZmNTE5NGEtOTlkMC00MDJmLTljNWUtMGQxOTMyZDkxNjgwIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiZmEwZGFhNjAtNWEzNC00NTFjLWFiYmUtNTQ2Y2EwOWVkNWQyIn19LCJuYmYiOjE3NTA5MDgxMzksInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbi11c2VyIn0.LJa37eblpk_OBWGqRgX2f_aCMiCrrpjY57dW8bskKUWu7ldLhgR6JIbICI0NVnvX4by3RX9v_FGnAPwU821VDp05oYT1KcTDXV1BC57G4QGL4kS9tBOrmRyXY0jxB8ETRmGx8ECiCJqNfrVdT99dm8oaFqJx1zq6jut70UwhxQCIh7C-QVqg6Gybbb3a9x25M2YvVHWStduN_swMOQxyQDBRtA0ARAyClu73o36pDCs_a56GizGspA4bvHpHPT-_y1i3EkeVjMsEl6JQ0PeJNQiM4fBvtJ2I_0kqoEHNMRYzZXEQNETXF9vqjkiEg7XBlKe1L2Ke1-xwK5ZBKFnPOg

9.5.0 Log in to the dashboard

Change the dashboard Service

    koevn@k8s-master-01:~$ sudo kubectl edit svc -n kube-system kubernetes-dashboard
      type: NodePort

View the mapped port

    koevn@k8s-master-01:~$ sudo kubectl get svc kubernetes-dashboard -n kube-system
    NAME                   TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
    kubernetes-dashboard   NodePort   10.90.211.135   <none>        443:32744/TCP   5d

Access it in a browser