A Kubernetes cluster I deployed a few years ago needs to be upgraded gradually. I haven't written the process down in a long time and am a little rusty on the deployment method, so here I sort out the steps for deploying the new version of the cluster.

1. Environment

1.1.0 Cluster environment

| Hostname | IP | Role | Software |
|---|---|---|---|
| k8s-master-01 | 10.88.12.60 | Master | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, haproxy, containerd |
| k8s-master-02 | 10.88.12.61 | Master | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, containerd |
| k8s-master-03 | 10.88.12.62 | Master | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, containerd |
| k8s-worker-01 | 10.88.12.63 | Worker | kubelet, containerd |
| k8s-worker-02 | 10.88.12.64 | Worker | kubelet, containerd |
| k8s-worker-03 | 10.88.12.65 | Worker | kubelet, containerd |
| - | 10.88.12.100 | VIP | Normally a floating address managed by keepalived; for simplicity it is configured here directly on an additional network interface |

You may notice that kube-proxy is missing from the components above. This cluster uses Cilium as a kube-proxy replacement, but the kube-proxy certificate and configuration are still prepared during deployment in case you want it. Also note that every node must have the runc binary installed.
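Every node needs runc alongside containerd and kubelet, so a quick preflight check can catch a missing binary before any service is started. The following is a minimal sketch. The helper name `check_bins` and the binary list are illustrative assumptions, not part of the original setup. The check only searches `PATH`, so add `/opt/kubernetes/bin` or `/opt/etcd/bin` to `PATH` first if the binaries live there.

```bash
#!/usr/bin/env bash
# Minimal preflight sketch: report every required binary that is not on PATH.
# check_bins is a hypothetical helper; adjust the list to the node's role.
check_bins() {
  local missing=0 bin
  for bin in "$@"; do
    if ! command -v "$bin" >/dev/null 2>&1; then
      echo "missing: $bin" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example for a worker node:
# check_bins runc containerd kubelet && echo "preflight OK"
```

The function returns a non-zero status when anything is missing, so it can gate later steps with `&&`.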

1.2.0 Network

| Type | IP/Mask | Description |
|---|---|---|
| Host | 10.88.12.0/24 | Physical or virtual machine network |
| Cluster | 10.90.0.0/16 | Cluster Service network |
| Pod | 10.100.0.0/16 | Cluster Pod network |
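Two addresses used later are derived from the Service network. The first address, 10.90.0.1, becomes the ClusterIP of the built-in `kubernetes` Service, which is why it appears in the apiserver certificate. 10.90.0.10 is used as `clusterDNS` in the kubelet configuration; the `.10` offset is a common convention, not a requirement. The three networks must also not overlap.

The Bash sketch below is IPv4 only. It computes such offsets and checks for overlap. All function names are hypothetical helpers.

```bash
#!/usr/bin/env bash
# IPv4-only helpers (hypothetical names) for reasoning about the networks above.

# ip_to_int A.B.C.D -> 32-bit integer
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# int_to_ip N -> dotted quad
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# cidr_range CIDR -> "first last" as integers
cidr_range() {
  local net=${1%/*} len=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local first=$(( $(ip_to_int "$net") & mask ))
  echo "$first $(( first + (1 << (32 - len)) - 1 ))"
}

# nth_ip CIDR N -> the N-th address after the network address
nth_ip() {
  local first last
  read -r first last <<< "$(cidr_range "$1")"
  int_to_ip $(( first + $2 ))
}

# cidrs_overlap CIDR1 CIDR2 -> exit status 0 if the ranges share any address
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

nth_ip 10.90.0.0/16 1     # ClusterIP of the "kubernetes" Service -> 10.90.0.1
nth_ip 10.90.0.0/16 10    # clusterDNS in kubelet-conf.yml        -> 10.90.0.10
for pair in "10.88.12.0/24 10.90.0.0/16" "10.88.12.0/24 10.100.0.0/16" "10.90.0.0/16 10.100.0.0/16"; do
  if cidrs_overlap ${pair}; then echo "overlap: ${pair}"; fi
done
```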

1.3.0 Software Version

| Software | Version |
|---|---|
| cfssl | 1.6.5 |
| containerd | v2.1.0 |
| crictl | 0.1.0 |
| runc | 1.3.0 |
| helm | v3.18.0 |
| OS kernel | 6.1.0-29 |

Other basic system preparation is not covered here. This includes installing dependencies, tuning kernel parameters, raising file-descriptor limits, loading kernel modules (for example ipvs), synchronizing time across hosts, disabling SELinux, and disabling swap. Configure these according to your own needs.

2. Install the basic components on the Kubernetes Master nodes

2.1.0 Deploy containerd

Run the following on all nodes in the cluster:

```bash
# Extract to /usr/local/ (the archive's bin/ directory ends up in /usr/local/bin/)
koevn@k8s-master-01:~$ sudo tar -xvf containerd-2.1.0-linux-amd64.tar.gz -C /usr/local/

# Create the systemd service file
koevn@k8s-master-01:~$ sudo tee /etc/systemd/system/containerd.service > /dev/null << 'EOF'
[Unit]
Description=containerd container runtime
Documentation=https://containerd.io
After=network.target

[Service]
ExecStart=/usr/local/bin/containerd --config /etc/containerd/config.toml
Delegate=yes
KillMode=process
Restart=always
LimitNOFILE=1048576
LimitNPROC=infinity
LimitCORE=infinity

[Install]
WantedBy=multi-user.target
EOF

# Create the containerd configuration files
koevn@k8s-master-01:~$ sudo mkdir -pv /etc/containerd/certs.d/harbor.koevn.com
koevn@k8s-master-01:~$ containerd config default | sudo tee /etc/containerd/config.toml
koevn@k8s-master-01:~$ sudo touch /etc/containerd/certs.d/harbor.koevn.com/hosts.toml
```

Modify `/etc/containerd/config.toml`:

```toml
[plugins]
  [plugins.'io.containerd.cri.v1.images']
    snapshotter = 'overlayfs'
    disable_snapshot_annotations = true
    discard_unpacked_layers = false
    max_concurrent_downloads = 3
    image_pull_progress_timeout = '5m0s'
    image_pull_with_sync_fs = false
    stats_collect_period = 10

    [plugins.'io.containerd.cri.v1.images'.pinned_images]
      sandbox = 'harbor.koevn.com/k8s/pause:3.10' # Use your own pause image; here it points to a private registry

    [plugins.'io.containerd.cri.v1.images'.registry]
      config_path = "/etc/containerd/certs.d" # Directory holding the per-registry hosts.toml files

    [plugins.'io.containerd.cri.v1.images'.image_decryption]
      key_model = 'node'
```

Configure `/etc/containerd/certs.d/harbor.koevn.com/hosts.toml`:

```toml
server = "https://harbor.koevn.com"

[host."https://harbor.koevn.com"]
  capabilities = ["pull", "resolve", "push"]
  skip_verify = true
  username = "admin"
  password = "K123456"
```

Note: containerd's documented `hosts.toml` fields (such as `server`, `capabilities`, `ca`, `skip_verify` and `header`) do not include `username` or `password`, so those two lines are most likely ignored. If the registry requires authentication, supply credentials by another means, for example Kubernetes `imagePullSecrets`.
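If you mirror several registries, you can generate one `certs.d/<host>/hosts.toml` per registry instead of writing each file by hand. The sketch below makes some assumptions:

- `write_hosts_toml` is a hypothetical helper.
- It takes the target directory as an argument, so it can be tried in a scratch directory before pointing it at `/etc/containerd/certs.d`.
- It deliberately writes no credentials.

```bash
#!/usr/bin/env bash
# write_hosts_toml CERTS_DIR REGISTRY_HOST [SKIP_VERIFY]
# Hypothetical helper: writes CERTS_DIR/REGISTRY_HOST/hosts.toml in the same
# shape as the file above (server + one [host."..."] section).
write_hosts_toml() {
  local certs_dir=$1 host=$2 skip_verify=${3:-false}
  mkdir -p "$certs_dir/$host" || return 1
  cat > "$certs_dir/$host/hosts.toml" <<EOF
server = "https://$host"

[host."https://$host"]
  capabilities = ["pull", "resolve", "push"]
  skip_verify = $skip_verify
EOF
}

# Example (scratch directory first, then the real one with sudo):
# write_hosts_toml "$(mktemp -d)" harbor.koevn.com true
```

containerd reads these files from the directory set in `config_path`.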

```bash
# Reload systemd unit files
koevn@k8s-master-01:~$ sudo systemctl daemon-reload

# Start the containerd service
koevn@k8s-master-01:~$ sudo systemctl start containerd.service

# Check the containerd service status
koevn@k8s-master-01:~$ sudo systemctl status containerd.service

# Start containerd automatically at boot
koevn@k8s-master-01:~$ sudo systemctl enable --now containerd.service
```

2.1.1 Configuring crictl

```bash
# Extract crictl
koevn@k8s-master-01:~$ sudo tar xf crictl-v*-linux-amd64.tar.gz -C /usr/sbin/

# Generate the configuration file
koevn@k8s-master-01:~$ sudo tee /etc/crictl.yaml > /dev/null << 'EOF'
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
EOF

# Check
koevn@k8s-master-01:~$ sudo crictl info
```

2.2.0 Deploy etcd

etcd is deployed on the three Master nodes.

2.2.1 Prepare the etcd environment

```bash
# Move the downloaded cfssl tools to /usr/local/bin
koevn@k8s-master-01:/tmp$ sudo mv cfssl_1.6.5_linux_amd64 /usr/local/bin/cfssl
koevn@k8s-master-01:/tmp$ sudo mv cfssljson_1.6.5_linux_amd64 /usr/local/bin/cfssljson
koevn@k8s-master-01:/tmp$ sudo mv cfssl-certinfo_1.6.5_linux_amd64 /usr/local/bin/cfssl-certinfo
koevn@k8s-master-01:/tmp$ sudo chmod +x /usr/local/bin/cfss*

# Create the etcd service directories
koevn@k8s-master-01:~$ sudo mkdir -pv /opt/etcd/{bin,cert,config}

# Extract the etcd release and move etcd, etcdctl and etcdutl into place
koevn@k8s-master-01:/tmp$ sudo tar -xvf etcd*.tar.gz && sudo mv etcd-*/etcd* /opt/etcd/bin/

# Add etcd to PATH
koevn@k8s-master-01:/tmp$ echo 'export PATH=/opt/etcd/bin:$PATH' | sudo tee /etc/profile.d/etcd.sh && source /etc/profile.d/etcd.sh

# Create the certificate working directory
koevn@k8s-master-01:/tmp$ sudo mkdir -pv /opt/cert/etcd
```

2.2.2 Generate etcd certificate

Create a CA configuration file:

```bash
koevn@k8s-master-01:/tmp$ cd /opt/cert
koevn@k8s-master-01:/opt/cert$ sudo tee ca-config.json > /dev/null << 'EOF'
{
  "signing": {
    "default": {
      "expiry": "876000h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "876000h"
      }
    }
  }
}
EOF
```

Create the etcd CA certificate signing request:

```bash
koevn@k8s-master-01:/opt/cert$ sudo tee etcd-ca-csr.json > /dev/null << 'EOF'
{
  "CN": "etcd",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "ST": "California",
      "L": "Los Angeles",
      "O": "Koevn",
      "OU": "Koevn Security"
    }
  ],
  "ca": {
    "expiry": "876000h"
  }
}
EOF
```

Generate the etcd CA certificate:

```bash
koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert -initca etcd-ca-csr.json | sudo cfssljson -bare /opt/cert/etcd/etcd-ca
```

Create the etcd certificate signing request:

```bash
koevn@k8s-master-01:/opt/cert$ sudo tee etcd-csr.json > /dev/null << 'EOF'
{
  "CN": "etcd",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "ST": "California",
      "L": "Los Angeles",
      "O": "Koevn",
      "OU": "Koevn Security"
    }
  ]
}
EOF
```

Generate the etcd certificate:

```bash
koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
  -ca=/opt/cert/etcd/etcd-ca.pem \
  -ca-key=/opt/cert/etcd/etcd-ca-key.pem \
  -config=ca-config.json \
  -hostname=127.0.0.1,k8s-master-01,k8s-master-02,k8s-master-03,10.88.12.60,10.88.12.61,10.88.12.62 \
  -profile=kubernetes \
  etcd-csr.json | sudo cfssljson -bare /opt/cert/etcd/etcd
```

Copy the generated `etcd-ca.pem`, `etcd.pem` and `etcd-key.pem` from the /opt/cert/etcd directory to the /opt/etcd/cert/ directory.
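The `-hostname` list must name every hostname and address that clients and peers use to reach etcd. Rather than typing it by hand, you can assemble it from the node table in section 1.1.0.

This is a sketch with a hypothetical helper, `join_sans`. It assumes Bash 4 or later, because it uses an associative array for de-duplication.

```bash
#!/usr/bin/env bash
# join_sans ITEM... -> comma-separated list, duplicates removed, order kept
join_sans() {
  local -A seen=()
  local out=() item
  for item in "$@"; do
    [[ -n ${seen[$item]:-} ]] && continue
    seen[$item]=1
    out+=("$item")
  done
  local IFS=,
  echo "${out[*]}"
}

masters=(k8s-master-01 k8s-master-02 k8s-master-03)
master_ips=(10.88.12.60 10.88.12.61 10.88.12.62)

# Same entries, in the same order, as the -hostname flag used above
etcd_sans=$(join_sans 127.0.0.1 "${masters[@]}" "${master_ips[@]}")
echo "$etcd_sans"
```

The result can be passed as `-hostname="$etcd_sans"`. When a member is added later, extend the two arrays and regenerate the certificate.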

2.2.3 Create etcd configuration file

```bash
koevn@k8s-master-01:~$ sudo tee /opt/etcd/config/etcd.config.yml > /dev/null << 'EOF'
name: 'k8s-master-01' # Change to each node's name
data-dir: /data/etcd/data
wal-dir: /data/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://10.88.12.60:2380' # Change to each node's IP (also in the next three URL lines)
listen-client-urls: 'https://10.88.12.60:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://10.88.12.60:2380'
advertise-client-urls: 'https://10.88.12.60:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master-01=https://10.88.12.60:2380,k8s-master-02=https://10.88.12.61:2380,k8s-master-03=https://10.88.12.62:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/opt/etcd/cert/etcd.pem'
  key-file: '/opt/etcd/cert/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/opt/etcd/cert/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/opt/etcd/cert/etcd.pem'
  key-file: '/opt/etcd/cert/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/opt/etcd/cert/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
EOF
```

View the etcd service directory structure:

```bash
koevn@k8s-master-01:~$ sudo tree /opt/etcd/
/opt/etcd/
├── bin
│   ├── etcd
│   ├── etcdctl
│   └── etcdutl
├── cert
│   ├── etcd-ca.pem
│   ├── etcd-key.pem
│   └── etcd.pem
└── config
    └── etcd.config.yml

4 directories, 7 files
```

Then package etcd and distribute it to the other Master nodes:

```bash
koevn@k8s-master-01:~$ sudo tar -czvf /tmp/etcd.tar.gz /opt/etcd
```

2.2.4 Configure and start the service on each etcd node

```bash
koevn@k8s-master-01:~$ sudo tee /etc/systemd/system/etcd.service > /dev/null << 'EOF'
[Unit]
Description=Etcd Service
Documentation=https://coreos.com/etcd/docs/latest/
After=network.target

[Service]
Type=notify
ExecStart=/opt/etcd/bin/etcd --config-file=/opt/etcd/config/etcd.config.yml
Restart=on-failure
RestartSec=10
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
Alias=etcd3.service
EOF

# Reload systemd unit files
koevn@k8s-master-01:~$ sudo systemctl daemon-reload

# Start the etcd service
koevn@k8s-master-01:~$ sudo systemctl start etcd.service

# Check the etcd service status
koevn@k8s-master-01:~$ sudo systemctl status etcd.service

# Start etcd automatically at boot
koevn@k8s-master-01:~$ sudo systemctl enable --now etcd.service
```
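Only a few values differ between the three etcd configuration files: `name` and the node's own listen and advertise URLs. `initial-cluster` must stay identical on every node.

After copying `/tmp/etcd.tar.gz` to master-02 and master-03, extract it with `sudo tar -xzvf /tmp/etcd.tar.gz -C /`. GNU tar strips the leading `/` when archiving, so this restores `/opt/etcd`. A sed-based sketch can then derive each node's file from the master-01 file.

The helper name is hypothetical. It assumes the source file still contains master-01's values, `k8s-master-01` and `10.88.12.60`.

```bash
#!/usr/bin/env bash
# render_etcd_config SRC NODE_NAME NODE_IP
# Hypothetical helper. Assumes SRC was written for k8s-master-01 / 10.88.12.60.
# Rewrites the name line and every 10.88.12.60 except on the initial-cluster
# line, which must list all members unchanged on every node.
render_etcd_config() {
  local src=$1 name=$2 ip=$3
  # Single quotes around the "!s" expression avoid history expansion in an
  # interactive shell.
  sed -e "s/^name: .*/name: '${name}'/" \
      -e '/^initial-cluster:/!s/10\.88\.12\.60/'"${ip}"'/g' \
      "$src"
}

# Example on k8s-master-02 (write a new file, then move it into place):
# render_etcd_config /opt/etcd/config/etcd.config.yml k8s-master-02 10.88.12.61 \
#   | sudo tee /opt/etcd/config/etcd.config.yml.new > /dev/null
# sudo mv /opt/etcd/config/etcd.config.yml.new /opt/etcd/config/etcd.config.yml
```

Writing to a `.new` file first matters: piping `sed` output back into the same file with `tee` could truncate it before it is read.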

2.2.5 Check etcd status

```bash
koevn@k8s-master-01:~$ sudo -i
root@k8s-master-01:~# export ETCDCTL_API=3
root@k8s-master-01:~# etcdctl --endpoints="10.88.12.60:2379,10.88.12.61:2379,10.88.12.62:2379" \
  --cacert=/opt/etcd/cert/etcd-ca.pem \
  --cert=/opt/etcd/cert/etcd.pem \
  --key=/opt/etcd/cert/etcd-key.pem \
  endpoint status \
  --write-out=table
+------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
|     ENDPOINT     |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| 10.88.12.60:2379 | c1f013862d84cb12 |  3.5.21 |   35 MB |     false |      false |       158 |    8434769 |            8434769 |        |
| 10.88.12.61:2379 | cea2c8779e4914d0 |  3.5.21 |   36 MB |     false |      false |       158 |    8434769 |            8434769 |        |
| 10.88.12.62:2379 | df3b2276b87896db |  3.5.21 |   33 MB |      true |      false |       158 |    8434769 |            8434769 |        |
+------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
```

2.3.0 Generate Kubernetes certificates

Create the Kubernetes certificate storage directories:

```bash
koevn@k8s-master-01:~$ sudo mkdir -pv /opt/cert/kubernetes/pki
koevn@k8s-master-01:~$ sudo mkdir -pv /opt/kubernetes/cfg # Directory for the cluster kubeconfig files
koevn@k8s-master-01:~$ cd /opt/cert
```

2.3.1 Generate the Kubernetes CA certificate

```bash
koevn@k8s-master-01:/opt/cert$ sudo tee ca-csr.json > /dev/null << 'EOF'
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "ST": "California",
      "L": "Los Angeles",
      "O": "Kubernetes",
      "OU": "Kubernetes-manual"
    }
  ],
  "ca": {
    "expiry": "876000h"
  }
}
EOF
```

Generate the CA certificate:

```bash
koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert -initca ca-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/ca
```

2.3.2 Generate the apiserver certificate

```bash
koevn@k8s-master-01:/opt/cert$ sudo tee apiserver-csr.json > /dev/null << 'EOF'
{
  "CN": "kube-apiserver",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "ST": "California",
      "L": "Los Angeles",
      "O": "Kubernetes",
      "OU": "Kubernetes-manual"
    }
  ]
}
EOF
```

```bash
koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
  -ca=/opt/cert/kubernetes/pki/ca.pem \
  -ca-key=/opt/cert/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -hostname=10.90.0.1,10.88.12.100,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,k8s.koevn.com,10.88.12.60,10.88.12.61,10.88.12.62,10.88.12.63,10.88.12.64,10.88.12.65,10.88.12.66,10.88.12.67,10.88.12.68 \
  -profile=kubernetes \
  apiserver-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/apiserver
```

2.3.3 Generate the apiserver aggregation layer (front-proxy) certificates

```bash
koevn@k8s-master-01:/opt/cert$ sudo tee front-proxy-ca-csr.json > /dev/null << 'EOF'
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "ca": {
    "expiry": "876000h"
  }
}
EOF

koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
  -initca front-proxy-ca-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/front-proxy-ca

koevn@k8s-master-01:/opt/cert$ sudo tee front-proxy-client-csr.json > /dev/null << 'EOF'
{
  "CN": "front-proxy-client",
  "key": {
    "algo": "rsa",
    "size": 2048
  }
}
EOF

koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
  -ca=/opt/cert/kubernetes/pki/front-proxy-ca.pem \
  -ca-key=/opt/cert/kubernetes/pki/front-proxy-ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  front-proxy-client-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/front-proxy-client
```

2.3.4 Generate the controller-manager certificate

The controller-manager client certificate must be signed by the cluster CA (`ca.pem`), because kube-apiserver verifies client certificates against `--client-ca-file`. It must not be signed by the front-proxy CA.

```bash
koevn@k8s-master-01:/opt/cert$ sudo tee manager-csr.json > /dev/null << 'EOF'
{
  "CN": "system:kube-controller-manager",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:kube-controller-manager",
      "OU": "Kubernetes-manual"
    }
  ]
}
EOF

koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
  -ca=/opt/cert/kubernetes/pki/ca.pem \
  -ca-key=/opt/cert/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  manager-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/controller-manager
```
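Each component kubeconfig in this section is built with the same four `kubectl config` calls: set-cluster, set-credentials, set-context and use-context. Only the user name, the certificate pair and the output file change.

A sketch of a wrapper follows. `make_kubeconfig` and `run` are hypothetical helpers. With `DRY_RUN=1`, the default here, the script only prints the commands so they can be reviewed before anything is written.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the four "kubectl config" steps used in 2.3.4-2.3.7.
PKI=/opt/cert/kubernetes/pki
CFG=/opt/kubernetes/cfg
SERVER=https://10.88.12.100:9443   # HAProxy frontend on the VIP
DRY_RUN=${DRY_RUN:-1}              # 1 = only print the commands

run() {
  if [[ $DRY_RUN == 1 ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# make_kubeconfig USER CERT_BASENAME KUBECONFIG_NAME
make_kubeconfig() {
  local user=$1 cert=$2 kc="$CFG/$3.kubeconfig"
  run sudo kubectl config set-cluster kubernetes \
    --certificate-authority="$PKI/ca.pem" --embed-certs=true \
    --server="$SERVER" --kubeconfig="$kc"
  run sudo kubectl config set-credentials "$user" \
    --client-certificate="$PKI/$cert.pem" --client-key="$PKI/$cert-key.pem" \
    --embed-certs=true --kubeconfig="$kc"
  run sudo kubectl config set-context "$user@kubernetes" \
    --cluster=kubernetes --user="$user" --kubeconfig="$kc"
  run sudo kubectl config use-context "$user@kubernetes" --kubeconfig="$kc"
}

# Same arguments as the manual controller-manager steps in this section:
make_kubeconfig system:kube-controller-manager controller-manager controller-manager
```

The same call covers the other components:

- scheduler: `make_kubeconfig system:kube-scheduler scheduler scheduler`
- admin: `make_kubeconfig kubernetes-admin admin admin`
- kube-proxy: `make_kubeconfig kube-proxy kube-proxy kube-proxy`

Set `DRY_RUN=0` to actually run the commands.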

Set the cluster entry:

```bash
koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cert/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://10.88.12.100:9443 \
  --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig
```

⚠️ Note: The `--server` option of the cluster entry must point to the keepalived high-availability VIP. Port 9443 is the port HAProxy listens on.

Set the context entry:

```bash
koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-context system:kube-controller-manager@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig
```

Set the user entry:

```bash
koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=/opt/cert/kubernetes/pki/controller-manager.pem \
  --client-key=/opt/cert/kubernetes/pki/controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig
```

Set the default context:

```bash
koevn@k8s-master-01:/opt/cert$ sudo kubectl config use-context system:kube-controller-manager@kubernetes \
  --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig
```

2.3.5 Generate the kube-scheduler certificate

```bash
koevn@k8s-master-01:/opt/cert$ sudo tee scheduler-csr.json > /dev/null << 'EOF'
{
  "CN": "system:kube-scheduler",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "ST": "California",
      "L": "Los Angeles",
      "O": "system:kube-scheduler",
      "OU": "Kubernetes-manual"
    }
  ]
}
EOF

koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
  -ca=/opt/cert/kubernetes/pki/ca.pem \
  -ca-key=/opt/cert/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  scheduler-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/scheduler

koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cert/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://10.88.12.100:9443 \
  --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-credentials system:kube-scheduler \
  --client-certificate=/opt/cert/kubernetes/pki/scheduler.pem \
  --client-key=/opt/cert/kubernetes/pki/scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-context system:kube-scheduler@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig

koevn@k8s-master-01:/opt/cert$ sudo kubectl config use-context system:kube-scheduler@kubernetes \
  --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig
```

2.3.6 Generate the admin certificate

```bash
koevn@k8s-master-01:/opt/cert$ sudo tee admin-csr.json > /dev/null << 'EOF'
{
  "CN": "admin",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "ST": "California",
      "L": "Los Angeles",
      "O": "system:masters",
      "OU": "Kubernetes-manual"
    }
  ]
}
EOF

koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
  -ca=/opt/cert/kubernetes/pki/ca.pem \
  -ca-key=/opt/cert/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  admin-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/admin

koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cert/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://10.88.12.100:9443 \
  --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-credentials kubernetes-admin \
  --client-certificate=/opt/cert/kubernetes/pki/admin.pem \
  --client-key=/opt/cert/kubernetes/pki/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-context kubernetes-admin@kubernetes \
  --cluster=kubernetes \
  --user=kubernetes-admin \
  --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig

koevn@k8s-master-01:/opt/cert$ sudo kubectl config use-context kubernetes-admin@kubernetes \
  --kubeconfig=/opt/kubernetes/cfg/admin.kubeconfig
```

2.3.7 Generate the kube-proxy certificate (optional)

```bash
koevn@k8s-master-01:/opt/cert$ sudo tee kube-proxy-csr.json > /dev/null << 'EOF'
{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "ST": "California",
      "L": "Los Angeles",
      "O": "system:kube-proxy",
      "OU": "Kubernetes-manual"
    }
  ]
}
EOF

koevn@k8s-master-01:/opt/cert$ sudo cfssl gencert \
  -ca=/opt/cert/kubernetes/pki/ca.pem \
  -ca-key=/opt/cert/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  kube-proxy-csr.json | sudo cfssljson -bare /opt/cert/kubernetes/pki/kube-proxy

koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cert/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://10.88.12.100:9443 \
  --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig

koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/cert/kubernetes/pki/kube-proxy.pem \
  --client-key=/opt/cert/kubernetes/pki/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig

koevn@k8s-master-01:/opt/cert$ sudo kubectl config set-context kube-proxy@kubernetes \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig

koevn@k8s-master-01:/opt/cert$ sudo kubectl config use-context kube-proxy@kubernetes \
  --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig
```

2.3.8 Create the ServiceAccount key pair

```bash
koevn@k8s-master-01:/opt/cert$ sudo openssl genrsa -out /opt/cert/kubernetes/pki/sa.key 2048
koevn@k8s-master-01:/opt/cert$ sudo openssl rsa -in /opt/cert/kubernetes/pki/sa.key \
  -pubout -out /opt/cert/kubernetes/pki/sa.pub
```

2.3.9 Check the Kubernetes component certificates

```bash
koevn@k8s-master-01:~$ ls -l /opt/cert/kubernetes/pki
-rw-r--r-- 1 root root 1025 May 20 17:11 admin.csr
-rw------- 1 root root 1679 May 20 17:11 admin-key.pem
-rw-r--r-- 1 root root 1444 May 20 17:11 admin.pem
-rw-r--r-- 1 root root 1383 May 20 16:25 apiserver.csr
-rw------- 1 root root 1675 May 20 16:25 apiserver-key.pem
-rw-r--r-- 1 root root 1777 May 20 16:25 apiserver.pem
-rw-r--r-- 1 root root 1070 May 20 11:58 ca.csr
-rw------- 1 root root 1679 May 20 11:58 ca-key.pem
-rw-r--r-- 1 root root 1363 May 20 11:58 ca.pem
-rw-r--r-- 1 root root 1082 May 20 16:38 controller-manager.csr
-rw------- 1 root root 1679 May 20 16:38 controller-manager-key.pem
-rw-r--r-- 1 root root 1501 May 20 16:38 controller-manager.pem
-rw-r--r-- 1 root root  940 May 20 16:26 front-proxy-ca.csr
-rw------- 1 root root 1675 May 20 16:26 front-proxy-ca-key.pem
-rw-r--r-- 1 root root 1094 May 20 16:26 front-proxy-ca.pem
-rw-r--r-- 1 root root  903 May 20 16:30 front-proxy-client.csr
-rw------- 1 root root 1679 May 20 16:30 front-proxy-client-key.pem
-rw-r--r-- 1 root root 1188 May 20 16:30 front-proxy-client.pem
-rw-r--r-- 1 root root 1045 May 20 18:30 kube-proxy.csr
-rw------- 1 root root 1679 May 20 18:30 kube-proxy-key.pem
-rw-r--r-- 1 root root 1464 May 20 18:30 kube-proxy.pem
-rw------- 1 root root 1704 May 21 09:21 sa.key
-rw-r--r-- 1 root root  451 May 21 09:21 sa.pub
-rw-r--r-- 1 root root 1058 May 20 17:00 scheduler.csr
-rw------- 1 root root 1679 May 20 17:00 scheduler-key.pem
-rw-r--r-- 1 root root 1476 May 20 17:00 scheduler.pem
```
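With the certificates in place, it is worth confirming that the apiserver certificate really contains every SAN passed to `-hostname` in 2.3.2. A missing VIP or Service IP only shows up later as TLS errors.

`openssl x509 -noout -ext subjectAltName -in apiserver.pem` (OpenSSL 1.1.1 or later) prints the SANs. The full `-noout -text` output works too. The sketch below compares that text with the entries you expect. `missing_sans` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# missing_sans SAN_TEXT ENTRY...
# Hypothetical helper. SAN_TEXT is openssl output containing the
# "DNS:..., IP Address:..." list. Prints every ENTRY not present as a DNS or
# IP value and returns 1 if anything is missing.
missing_sans() {
  local text=$1 have entry rc=0
  shift
  # One SAN value per line, with the "DNS:" / "IP Address:" prefix removed
  have=$(printf '%s\n' "$text" | tr ',' '\n' \
    | sed -n -E 's/^[[:space:]]*(DNS|IP Address):[[:space:]]*//p')
  for entry in "$@"; do
    if ! grep -qxF -- "$entry" <<< "$have"; then
      echo "$entry"
      rc=1
    fi
  done
  return "$rc"
}

# Example against the certificate from 2.3.2:
# sans=$(openssl x509 -noout -ext subjectAltName -in /opt/cert/kubernetes/pki/apiserver.pem)
# missing_sans "$sans" 10.90.0.1 10.88.12.100 127.0.0.1 kubernetes.default.svc.cluster.local k8s.koevn.com
```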

```bash
koevn@k8s-master-01:~$ ls /opt/cert/kubernetes/pki/ | wc -l # View the total
26
```

2.3.10 Install the Kubernetes components and distribute the certificates (all Master nodes)

koevn@k8s-master-01:~$ sudo mkdir -pv /opt/kubernetes/{bin,etc,cert,cfg}koevn@k8s-master-01:~$ sudo tree /opt/kubernetes # View file hierarchy/opt/kubernetes├── bin│ ├── kube-apiserver│ ├── kube-controller-manager│ ├── kubectl│ ├── kubelet│ ├── kube-proxy│ └── kube-scheduler├── cert│ ├── admin-key.pem│ ├── admin.pem│ ├── apiserver-key.pem│ ├── apiserver.pem│ ├── ca-key.pem│ ├── ca.pem│ ├── controller-manager-key.pem│ ├── controller-manager.pem│ ├── front-proxy-ca.pem│ ├── front-proxy-client-key.pem│ ├── front-proxy-client.pem│ ├── kube-proxy-key.pem│ ├── kube-proxy.pem│ ├── sa.key│ ├── sa.pub│ ├── scheduler-key.pem│ └── scheduler.pem├── cfg│ ├── admin.kubeconfig│ ├── bootstrap-kubelet.kubeconfig│ ├── bootstrap.secret.yaml│ ├── controller-manager.kubeconfig│ ├── kubelet.kubeconfig│ ├── kube-proxy.kubeconfig│ └── scheduler.kubeconfig├── etc│ ├── kubelet-conf.yml│ └── kube-proxy.yaml└── manifests

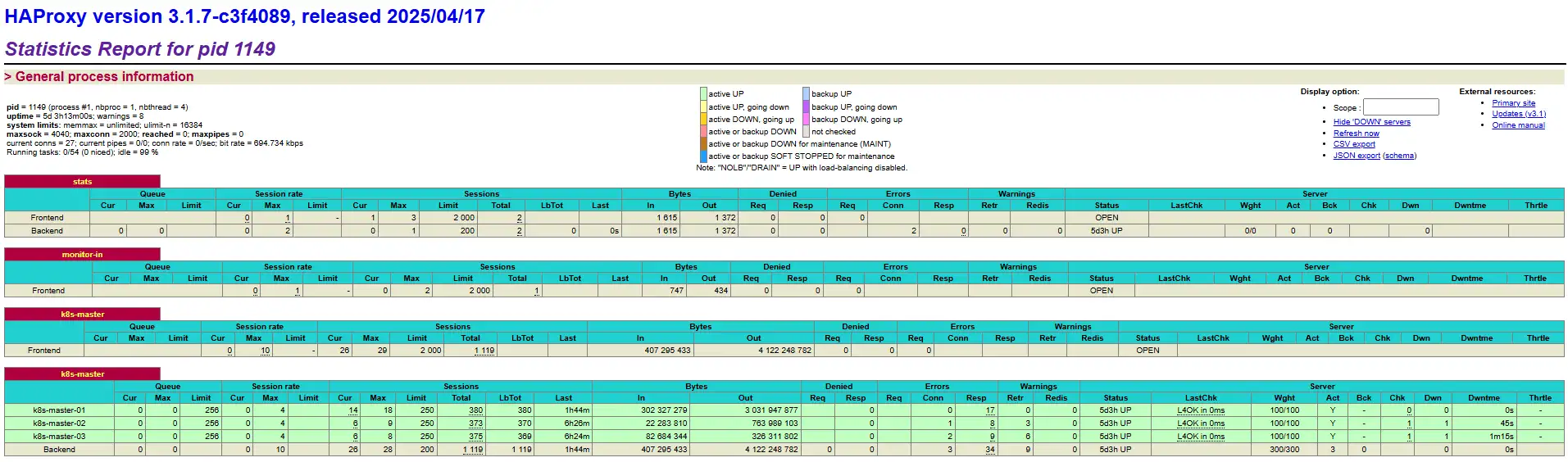

6 directories, 32 files2.4.0 Deploy Haproxy load balancing

⚠️ Note: High availability for the apiserver is normally provided by HAProxy together with Keepalived. To skip the Keepalived part, I simply add a network interface carrying the VIP address to the Master-01 node. In a production environment, use HAProxy and Keepalived together for better redundancy.

2.4.1 Install Haproxy

```bash
koevn@k8s-master-01:~$ sudo apt install haproxy
```

2.4.2 Modify the HAProxy configuration file

```bash
koevn@k8s-master-01:~$ sudo tee /etc/haproxy/haproxy.cfg > /dev/null << 'EOF'
global
  maxconn 2000
  ulimit-n 16384
  log 127.0.0.1 local0 err
  stats timeout 30s

defaults
  log global
  mode http
  option httplog
  timeout connect 5000
  timeout client 50000
  timeout server 50000
  timeout http-request 15s
  timeout http-keep-alive 15s

listen stats
  mode http
  bind 0.0.0.0:9999
  stats enable
  log global
  stats uri /haproxy-status
  stats auth admin:K123456

frontend monitor-in
  bind *:33305
  mode http
  option httplog
  monitor-uri /monitor

frontend k8s-master
  bind 0.0.0.0:9443
  bind 127.0.0.1:9443
  mode tcp
  option tcplog
  tcp-request inspect-delay 5s
  default_backend k8s-master

backend k8s-master
  mode tcp
  option tcplog
  option tcp-check
  balance roundrobin
  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
  server k8s-master-01 10.88.12.60:6443 check
  server k8s-master-02 10.88.12.61:6443 check
  server k8s-master-03 10.88.12.62:6443 check
EOF
```

Start the service:

```bash
koevn@k8s-master-01:~$ sudo systemctl daemon-reload
koevn@k8s-master-01:~$ sudo systemctl enable --now haproxy.service
# apt already started HAProxy with its default config, so restart it to load the new one
koevn@k8s-master-01:~$ sudo systemctl restart haproxy.service
```

Open http://10.88.12.100:9999/haproxy-status in a browser to view the HAProxy status.
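When Masters are added or removed later, the `server` lines in `backend k8s-master` must be updated to match. They can be generated from the same host list. The sketch below prints lines in the format used above; `render_backend_servers` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# render_backend_servers PORT NAME=IP...
# Hypothetical helper: prints haproxy "server" lines for backend k8s-master.
render_backend_servers() {
  local port=$1 pair
  shift
  for pair in "$@"; do
    printf '  server %s %s:%s check\n' "${pair%%=*}" "${pair#*=}" "$port"
  done
}

render_backend_servers 6443 \
  k8s-master-01=10.88.12.60 k8s-master-02=10.88.12.61 k8s-master-03=10.88.12.62
```

After editing `/etc/haproxy/haproxy.cfg`, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the file's syntax before you restart the service.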

2.5.0 Configure apiserver service (all Master nodes)

Create the kube-apiserver service unit.

On each Master node, change `--advertise-address` to that node's own IP. Set `--service-cluster-ip-range` to your Service network. systemd only recognizes comments on lines of their own, so these notes are kept out of the `ExecStart` block.

`--requestheader-allowed-names` must match the CN of the front-proxy client certificate created in 2.3.3 (`front-proxy-client`). Otherwise, aggregated API servers reject the proxied requests.

```bash
koevn@k8s-master-01:~$ sudo tee /etc/systemd/system/kube-apiserver.service > /dev/null << 'EOF'
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-apiserver \
  --v=2 \
  --allow-privileged=true \
  --bind-address=0.0.0.0 \
  --secure-port=6443 \
  --advertise-address=10.88.12.60 \
  --service-cluster-ip-range=10.90.0.0/16 \
  --service-node-port-range=30000-32767 \
  --etcd-servers=https://10.88.12.60:2379,https://10.88.12.61:2379,https://10.88.12.62:2379 \
  --etcd-cafile=/opt/etcd/cert/etcd-ca.pem \
  --etcd-certfile=/opt/etcd/cert/etcd.pem \
  --etcd-keyfile=/opt/etcd/cert/etcd-key.pem \
  --client-ca-file=/opt/kubernetes/cert/ca.pem \
  --tls-cert-file=/opt/kubernetes/cert/apiserver.pem \
  --tls-private-key-file=/opt/kubernetes/cert/apiserver-key.pem \
  --kubelet-client-certificate=/opt/kubernetes/cert/apiserver.pem \
  --kubelet-client-key=/opt/kubernetes/cert/apiserver-key.pem \
  --service-account-key-file=/opt/kubernetes/cert/sa.pub \
  --service-account-signing-key-file=/opt/kubernetes/cert/sa.key \
  --service-account-issuer=https://kubernetes.default.svc.cluster.local \
  --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
  --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
  --authorization-mode=Node,RBAC \
  --enable-bootstrap-token-auth=true \
  --requestheader-client-ca-file=/opt/kubernetes/cert/front-proxy-ca.pem \
  --proxy-client-cert-file=/opt/kubernetes/cert/front-proxy-client.pem \
  --proxy-client-key-file=/opt/kubernetes/cert/front-proxy-client-key.pem \
  --requestheader-allowed-names=front-proxy-client \
  --requestheader-group-headers=X-Remote-Group \
  --requestheader-extra-headers-prefix=X-Remote-Extra- \
  --requestheader-username-headers=X-Remote-User \
  --enable-aggregator-routing=true
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF
```

Reload systemd:

```bash
koevn@k8s-master-01:~$ sudo systemctl daemon-reload
```

Start kube-apiserver:

```bash
koevn@k8s-master-01:~$ sudo systemctl enable --now kube-apiserver.service
```

Check the kube-apiserver service status:

```bash
koevn@k8s-master-01:~$ sudo systemctl status kube-apiserver.service
```

2.6.0 Configure the kube-controller-manager service (all Master nodes)

Create the kube-controller-manager service unit.

Adjust `--service-cluster-ip-range` (Service network) and `--cluster-cidr` (Pod network) to your own networks. `--node-cidr-mask-size-ipv4=24` gives each node a /24 Pod subnet.

```bash
koevn@k8s-master-01:~$ sudo tee /etc/systemd/system/kube-controller-manager.service > /dev/null << 'EOF'
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-controller-manager \
  --v=2 \
  --root-ca-file=/opt/kubernetes/cert/ca.pem \
  --cluster-signing-cert-file=/opt/kubernetes/cert/ca.pem \
  --cluster-signing-key-file=/opt/kubernetes/cert/ca-key.pem \
  --service-account-private-key-file=/opt/kubernetes/cert/sa.key \
  --kubeconfig=/opt/kubernetes/cfg/controller-manager.kubeconfig \
  --leader-elect=true \
  --use-service-account-credentials=true \
  --node-monitor-grace-period=40s \
  --node-monitor-period=5s \
  --controllers=*,bootstrapsigner,tokencleaner \
  --allocate-node-cidrs=true \
  --service-cluster-ip-range=10.90.0.0/16 \
  --cluster-cidr=10.100.0.0/16 \
  --node-cidr-mask-size-ipv4=24 \
  --requestheader-client-ca-file=/opt/kubernetes/cert/front-proxy-ca.pem
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF
```

Reload systemd:

```bash
koevn@k8s-master-01:~$ sudo systemctl daemon-reload
```

Start kube-controller-manager:

```bash
koevn@k8s-master-01:~$ sudo systemctl enable --now kube-controller-manager.service
```

Check the kube-controller-manager service status:

```bash
koevn@k8s-master-01:~$ sudo systemctl status kube-controller-manager.service
```

2.7.0 Configure the kube-scheduler service (all Master nodes)

Create the kube-scheduler service unit and start the service:

```bash
koevn@k8s-master-01:~$ sudo tee /usr/lib/systemd/system/kube-scheduler.service > /dev/null << 'EOF'
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-scheduler \
  --v=2 \
  --bind-address=0.0.0.0 \
  --leader-elect=true \
  --kubeconfig=/opt/kubernetes/cfg/scheduler.kubeconfig
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF
```

Reload systemd:

```bash
koevn@k8s-master-01:~$ sudo systemctl daemon-reload
```

Start kube-scheduler:

```bash
koevn@k8s-master-01:~$ sudo systemctl enable --now kube-scheduler.service
```

Check the kube-scheduler service status:

```bash
koevn@k8s-master-01:~$ sudo systemctl status kube-scheduler.service
```

2.8.0 TLS Bootstrapping Configuration

Create the cluster entry:

```bash
koevn@k8s-master-01:~$ sudo kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cert/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://10.88.12.100:9443 \
  --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig
```

Create a token:

```bash
koevn@k8s-master-01:~$ echo "$(head -c 6 /dev/urandom | md5sum | head -c 6)"."$(head -c 16 /dev/urandom | md5sum | head -c 16)"
79b841.0677456fb3b47289
```

Set the credentials:

```bash
koevn@k8s-master-01:~$ sudo kubectl config set-credentials tls-bootstrap-token-user \
  --token=79b841.0677456fb3b47289 \
  --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig
```

Set the context:

```bash
koevn@k8s-master-01:~$ sudo kubectl config set-context tls-bootstrap-token-user@kubernetes \
  --cluster=kubernetes \
  --user=tls-bootstrap-token-user \
  --kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig
```
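The token has to satisfy three constraints:

- It must match `[a-z0-9]{6}.[a-z0-9]{16}`. The md5sum pipeline above yields lowercase hex, which fits.
- The Secret that carries it must be named `bootstrap-token-<token-id>` in `kube-system`.
- The token ID and secret must be the same values as in `bootstrap-kubelet.kubeconfig`.

The sketch below generates a token, validates its shape, and prints the fields used in `bootstrap.secret.yaml`. It uses `od` instead of the md5sum pipeline; this is a swapped-in method, and both produce lowercase hex. All function names are hypothetical.

```bash
#!/usr/bin/env bash
# gen_bootstrap_token -> "<token-id>.<token-secret>" (6 + 16 lowercase hex chars)
gen_bootstrap_token() {
  local id secret
  id=$(od -An -N3 -tx1 /dev/urandom | tr -d ' \n')      # 3 bytes -> 6 hex chars
  secret=$(od -An -N8 -tx1 /dev/urandom | tr -d ' \n')  # 8 bytes -> 16 hex chars
  printf '%s.%s\n' "$id" "$secret"
}

# valid_bootstrap_token TOKEN -> exit status 0 if TOKEN has the required shape
valid_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# secret_fields TOKEN -> values that must appear in bootstrap.secret.yaml
secret_fields() {
  echo "name: bootstrap-token-${1%%.*}"
  echo "token-id: ${1%%.*}"
  echo "token-secret: ${1#*.}"
}

token=$(gen_bootstrap_token)
valid_bootstrap_token "$token" && secret_fields "$token"
```

For the token used in this article, `secret_fields 79b841.0677456fb3b47289` prints the name `bootstrap-token-79b841` and the two fields that appear in the Secret below.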

koevn@k8s-master-01:~$ sudo kubectl config use-context tls-bootstrap-token-user@kubernetes \--kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfigConfigure the current user to manage the cluster

mkdir -pv /home/koevn/.kube && cp /opt/kubernetes/cfg/admin.kubeconfig /home/koevn/.kube/configCheck the cluster status

koevn@k8s-master-01:~$ sudo kubectl get cs # View the health status of core components of the Kubernetes control planeWarning: v1 ComponentStatus is deprecated in v1.19+NAME STATUS MESSAGE ERRORetcd-0 Healthy okcontroller-manager Healthy okscheduler Healthy okApply bootstrap-token

koevn@k8s-master-01:~$ sudo cat > bootstrap.secret.yaml << EOFapiVersion: v1kind: Secretmetadata: name: bootstrap-token-79b841 namespace: kube-systemtype: bootstrap.kubernetes.io/tokenstringData: description: "The default bootstrap token generated by 'kubelet '." token-id: 79b841 token-secret: 0677456fb3b47289 usage-bootstrap-authentication: "true" usage-bootstrap-signing: "true" auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress

---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRoleBindingmetadata: name: kubelet-bootstraproleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:node-bootstrappersubjects:- apiGroup: rbac.authorization.k8s.io kind: Group name: system:bootstrappers:default-node-token---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRoleBindingmetadata: name: node-autoapprove-bootstraproleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:certificates.k8s.io:certificatesigningrequests:nodeclientsubjects:- apiGroup: rbac.authorization.k8s.io kind: Group name: system:bootstrappers:default-node-token---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRoleBindingmetadata: name: node-autoapprove-certificate-rotationroleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclientsubjects:- apiGroup: rbac.authorization.k8s.io kind: Group name: system:nodes---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRolemetadata: annotations: rbac.authorization.kubernetes.io/autoupdate: "true" labels: kubernetes.io/bootstrapping: rbac-defaults name: system:kube-apiserver-to-kubeletrules: - apiGroups: - "" resources: - nodes/proxy - nodes/stats - nodes/log - nodes/spec - nodes/metrics verbs: - "*"---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRoleBindingmetadata: name: system:kube-apiserver namespace: ""roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: system:kube-apiserver-to-kubeletsubjects: - apiGroup: rbac.authorization.k8s.io kind: User name: kube-apiserverCreate bootstrap.secret configuration to the cluster

```shell
kubectl create -f bootstrap.secret.yaml
```

3. Install the basic components on the Kubernetes Worker nodes

3.1.0 Required installation directory structure

```shell
koevn@k8s-worker-01:~$ sudo mkdir -pv /opt/kubernetes/{bin,cert,cfg,etc,manifests}
koevn@k8s-worker-01:~$ sudo tree /opt/kubernetes/   # View the directory structure
/opt/kubernetes/
├── bin
│   ├── kubectl
│   ├── kubelet
│   └── kube-proxy
├── cert              # Certificate files copied from the master node
│   ├── ca.pem
│   ├── front-proxy-ca.pem
│   ├── kube-proxy-key.pem
│   └── kube-proxy.pem
├── cfg               # kubeconfig files copied from the master node
│   ├── bootstrap-kubelet.kubeconfig
│   ├── kubelet.kubeconfig
│   └── kube-proxy.kubeconfig
├── etc
│   ├── kubelet-conf.yml
│   └── kube-proxy.yaml
└── manifests

6 directories, 12 files
```

Kubernetes Worker nodes only need containerd and kubelet. kube-proxy is optional here; install and deploy it only if you need it. `kubelet.kubeconfig` does not have to be copied: kubelet writes it to the `--kubeconfig` path itself once TLS bootstrapping with `bootstrap-kubelet.kubeconfig` succeeds.
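Before starting kubelet on a node, it can help to confirm that the files from the layout above are actually in place. The following is a minimal sketch: `check_layout` is a hypothetical helper, its file list simply mirrors the tree above, and `kubelet.kubeconfig` is left out because kubelet generates it during TLS bootstrapping. Drop the kube-proxy entries if you do not deploy kube-proxy.

```shell
# Hypothetical helper: report which files from the worker layout above are missing.
# Usage: check_layout [PREFIX]   (PREFIX defaults to /opt/kubernetes)
# The return code is the number of missing entries (0 means everything is present).
check_layout() {
  local prefix="${1:-/opt/kubernetes}"
  local missing=0
  local f
  # cfg/kubelet.kubeconfig is not listed: kubelet writes it itself after TLS bootstrapping.
  for f in bin/kubelet bin/kubectl bin/kube-proxy \
           cert/ca.pem cert/front-proxy-ca.pem cert/kube-proxy.pem cert/kube-proxy-key.pem \
           cfg/bootstrap-kubelet.kubeconfig cfg/kube-proxy.kubeconfig \
           etc/kubelet-conf.yml etc/kube-proxy.yaml; do
    if [ ! -e "$prefix/$f" ]; then
      echo "missing: $prefix/$f"
      missing=$((missing + 1))
    fi
  done
  # staticPodPath in kubelet-conf.yml points at this directory
  if [ ! -d "$prefix/manifests" ]; then
    echo "missing directory: $prefix/manifests"
    missing=$((missing + 1))
  fi
  return "$missing"
}
```

For example, run `check_layout || echo "copy the missing files first"` on each node before enabling kubelet.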

3.2.0 Deploy kubelet service

kubelet must run on every node in the cluster, the Master nodes included. The steps below use k8s-worker-01 as the example; repeat them on each of the other nodes. Add the kubelet service file:

```shell
koevn@k8s-worker-01:~$ sudo tee /usr/lib/systemd/system/kubelet.service > /dev/null << 'EOF'
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=network-online.target firewalld.service containerd.service
Wants=network-online.target
Requires=containerd.service

[Service]
ExecStart=/opt/kubernetes/bin/kubelet \
    --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap-kubelet.kubeconfig \
    --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
    --config=/opt/kubernetes/etc/kubelet-conf.yml \
    --container-runtime-endpoint=unix:///run/containerd/containerd.sock \
    --node-labels=node.kubernetes.io/node=

[Install]
WantedBy=multi-user.target
EOF
```

Create the kubelet configuration file (its path must match `--config` in the unit above):

```shell
koevn@k8s-worker-01:~$ sudo tee /opt/kubernetes/etc/kubelet-conf.yml > /dev/null << 'EOF'
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/cert/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.90.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /opt/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s
EOF
```

Start kubelet:

```shell
koevn@k8s-worker-01:~$ sudo systemctl daemon-reload                  # Reload the systemd unit files
koevn@k8s-worker-01:~$ sudo systemctl enable --now kubelet.service   # Enable and immediately start kubelet.service
koevn@k8s-worker-01:~$ sudo systemctl status kubelet.service         # Show the current status of kubelet.service
```

3.3.0 Check the status of cluster nodes

```shell
koevn@k8s-master-01:~$ sudo kubectl get node
NAME            STATUS     ROLES    AGE   VERSION
k8s-master-01   NotReady   <none>   50s   v1.33.0
k8s-master-02   NotReady   <none>   47s   v1.33.0
k8s-master-03   NotReady   <none>   40s   v1.33.0
k8s-worker-01   NotReady   <none>   35s   v1.33.0
k8s-worker-02   NotReady   <none>   28s   v1.33.0
k8s-worker-03   NotReady   <none>   11s   v1.33.0
```

No CNI network plug-in is installed in the cluster yet, so it is normal for every node to show `NotReady` in the STATUS column. The nodes switch to `Ready` only once the CNI plug-in is running without errors.
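Once the CNI plug-in is running, a small awk filter over `kubectl get node` output shows which nodes are still waiting. This is a sketch: `not_ready_nodes` is a hypothetical helper, and it assumes the default column order (NAME first, STATUS second).

```shell
# Hypothetical helper: print the names of nodes whose STATUS is not exactly "Ready".
# Reads `kubectl get node` output on stdin and skips the header line.
# Cordoned nodes report "Ready,SchedulingDisabled" and are therefore listed as well.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

For example, `sudo kubectl get node | not_ready_nodes`; an empty result means every node reports `Ready`.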

3.4.0 View the Runtime version information of the cluster nodes

```shell
koevn@k8s-master-01:~$ sudo kubectl describe node | grep Runtime
  Container Runtime Version:  containerd://2.1.0
  Container Runtime Version:  containerd://2.1.0
  Container Runtime Version:  containerd://2.1.0
  Container Runtime Version:  containerd://2.1.0
  Container Runtime Version:  containerd://2.1.0
  Container Runtime Version:  containerd://2.1.0
```

3.5.0 Deploy the kube-proxy service (optional)

If you deploy kube-proxy, it also runs on every node in the cluster; the steps below use k8s-worker-01 as the example, so repeat them on each of the other nodes. Add the kube-proxy service file:

```shell
koevn@k8s-worker-01:~$ sudo tee /usr/lib/systemd/system/kube-proxy.service > /dev/null << 'EOF'
[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-proxy \
    --config=/opt/kubernetes/etc/kube-proxy.yaml \
    --cluster-cidr=10.100.0.0/16 \
    --v=2
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF
```

Create the kube-proxy configuration file (its path must match `--config` in the unit above):

```shell
koevn@k8s-worker-01:~$ sudo tee /opt/kubernetes/etc/kube-proxy.yaml > /dev/null << 'EOF'
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 10.100.0.0/16
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms
EOF
```

Start kube-proxy:

```shell
koevn@k8s-worker-01:~$ sudo systemctl daemon-reload                     # Reload the systemd unit files
koevn@k8s-worker-01:~$ sudo systemctl enable --now kube-proxy.service   # Enable and immediately start kube-proxy.service
koevn@k8s-worker-01:~$ sudo systemctl status kube-proxy.service         # Show the current status of kube-proxy.service
```

4. Install the cluster network plug-in

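⚠️ Note: The network plug-in installed here is Cilium, which takes over the Kubernetes cluster network, including the Service handling kube-proxy would otherwise do. If you installed kube-proxy, stop it with `systemctl stop kube-proxy.service` so that it does not interfere with Cilium, and disable it with `systemctl disable kube-proxy.service` so that it does not start again at the next boot. Run the Cilium deployment from any one of the Master nodes.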
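Stopping the kube-proxy service does not remove the iptables and IPVS rules it has already programmed. kube-proxy's `--cleanup` flag removes them and then exits, and the remaining `KUBE-*` chains can be listed from `iptables-save` output to check the result. The helper below is a hypothetical sketch. kubelet also creates a few `KUBE-*` chains of its own (for example `KUBE-KUBELET-CANARY`), so inspect the names rather than expecting an empty list.

```shell
# Hypothetical helper: list the distinct KUBE-* chain names in `iptables-save` output read from stdin.
# Chain declarations in that output look like ":KUBE-SERVICES - [0:0]".
list_kube_chains() {
  # Split on ':' and ' ' so that field 2 is the chain name
  awk -F'[: ]' '/^:KUBE-/ { print $2 }' | sort -u
}
```

For example, run `sudo iptables-save | list_kube_chains` on each node; because kube-proxy ran in IPVS mode, `sudo ipvsadm -Ln` additionally shows whether any virtual servers are left.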

4.1.0 Install Helm

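```shell
koevn@k8s-master-01:~$ sudo curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
koevn@k8s-master-01:~$ sudo chmod 700 get_helm.sh
koevn@k8s-master-01:~$ sudo ./get_helm.sh
```

`get_helm.sh` downloads the latest Helm 3 release and installs the `helm` binary into `/usr/local/bin` by itself. Alternatively, install Helm by hand from a downloaded `helm-*-linux-amd64.tar.gz` release archive:

```shell
koevn@k8s-master-01:~$ sudo tar xvf helm-*-linux-amd64.tar.gz
koevn@k8s-master-01:~$ sudo cp linux-amd64/helm /usr/local/bin/
```

4.2.0 Install Cilium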

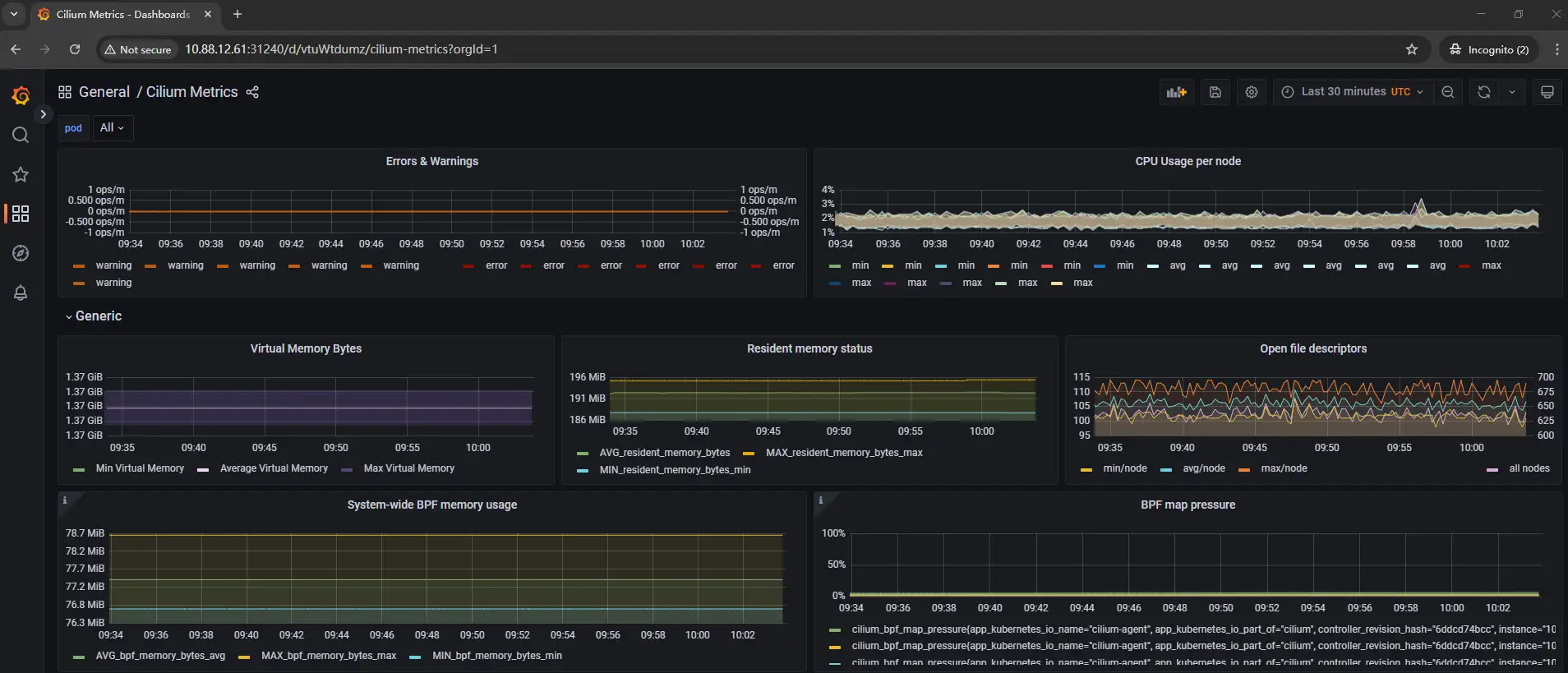

```shell
koevn@k8s-master-01:~$ sudo helm repo add cilium https://helm.cilium.io     # Add the chart repository
koevn@k8s-master-01:~$ sudo helm search repo cilium/cilium --versions    # List the available versions
koevn@k8s-master-01:~$ sudo helm pull cilium/cilium                      # Download the cilium chart package
koevn@k8s-master-01:~$ sudo tar xvf cilium-*.tgz
```

Edit the following settings in `cilium/values.yaml` (the unpacked chart directory). Note that `cd` is a shell built-in and cannot be run through `sudo`:

```yaml
# ------ Other configurations omitted ------#

kubeProxyReplacement: "true"                              # Uncomment and change to true
k8sServiceHost: "kubernetes.default.svc.cluster.local"    # Must be included in the apiserver certificate you generated and resolvable on every node
k8sServicePort: "9443"                                    # apiserver port
ipv4NativeRoutingCIDR: "10.100.0.0/16"                    # Pod IPv4 network
hubble:                                                   # Enable specific Hubble metrics
  metrics:
    enabled:
    - dns
    - drop
    - tcp
    - flow
    - icmp
    - httpV2

# ------ Other configurations omitted ------#
```

⚠️ Note: If your network has poor access to public image registries, consider deploying a private Harbor registry and pointing the `repository` parameters in this and all the other values files at it. Otherwise containerd on the cluster nodes may fail to pull images, and the Pods will not start.
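With kube-proxy replacement enabled, the Cilium agents have to reach the API server before CoreDNS (section 6) exists and without kube-proxy's Service rules, so the `k8sServiceHost` name has to resolve on each node by itself. The values above do not show how this cluster resolves it. Assuming it is bound in `/etc/hosts` to an address where the apiserver listens (for example the VIP 10.88.12.100), the hypothetical helper below checks the entry on a node.

```shell
# Hypothetical helper: print the address bound to NAME in a hosts file (default /etc/hosts).
# Returns non-zero if NAME is not listed. Text after '#' is treated as a comment.
hosts_lookup() {
  local name="$1" file="${2:-/etc/hosts}"
  awk -v n="$name" '
    { sub(/#.*/, "") }                                                  # strip comments
    { for (i = 2; i <= NF; i++) if ($i == n) { print $1; found = 1; exit } }
    END { exit !found }                                                 # 0 if found, 1 otherwise
  ' "$file"
}
```

For example: `hosts_lookup kubernetes.default.svc.cluster.local || echo "no /etc/hosts entry on this node"`.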

Other features are enabled with the following parameters:

- Enable the Hubble Relay aggregation service: `hubble.relay.enabled=true`
- Enable the Hubble UI frontend (visualization page): `hubble.ui.enabled=true`
- Enable Prometheus metrics output for Cilium: `prometheus.enabled=true`
- Enable Prometheus metrics output for the Operator: `operator.prometheus.enabled=true`
- Enable Hubble: `hubble.enabled=true`

The list above is a flattened view of the `values.yaml` hierarchy: `hubble.relay.enabled=true`, for example, is `enabled: true` under `relay:` under `hubble:`. Instead of editing the file, you can also pass a setting on the command line with `--set hubble.relay.enabled=true`, either to Helm or to the cilium-cli command.
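Collecting the switches in one place keeps a long `--set` command reviewable. The bash sketch below turns each setting from the list above into a `--set` pair and only prints the resulting Helm command, so drop the `echo` to run it. The array names are arbitrary, and `./cilium` assumes you are in the directory where the chart was unpacked. Values given with `--set` take precedence over those in files passed with `-f`.

```shell
# Feature switches from the list above, in values.yaml dotted-path form
cilium_sets=(
  hubble.relay.enabled=true
  hubble.ui.enabled=true
  prometheus.enabled=true
  operator.prometheus.enabled=true
  hubble.enabled=true
)

# Turn each entry into a "--set key=value" argument pair
set_args=()
for kv in "${cilium_sets[@]}"; do
  set_args+=(--set "$kv")
done

# Print the command for review (remove "echo" to actually run it)
echo helm upgrade --install cilium ./cilium --namespace kube-system "${set_args[@]}"
```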

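Install Cilium. Use `helm upgrade --install` so the command also works when no `cilium` release exists yet (a plain `helm upgrade` fails in that case):

```shell
koevn@k8s-master-01:~$ sudo helm upgrade --install cilium ./cilium \
    --namespace kube-system \
    --create-namespace \
    -f cilium/values.yaml
```

Check the status: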

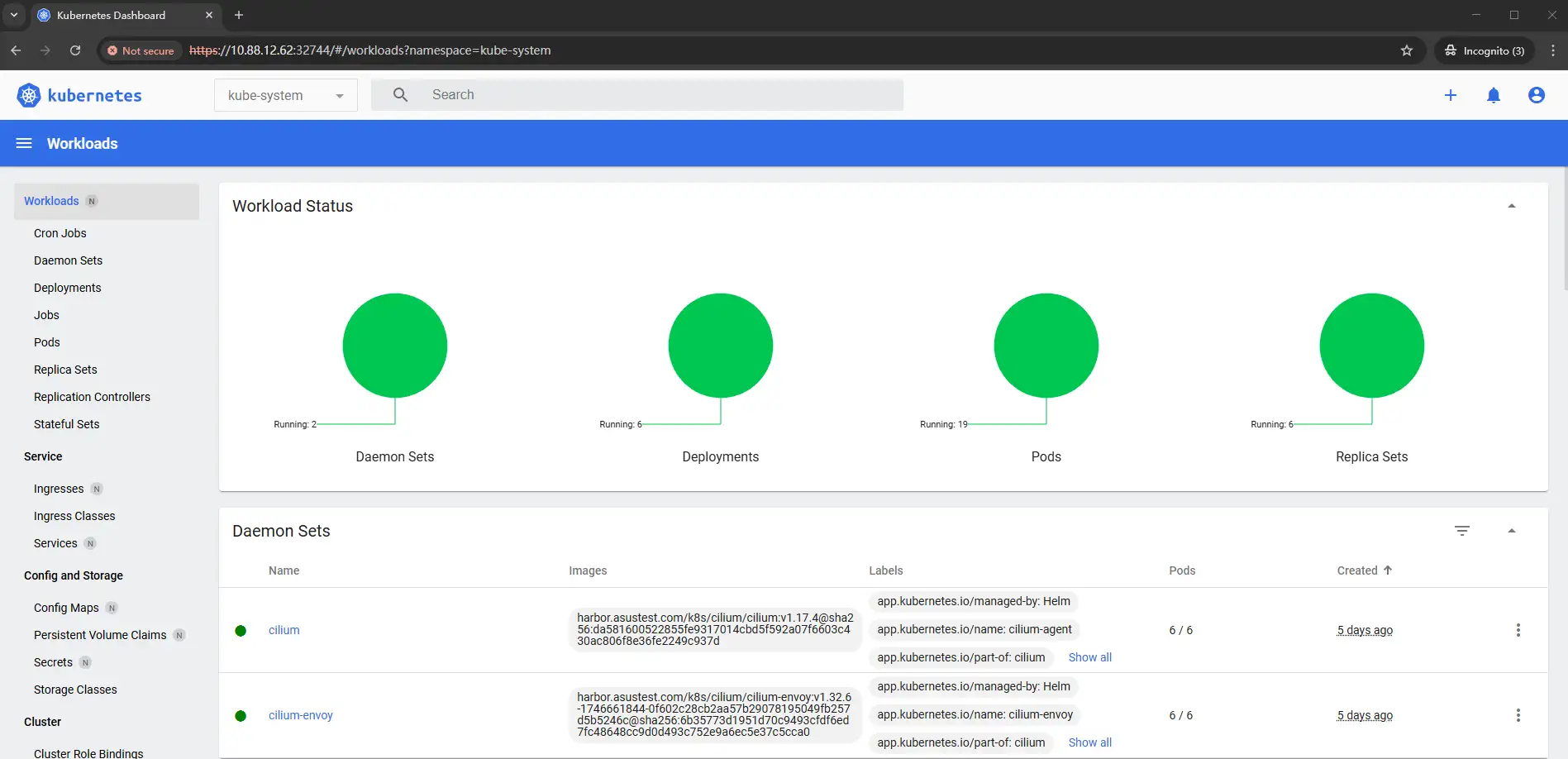

```shell
koevn@k8s-master-01:~$ sudo kubectl get pod -A | grep cil
cilium-monitoring   grafana-679cd8bff-w9xm9            1/1   Running   0              5d
cilium-monitoring   prometheus-87d4f66f7-6lmrz         1/1   Running   0              5d
kube-system         cilium-envoy-4sjq5                 1/1   Running   0              5d
kube-system         cilium-envoy-64694                 1/1   Running   0              5d
kube-system         cilium-envoy-fzjjw                 1/1   Running   0              5d
kube-system         cilium-envoy-twtw6                 1/1   Running   0              5d
kube-system         cilium-envoy-vwstr                 1/1   Running   0              5d
kube-system         cilium-envoy-whck6                 1/1   Running   0              5d
kube-system         cilium-fkjcm                       1/1   Running   0              5d
kube-system         cilium-h75vq                       1/1   Running   0              5d
kube-system         cilium-hcx4q                       1/1   Running   0              5d
kube-system         cilium-jz44w                       1/1   Running   0              5d
kube-system         cilium-operator-58d8755c44-hnwmd   1/1   Running   57 (84m ago)   5d
kube-system         cilium-operator-58d8755c44-xmg9f   1/1   Running   54 (82m ago)   5d
kube-system         cilium-qx5mn                       1/1   Running   0              5d
kube-system         cilium-wqmzc                       1/1   Running   0              5d
```

5. Deploy the monitoring panels

5.1.0 Creating a monitoring service

```shell
koevn@k8s-master-01:~$ sudo wget https://raw.githubusercontent.com/cilium/cilium/1.12.1/examples/kubernetes/addons/prometheus/monitoring-example.yaml
koevn@k8s-master-01:~$ sudo kubectl apply -f monitoring-example.yaml
```

5.2.0 Change the Service type to NodePort

View Current Services

```shell
koevn@k8s-master-01:~$ sudo kubectl get svc -A
NAMESPACE           NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
cilium-monitoring   grafana          ClusterIP   10.90.138.192   <none>        3000/TCP   1d
cilium-monitoring   prometheus       ClusterIP   10.90.145.89    <none>        9090/TCP   1d
default             kubernetes       ClusterIP   10.90.0.1       <none>        443/TCP    1d
kube-system         cilium-envoy     ClusterIP   None            <none>        9964/TCP   1d
kube-system         coredns          ClusterIP   10.90.0.10      <none>        53/UDP     1d
kube-system         hubble-metrics   ClusterIP   None            <none>        9965/TCP   1d
kube-system         hubble-peer      ClusterIP   10.90.63.177    <none>        443/TCP    1d
kube-system         hubble-relay     ClusterIP   10.90.157.4     <none>        80/TCP     1d
kube-system         hubble-ui        ClusterIP   10.90.13.110    <none>        80/TCP     1d
kube-system         metrics-server   ClusterIP   10.90.61.226    <none>        443/TCP    1d
```

Change the Service type:

```shell
koevn@k8s-master-01:~$ sudo kubectl edit svc -n cilium-monitoring grafana
koevn@k8s-master-01:~$ sudo kubectl edit svc -n cilium-monitoring prometheus
koevn@k8s-master-01:~$ sudo kubectl edit svc -n kube-system hubble-ui
```

Each command opens the Service definition as YAML in an editor. Change `type: ClusterIP` to `type: NodePort` and save. To undo the change later, set it back to `type: ClusterIP`.
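The same change can be made without an interactive editor by patching `spec.type` with `kubectl patch`. The bash loop below is a sketch for the three Services edited above. `DRY_RUN` is a hypothetical switch of this snippet (not a kubectl option): by default it only prints the shell-quoted commands, and `DRY_RUN=0` runs them.

```shell
# Services to expose, as namespace/name pairs (the three edited above)
services=(cilium-monitoring/grafana cilium-monitoring/prometheus kube-system/hubble-ui)

for svc in "${services[@]}"; do
  ns="${svc%%/*}"     # part before the slash: namespace
  name="${svc#*/}"    # part after the slash: Service name
  cmd=(kubectl -n "$ns" patch svc "$name" -p '{"spec":{"type":"NodePort"}}')
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '%q ' "${cmd[@]}"; printf '\n'   # default: show the command, quoted for copy-paste
  else
    sudo "${cmd[@]}"
  fi
done
```

To revert, use `{"spec":{"type":"ClusterIP"}}` as the patch.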

View the current Services again

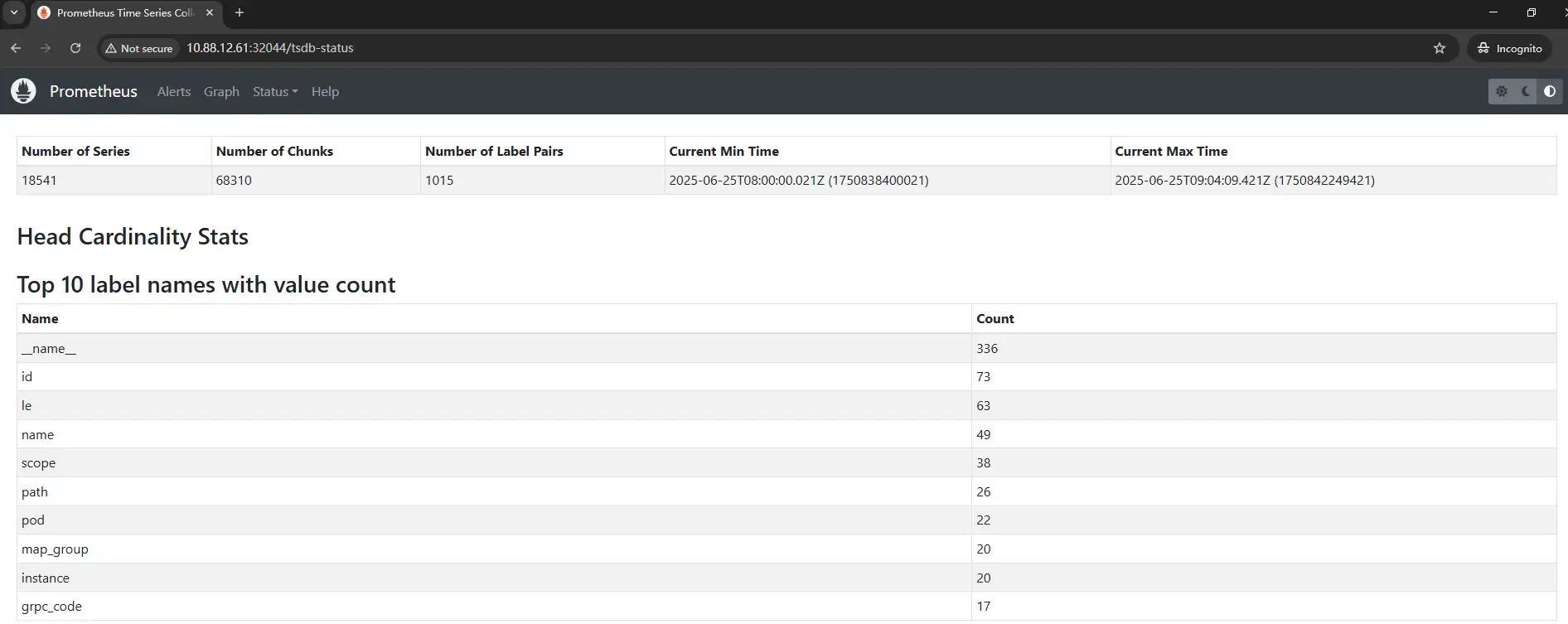

```
NAMESPACE           NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
cilium-monitoring   grafana          NodePort    10.90.138.192   <none>        3000:31240/TCP   1d
cilium-monitoring   prometheus       NodePort    10.90.145.89    <none>        9090:32044/TCP   1d
default             kubernetes       ClusterIP   10.90.0.1       <none>        443/TCP          1d
kube-system         cilium-envoy     ClusterIP   None            <none>        9964/TCP         1d
kube-system         coredns          ClusterIP   10.90.0.10      <none>        53/UDP           1d
kube-system         hubble-metrics   ClusterIP   None            <none>        9965/TCP         1d
kube-system         hubble-peer      ClusterIP   10.90.63.177    <none>        443/TCP          1d
kube-system         hubble-relay     ClusterIP   10.90.157.4     <none>        80/TCP           1d
kube-system         hubble-ui        NodePort    10.90.13.110    <none>        80:32166/TCP     1d
kube-system         metrics-server   ClusterIP   10.90.61.226    <none>        443/TCP          1d
```

Open http://10.88.12.61:31240 in a browser to reach the Grafana service.

Open http://10.88.12.61:32044 in a browser to reach the Prometheus service.

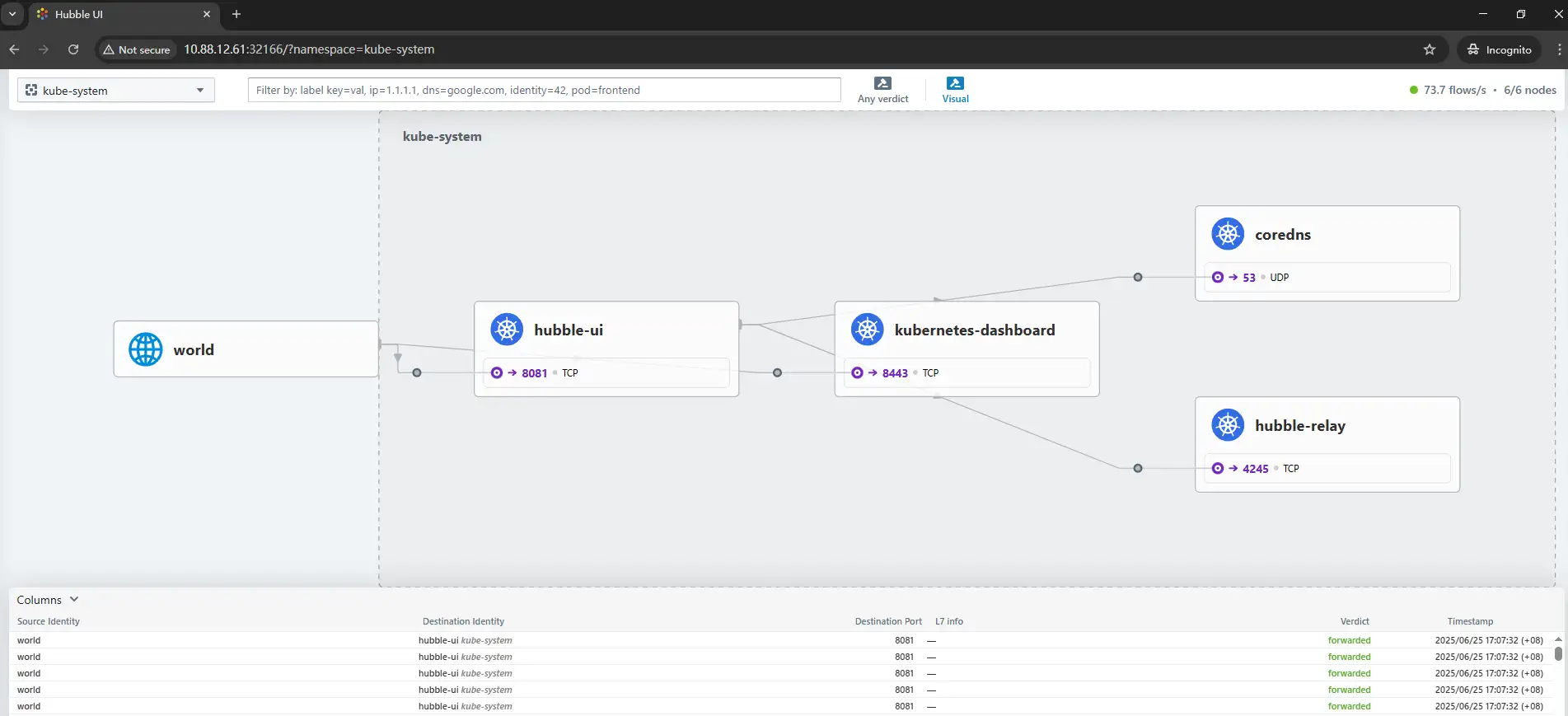

Open http://10.88.12.61:32166 in a browser to reach the Hubble UI service.
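The node ports can also be read out of the `kubectl get svc -A` output rather than by eye. The hypothetical `nodeport_urls` helper below takes the number after the colon in the PORT(S) column and pairs it with a node address you pass in (10.88.12.61 above; a NodePort is normally open on every node). It assumes the default `-A` column order, builds plain `http://` URLs, and reports only the first port of multi-port Services.

```shell
# Hypothetical helper: print "namespace/name http://NODE_IP:PORT" for every NodePort Service.
# Reads `kubectl get svc -A` output on stdin; column 6 is PORT(S), e.g. "3000:31240/TCP".
nodeport_urls() {
  local node_ip="$1"
  awk -v ip="$node_ip" 'NR > 1 && $3 == "NodePort" {
    split($6, parts, "[:/]")            # "3000:31240/TCP" -> 3000, 31240, TCP
    print $1 "/" $2, "http://" ip ":" parts[2]
  }'
}
```

For example: `sudo kubectl get svc -A | nodeport_urls 10.88.12.61`.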

6. Install CoreDNS
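Two constraints apply to the CoreDNS Service IP set in the values file below: it must equal `clusterDNS` (10.90.0.10) in kubelet-conf.yml, because that is the nameserver kubelet hands to Pods, and it must lie inside the Service CIDR 10.90.0.0/16 from the network table, or the API server rejects the Service. The bash sketch below checks the second condition; `ip_to_int` and `ip_in_cidr` are hypothetical helpers and handle IPv4 only.

```shell
# Hypothetical helper (bash): convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"            # split "10.90.0.10" on dots
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper (bash): succeed if IPv4 address $1 lies inside CIDR $2, e.g. 10.90.0.0/16.
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  # Network mask with the top $bits bits set (a /0 mask becomes 0)
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example, `ip_in_cidr 10.90.0.10 10.90.0.0/16 && echo ok` prints `ok`.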

6.1.0 Pull the CoreDNS installation package

```shell
koevn@k8s-master-01:~$ sudo helm repo add coredns https://coredns.github.io/helm
koevn@k8s-master-01:~$ sudo helm pull coredns/coredns
koevn@k8s-master-01:~$ sudo tar xvf coredns-*.tgz
koevn@k8s-master-01:~$ cd coredns/
```

Modify the `values.yaml` file:

```shell
koevn@k8s-master-01:~/coredns$ sudo vim values.yaml
```

```yaml
service:
  clusterIP: "10.90.0.10"   # Uncomment and set the specified IP
```

Install CoreDNS:

```shell
koevn@k8s-master-01:~/coredns$ cd ..
koevn@k8s-master-01:~$ sudo helm install coredns ./coredns/ -n kube-system \
    -f coredns/values.yaml
```

7. Install Metrics Server

Download the metrics-server yaml file

```shell
koevn@k8s-master-01:~$ sudo wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml \
    -O metrics-server.yaml
```

Edit the `metrics-server.yaml` file:

```yaml
# Around line 134 of the file
      - args:
        - --cert-dir=/tmp
        - --secure-port=10250
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        - --requestheader-client-ca-file=/opt/kubernetes/cert/front-proxy-ca.pem
        - --requestheader-username-headers=X-Remote-User
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
```

```yaml
# Around line 182 of the file
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
        - name: ca-ssl
          mountPath: /opt/kubernetes/cert
      volumes:
      - emptyDir: {}
        name: tmp-dir
      - name: ca-ssl
        hostPath:
          path: /opt/kubernetes/cert
```

Then deploy it:

```shell
koevn@k8s-master-01:~$ sudo kubectl apply -f metrics-server.yaml
```

Check the resource usage:

```shell
koevn@k8s-master-01:~$ sudo kubectl top node
NAME            CPU(cores)   CPU(%)   MEMORY(bytes)   MEMORY(%)
k8s-master-01   212m         5%       2318Mi          61%
k8s-master-02   148m         3%       2180Mi          57%
k8s-master-03   178m         4%       2103Mi          55%
k8s-worker-01   38m          1%       1377Mi          36%
k8s-worker-02   40m          2%       1452Mi          38%
k8s-worker-03   32m          1%       1475Mi          39%
```

8. Verify intra-cluster communication

8.1.0 Deploy a pod resource

```shell
koevn@k8s-master-01:~$ cat > busybox.yaml << EOF
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: harbor.koevn.com/library/busybox:1.37.0   # Here I use a private registry
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
EOF
```

Then deploy the Pod:

```shell
koevn@k8s-master-01:~$ sudo kubectl apply -f busybox.yaml
```

Check the Pod:

```shell
koevn@k8s-master-01:~$ sudo kubectl get pod
NAME      READY   STATUS    RESTARTS   AGE
busybox   1/1     Running   0          36s
```

8.2.0 Resolve Services in other namespaces from a Pod

View Services

```shell
koevn@k8s-master-01:~$ sudo kubectl get svc -A
NAMESPACE           NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
cilium-monitoring   grafana          NodePort    10.90.138.192   <none>        3000:31240/TCP   1d
cilium-monitoring   prometheus       NodePort    10.90.145.89    <none>        9090:32044/TCP   1d
default             kubernetes       ClusterIP   10.90.0.1       <none>        443/TCP          1d
kube-system         cilium-envoy     ClusterIP   None            <none>        9964/TCP         1d
kube-system         coredns          ClusterIP   10.90.0.10      <none>        53/UDP           1d
kube-system         hubble-metrics   ClusterIP   None            <none>        9965/TCP         1d
kube-system         hubble-peer      ClusterIP   10.90.63.177    <none>        443/TCP          1d
kube-system         hubble-relay     ClusterIP   10.90.157.4     <none>        80/TCP           1d
kube-system         hubble-ui        NodePort    10.90.13.110    <none>        80:32166/TCP     1d
kube-system         metrics-server   ClusterIP   10.90.61.226    <none>        443/TCP          1d
```

Test name resolution:

```shell
koevn@k8s-master-01:~$ sudo kubectl exec busybox -n default \
    -- nslookup hubble-ui.kube-system.svc.cluster.local
Server:    10.90.0.10
Address:   10.90.0.10:53

Name:      hubble-ui.kube-system.svc.cluster.local
Address:   10.90.13.110

koevn@k8s-master-01:~$ sudo kubectl exec busybox -n default \
    -- nslookup grafana.cilium-monitoring.svc.cluster.local
Server:    10.90.0.10
Address:   10.90.0.10:53

Name:      grafana.cilium-monitoring.svc.cluster.local
Address:   10.90.138.192

koevn@k8s-master-01:~$ sudo kubectl exec busybox -n default \
    -- nslookup kubernetes.default.svc.cluster.local
Server:    10.90.0.10
Address:   10.90.0.10:53

Name:      kubernetes.default.svc.cluster.local
Address:   10.90.0.1
```

8.3.0 Test the Kubernetes (443) and CoreDNS (53) ports from each node
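Where `telnet` or `nc` is not installed on a node, bash's built-in `/dev/tcp/HOST/PORT` redirection can perform the same TCP test. The function below is a sketch (the name `tcp_open` is hypothetical) and relies on the coreutils `timeout` command so that an unreachable address fails after a few seconds instead of hanging. It covers TCP only: UDP has no handshake, so an "open" result from `nc -zu` is best-effort, and the `nslookup` tests in 8.2.0 are the stronger evidence that CoreDNS answers on port 53.

```shell
# Hypothetical helper: succeed if a TCP connection to HOST:PORT opens within SECS seconds (default 3).
tcp_open() {
  local host="$1" port="$2" secs="${3:-3}"
  # A child bash opens /dev/tcp/HOST/PORT, which performs the TCP connect;
  # the connection is closed again when that child exits.
  timeout "$secs" bash -c ': < "/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

For example: `tcp_open 10.90.0.1 443 && echo "kubernetes Service reachable"`.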

```shell
koevn@k8s-master-01:~$ sudo telnet 10.90.0.1 443
Trying 10.90.0.1...
Connected to 10.90.0.1.
Escape character is '^]'

koevn@k8s-master-01:~$ sudo nc -zvu 10.90.0.10 53
10.90.0.10: inverse host lookup failed: Unknown host
(UNKNOWN) [10.90.0.10] 53 (domain) open

koevn@k8s-worker-03:~$ sudo telnet 10.90.0.1 443
Trying 10.90.0.1...
Connected to 10.90.0.1.
Escape character is '^]'

koevn@k8s-worker-03:~$ sudo nc -zvu 10.90.0.10 53
10.90.0.10: inverse host lookup failed: Unknown host
(UNKNOWN) [10.90.0.10] 53 (domain) open
```

8.4.0 Test the network connectivity between Pods

View pod information

```shell
koevn@k8s-master-01:~$ sudo kubectl get pods -A -o \
custom-columns="NAMESPACE:.metadata.namespace,STATUS:.status.phase,NAME:.metadata.name,IP:.status.podIP"
NAMESPACE           STATUS    NAME                               IP
cilium-monitoring   Running   grafana-679cd8bff-w9xm9            10.100.4.249
cilium-monitoring   Running   prometheus-87d4f66f7-6lmrz         10.100.3.238
default             Running   busybox                            10.100.4.232
kube-system         Running   cilium-envoy-4sjq5                 10.88.12.63
kube-system         Running   cilium-envoy-64694                 10.88.12.60
kube-system         Running   cilium-envoy-fzjjw                 10.88.12.62
kube-system         Running   cilium-envoy-twtw6                 10.88.12.61
kube-system         Running   cilium-envoy-vwstr                 10.88.12.65
kube-system         Running   cilium-envoy-whck6                 10.88.12.64
kube-system         Running   cilium-fkjcm                       10.88.12.63
kube-system         Running   cilium-h75vq                       10.88.12.64
kube-system         Running   cilium-hcx4q                       10.88.12.61
kube-system         Running   cilium-jz44w                       10.88.12.65
kube-system         Running   cilium-operator-58d8755c44-hnwmd   10.88.12.60
kube-system         Running   cilium-operator-58d8755c44-xmg9f   10.88.12.65
kube-system         Running   cilium-qx5mn                       10.88.12.62
kube-system         Running   cilium-wqmzc                       10.88.12.60
kube-system         Running   coredns-6f44546d75-qnl9d           10.100.0.187
kube-system         Running   hubble-relay-7cd9d88674-2tdcc      10.100.3.64
kube-system         Running   hubble-ui-9f5cdb9bd-8fwsx          10.100.2.214
kube-system         Running   metrics-server-76cb66cbf9-xfbzq    10.100.5.187
```

Open a shell in the busybox Pod and test the network:

```shell
koevn@k8s-master-01:~$ sudo kubectl exec -ti busybox -- sh
/ # ping 10.100.4.249
PING 10.100.4.249 (10.100.4.249): 56 data bytes
64 bytes from 10.100.4.249: seq=0 ttl=63 time=0.472 ms
64 bytes from 10.100.4.249: seq=1 ttl=63 time=0.063 ms
64 bytes from 10.100.4.249: seq=2 ttl=63 time=0.064 ms
64 bytes from 10.100.4.249: seq=3 ttl=63 time=0.062 ms

/ # ping 10.88.12.63
PING 10.88.12.63 (10.88.12.63): 56 data bytes
64 bytes from 10.88.12.63: seq=0 ttl=62 time=0.352 ms
64 bytes from 10.88.12.63: seq=1 ttl=62 time=0.358 ms
64 bytes from 10.88.12.63: seq=2 ttl=62 time=0.405 ms
64 bytes from 10.88.12.63: seq=3 ttl=62 time=0.314 ms
```

9. Install the dashboard

9.1.0 Pull the dashboard installation package

```shell
koevn@k8s-master-01:~$ sudo helm repo add kubernetes-dashboard \
    https://kubernetes.github.io/dashboard/

koevn@k8s-master-01:~$ sudo helm search repo \
    kubernetes-dashboard/kubernetes-dashboard --versions   # View the available versions
NAME                                        CHART VERSION   APP VERSION   DESCRIPTION
kubernetes-dashboard/kubernetes-dashboard   7.0.0                         General-purpose web UI for Kubernetes clusters
kubernetes-dashboard/kubernetes-dashboard   6.0.8           v2.7.0        General-purpose web UI for Kubernetes clusters
kubernetes-dashboard/kubernetes-dashboard   6.0.7           v2.7.0        General-purpose web UI for Kubernetes clusters

koevn@k8s-master-01:~$ sudo helm pull kubernetes-dashboard/kubernetes-dashboard --version 6.0.8   # Pin a specific version
koevn@k8s-master-01:~$ sudo tar xvf kubernetes-dashboard-*.tgz
koevn@k8s-master-01:~$ cd kubernetes-dashboard
```

Edit the `values.yaml` file:

```yaml
image:
  repository: harbor.koevn.com/library/kubernetesui/dashboard   # Here I use a private registry
  tag: "v2.7.0"                                                   # Empty by default; specify the version yourself
```

9.2.0 Deploy the dashboard

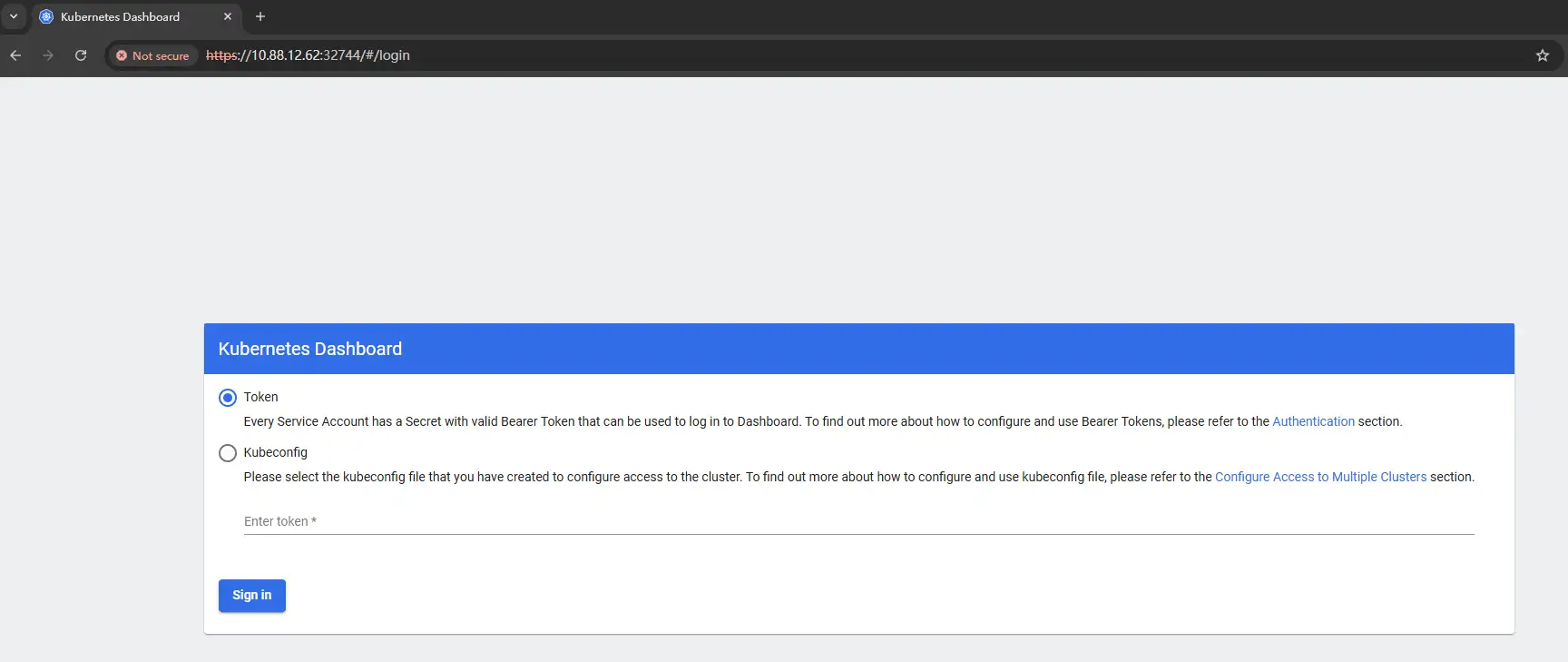

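Install from the unpacked chart directory so that the edited `values.yaml` is actually used (installing `kubernetes-dashboard/kubernetes-dashboard` from the repository would ignore it):

```shell
koevn@k8s-master-01:~/kubernetes-dashboard$ cd ..
koevn@k8s-master-01:~$ sudo helm install dashboard ./kubernetes-dashboard \
    --namespace kubernetes-dashboard \
    --create-namespace \
    -f kubernetes-dashboard/values.yaml
```

9.3.0 Create a temporary token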

```shell
koevn@k8s-master-01:~$ cat > dashboard-user.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
EOF
```

Apply it:

```shell
koevn@k8s-master-01:~$ sudo kubectl apply -f dashboard-user.yaml
```

Create a temporary token:

```shell
koevn@k8s-master-01:~$ sudo kubectl -n kube-system create token admin-user
eyJhbGciOiJSUzI1NiIsImtpZCI6IjRkV3lkZTU1dF9mazczemwwaUdxUElPWDZhejFyaDh2ZzRhZmN5RlN3T0EifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzUwOTExMzc1LCJpYXQiOjE3NTA5MDc3NzUsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwianRpIjoiMmQwZmViMzItNDJlNy00ODE0LWJmYjUtOGU5MTFhNWZhZDM2Iiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiZmEwZGFhNjAtNWEzNC00NTFjLWFiYmUtNTQ2Y2EwOWVkNWQyIn19LCJuYmYiOjE3NTA5MDc3NzUsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbi11c2VyIn0.XbF8s70UTzrOnf8TrkyC7-3vdxLKQU1aQiCRGgRWyzh4e-A0uFgjVfQ17IrFsZEOVpE8H9ydNc3dZbP81apnGegeFZ42J7KmUkSUJnh5UbiKjmfWwK9ysoP-bba5nnq1uWB_iFR6r4vr6Q_B4-YyAn1DVy70VNaHrfyakyvpJ69L-5eH2jHXn68uizXdi4brf2YEAwDlmBWufeQqtPx7pdnF5HNMyt56oxQb2S2gNrgwLvb8WV2cIKE3DvjQYfcQeaufWK3gn0y-2h5-3z3r4084vHrXAYJRkPmOKy7Fh-DZ8t1g7icNfDRg4geI48WrMH2vOk3E_cpjRxS7dC5P9A
```

9.4.0 Create a long-term token
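Tokens issued by `kubectl create token` expire (one hour by default; `--duration` requests a different lifetime), which is what makes a long-term token useful for the dashboard. The middle segment of a token is base64url-encoded JSON, so its `iat` (issued at) and `exp` (expires) claims can be inspected locally; for the temporary token above, `exp - iat` is 3600 seconds. `jwt_payload` is a hypothetical helper sketched with coreutils only, and it does not verify the signature.

```shell
# Hypothetical helper: print the decoded JSON payload (second segment) of a JWT.
# The signature is NOT verified; this only makes the claims readable.
jwt_payload() {
  local seg
  # Take the middle segment and map the base64url alphabet back to standard base64
  seg="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
  # Restore the '=' padding that base64url omits
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}
```

For example, `jwt_payload "$TOKEN"` on the temporary token above prints its claims, including `"exp":1750911375` and `"iat":1750907775`; with GNU date, `date -d @1750911375` turns the timestamp into a readable date.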

```shell
koevn@k8s-master-01:~$ cat > dashboard-user-token.yaml << EOF
apiVersion: v1
kind: Secret
metadata:
  name: admin-user
  namespace: kube-system
  annotations:
    kubernetes.io/service-account.name: "admin-user"
type: kubernetes.io/service-account-token
EOF
```

Apply it:

```shell
koevn@k8s-master-01:~$ sudo kubectl apply -f dashboard-user-token.yaml
```

View the token:

```shell
koevn@k8s-master-01:~$ sudo kubectl get secret admin-user -n kube-system -o jsonpath='{.data.token}' | base64 -d
eyJhbGciOiJSUzI1NiIsImtpZCI6IjRkV3lkZTU1dF9mazczemwwaUdxUElPWDZhejFyaDh2ZzRhZmN5RlN3T0EifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzUwOTExNzM5LCJpYXQiOjE3NTA5MDgxMzksImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwianRpIjoiMmZmNTE5NGEtOTlkMC00MDJmLTljNWUtMGQxOTMyZDkxNjgwIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiZmEwZGFhNjAtNWEzNC00NTFjLWFiYmUtNTQ2Y2EwOWVkNWQyIn19LCJuYmYiOjE3NTA5MDgxMzksInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbi11c2VyIn0.LJa37eblpk_OBWGqRgX2f_aCMiCrrpjY57dW8bskKUWu7ldLhgR6JIbICI0NVnvX4by3RX9v_FGnAPwU821VDp05oYT1KcTDXV1BC57G4QGL4kS9tBOrmRyXY0jxB8ETRmGx8ECiCJqNfrVdT99dm8oaFqJx1zq6jut70UwhxQCIh7C-QVqg6Gybbb3a9x25M2YvVHWStduN_swMOQxyQDBRtA0ARAyClu73o36pDCs_a56GizGspA4bvHpHPT-_y1i3EkeVjMsEl6JQ0PeJNQiM4fBvtJ2I_0kqoEHNMRYzZXEQNETXF9vqjkiEg7XBlKe1L2Ke1-xwK5ZBKFnPOg
```

9.5.0 Log in to the dashboard

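Change the dashboard Service type to NodePort. Use the namespace and Service name of your own installation; `sudo kubectl get svc -A | grep dashboard` lists them.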

```shell
koevn@k8s-master-01:~$ sudo kubectl edit svc -n kube-system kubernetes-dashboard
```

In the editor, change the Service to `type: NodePort` and save. Then view the mapped port:

```shell
koevn@k8s-master-01:~$ sudo kubectl get svc kubernetes-dashboard -n kube-system
NAME                   TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
kubernetes-dashboard   NodePort   10.90.211.135   <none>        443:32744/TCP   5d
```

Open https://10.88.12.62:32744 in a browser and log in with one of the tokens created above.